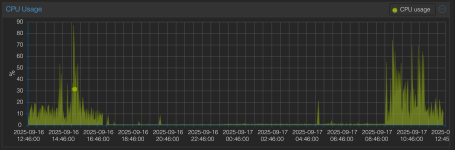

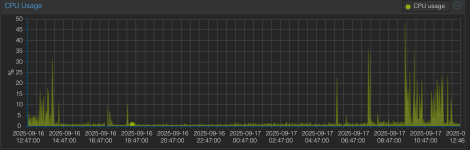

Did CPU accounting change between PVE 8 and PVE 9? I run 50+ nodes, all on PVE 8. I've started provisioning new nodes with PVE 9, and I'm seeing odd stats: many VMs report 120%-160% CPU usage, whereas I don't think I've ever seen more than 101% on PVE 8. Additionally, my CPU throttling algorithm (which relies on the 'daily' CPU RRD data) no longer seems to behave as it did before. Can a dev tell me whether anything changed regarding CPU accounting in PVE 9?

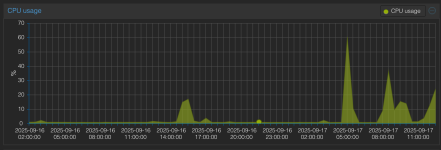

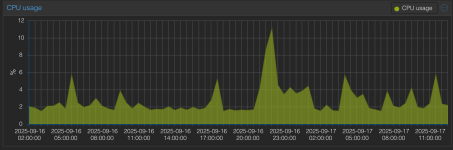

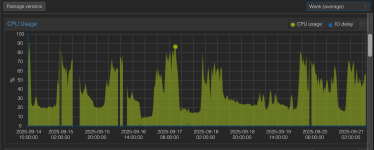

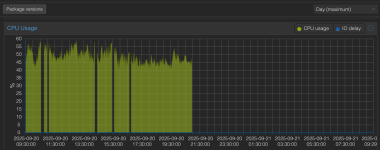

I've also noticed that in PVE 9, the "Day (Maximum)" and "Day (Average)" graphs are the same. In PVE 8 they differed.
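
For anyone who wants to check both observations outside the GUI, here is a minimal sketch. It assumes the samples come from the `rrddata` API endpoint for a VM (queried once per consolidation function for the day timeframe) and that each sample is an object with a `time` field and a `cpu` field expressed as a fraction, where 1.0 means 100%. Those field names and units are assumptions, and the helper names `summarize_cpu` and `series_identical` are made up for illustration.

```python
from typing import Iterable, Mapping, Optional


def summarize_cpu(samples: Iterable[Mapping[str, Optional[float]]],
                  key: str = "cpu") -> dict:
    """Summarize one RRD series: sample count, mean, peak, and how many
    samples exceed 100% (assumed to be a value above 1.0)."""
    # RRD responses can contain gaps, so skip samples without a value.
    values = [s[key] for s in samples if s.get(key) is not None]
    if not values:
        return {"count": 0, "mean": None, "peak": None, "over_100": 0}
    return {
        "count": len(values),
        "mean": sum(values) / len(values),
        "peak": max(values),
        "over_100": sum(1 for v in values if v > 1.0),
    }


def series_identical(avg_samples, max_samples,
                     key: str = "cpu", tol: float = 1e-9) -> bool:
    """Return True if the AVERAGE and MAXIMUM series agree (within tol)
    at every timestamp where both have a value."""
    avg_by_time = {s["time"]: s.get(key) for s in avg_samples}
    max_by_time = {s["time"]: s.get(key) for s in max_samples}
    shared = [
        t for t in avg_by_time
        if t in max_by_time
        and avg_by_time[t] is not None
        and max_by_time[t] is not None
    ]
    if not shared:
        return False  # nothing to compare, so do not claim they match
    return all(abs(avg_by_time[t] - max_by_time[t]) <= tol for t in shared)
```

If `series_identical` returns True for the day timeframe on a PVE 9 node but False on a PVE 8 node, that would match what the graphs show. A non-zero `over_100` count would confirm that the values above 100% are in the stored data and not just a rendering issue.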
