Hi,

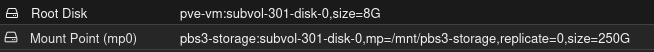

Just a thought, as I've recently discovered the shared=1 option for mount points. Would adding it to your mount line in the .conf file let Proxmox know that it is a shared mount, and therefore not to delete it?

So, for example, in the OP's 103.conf file, add ",shared=1" to the end of the "mp0:" line?
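Roughly, the edited line might look like the sketch below. This assumes mp0 is a bind mount; the host path /mnt/bindmounts/data and the container path /mnt/data are hypothetical placeholders, not the OP's actual values. Keep whatever is already on the existing mp0 line and only append ",shared=1":

```
# 103.conf (hypothetical paths): existing mp0 line with ",shared=1" appended
mp0: /mnt/bindmounts/data,mp=/mnt/data,shared=1
```

The same change can also be made from the CLI with `pct set 103 -mp0 /mnt/bindmounts/data,mp=/mnt/data,shared=1` (same hypothetical paths), which rewrites the mp0 entry in the config for you.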

The shared flag tells Proxmox VE that a volume is already shared between nodes (it won't automagically share the volume itself), so it doesn't need to be migrated. But as far as I know, it does not affect restore.