Hi

I’m wondering what the best-practice setup is for an iSCSI NAS backend with multipath. This is coming from a VMware environment, which we are migrating over to Proxmox VE.

Environment is:

NAS: Synology SA3200D, dual-controller NAS with RAID, iSCSI (but NFS possible). 3x 10GbE on each controller; the controllers work in master/slave mode. One 10GbE port is used for the production data network for file sharing, leaving the remaining 2 for iSCSI.

2x 10GbE switches

2x servers, with 4x 10GbE ports on each.

With the multiple network connections, is it recommended to isolate the two paths on their own network segments and VLANs?

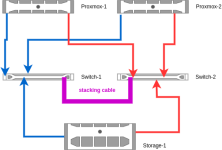

(Hmm, can't put an image in, so in words:)

NAS m/s nic 1: 172.24.1.1/24 <-> switch 1 <-> server1 nic 1: 172.24.1.10/24 & server2 nic 1: 172.24.1.11/24

NAS m/s nic 2: 172.23.2.1/24 <-> switch 2 <-> server1 nic 2: 172.23.2.10/24 & server2 nic 2: 172.23.2.11/24

Or is it OK if they are all in the same network segment?
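To make the two-segment layout concrete, here is a minimal Python sketch that checks the addressing plan above: each path should sit in exactly one subnet, and the two subnets should not overlap. The addresses are the ones listed above; the `path1`/`path2` labels and the `path_network` helper are just illustrative names, and this only validates the plan on paper, not the actual switch or VLAN configuration.

```python
import ipaddress

# Addresses copied from the layout above; "path1"/"path2" are only labels.
paths = {
    "path1": ["172.24.1.1/24", "172.24.1.10/24", "172.24.1.11/24"],
    "path2": ["172.23.2.1/24", "172.23.2.10/24", "172.23.2.11/24"],
}


def path_network(addresses):
    """Return the single subnet shared by every interface on one path."""
    networks = {ipaddress.ip_interface(a).network for a in addresses}
    if len(networks) != 1:
        raise ValueError(f"interfaces span several subnets: {networks}")
    return networks.pop()


nets = {name: path_network(addrs) for name, addrs in paths.items()}

# The two iSCSI paths are isolated at layer 3 if their subnets do not overlap.
isolated = not nets["path1"].overlaps(nets["path2"])
print(nets, "isolated:", isolated)
```

If all interfaces were moved into one segment instead, `isolated` would come out `False`, which is the alternative being asked about.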

I’ve also seen that it’s only possible to use LVM on iSCSI, so no thin provisioning, which means we will need more space.

Also, I’ve read that the quorum network should be set up on its own switch to reduce latency. Is there any reason I can’t use a private VLAN on an existing switch?

Regards

Damien
