Hi all,

I'm running a 3-node Proxmox VE 9 cluster with Ceph and experiencing unexpectedly low performance during rados bench tests. I’d appreciate any insights or suggestions.

Each OSD reports ~525 MB/s throughput with 4MB blocks in the OSD bench, so the disks themselves are capable.

Cluster Setup:

- Proxmox VE 9 on all nodes

- Ceph Quincy (default in PVE 9)

- 3 nodes: pve01, pve02, pve03

- 1 OSD per node, each on a Samsung PM863a SATA SSD (480GB), model SAMSUNG_MZ7L3480HCHQ-00A07

- Dedicated Ceph network using Proxmox SDN fabric

- Nodes are connected in a ring topology via direct 10Gbps DAC

- No separate WAL/DB devices

- Ceph and public networks are separated

Benchmark Results:

Using:

Code:

rados bench -p testbench 60 write --no-cleanup

and

Code:

rados bench -p testbench 60 rand

I get:

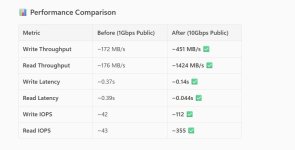

- ~172 MB/s write

- ~176 MB/s random read

- ~42–43 IOPS

- ~0.36–0.37s average latency
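A quick sanity check on these numbers, as a minimal sketch. It assumes the rados bench defaults of 16 concurrent operations and 4 MB objects, which the commands above do not override. Under that assumption, throughput, IOPS and latency should be tied together by Little's law (ops in flight = IOPS × average latency). The variable names are only illustrative.

```python
# Sanity check of the rados bench figures using Little's law:
#   ops in flight = IOPS * average latency
# Assumption: rados bench defaults (16 concurrent ops, 4 MB objects).

OBJECT_SIZE_MB = 4        # default object size (assumed, not overridden in the commands)
CONCURRENT_OPS = 16       # default -t value (assumed)

write_mb_s = 172          # measured write throughput from the results above
avg_latency_s = 0.365     # midpoint of the reported 0.36-0.37 s

# IOPS implied by the measured throughput
iops = write_mb_s / OBJECT_SIZE_MB

# Number of operations in flight implied by that IOPS and latency
in_flight = iops * avg_latency_s

# Throughput ceiling if latency stays the same at 16 concurrent ops
latency_bound_mb_s = CONCURRENT_OPS / avg_latency_s * OBJECT_SIZE_MB

print(f"IOPS implied by throughput: {iops:.1f}")
print(f"Ops in flight implied by latency: {in_flight:.1f}")
print(f"Throughput ceiling at this latency: {latency_bound_mb_s:.0f} MB/s")
```

If the assumption holds, the figures are self-consistent: about 43 IOPS at roughly 0.37 s works out to close to 16 outstanding 4 MB operations. That would suggest the result is bounded by per-operation latency at that concurrency rather than by raw disk bandwidth.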

OSD Bench Results:

Code:

ceph tell osd.* bench

iostat Output:

During the benchmark:

- sdb (OSD disk) shows only ~7–19% utilization

- CPU is ~99% idle

- Queue depth (aqu-sz) is low (~1.0–1.5)
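Since the low utilization and queue depth suggest the disks are not being saturated, one thing that could be tried is repeating the write test at several concurrency levels. The sketch below only builds the command lines and does not run them. `-t` (concurrent operations) is a standard rados bench option, while the helper name `bench_commands` and the chosen thread counts are hypothetical.

```python
import shlex


def bench_commands(pool, seconds, thread_counts):
    """Build rados bench write commands for several -t values (hypothetical helper)."""
    commands = []
    for threads in thread_counts:
        argv = [
            "rados", "bench", "-p", pool, str(seconds), "write",
            "-t", str(threads),   # number of concurrent operations
            "--no-cleanup",       # keep objects so a read test can follow
        ]
        commands.append(argv)
    return commands


# Pool name and duration match the commands used above; thread counts are illustrative.
for argv in bench_commands("testbench", 60, [16, 32, 64]):
    print(shlex.join(argv))
```

Each printed line can be run on a node as-is. If throughput scales with `-t`, that would point at latency per operation rather than a hard bandwidth limit. The leftover benchmark objects can be removed afterwards with `rados -p testbench cleanup`.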

What I’ve Tried:

- Checked SMART — all SSDs healthy

- Ceph is clean, no recovery/backfill

Questions:

- Is this performance expected for a 3-node, 3-OSD Ceph cluster with SATA SSDs?

- Would adding more OSDs per node significantly improve performance?

- Would separating WAL/DB to NVMe help, even with SATA data devices?

- Any Ceph tuning recommendations for this setup?

- Could the SDN ring topology be a limiting factor?