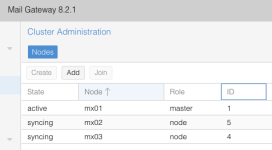

After a Debian update, my PMG cluster is no longer syncing. The main node is active, but the two other nodes are always in the 'syncing' state. Syslog keeps showing the following on both nodes:


```
pmgtunnel[4515]: status update error: command 'ip -details -json link show' failed: failed to execute
```

Service is running:

```bash
systemctl status pmgtunnel
● pmgtunnel.service - Proxmox Mail Gateway Cluster Tunnel Daemon
     Loaded: loaded (/lib/systemd/system/pmgtunnel.service; enabled; preset: enabled)
     Active: active (running) since Sat 2025-08-16 11:43:59 CEST; 4min 3s ago
    Process: 4512 ExecStart=/usr/bin/pmgtunnel start (code=exited, status=0/SUCCESS)
   Main PID: 4515 (pmgtunnel)
      Tasks: 1 (limit: 4579)
     Memory: 75.1M
        CPU: 927ms
     CGroup: /system.slice/pmgtunnel.service
             └─4515 pmgtunnel
```

I've removed the nodes and re-added them successfully with `pmgcm join` etc., but the situation stays the same. The `ip -details -json link show` command runs fine on the command line, and the paths are available for Perl. What's wrong?
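Since the command works in an interactive shell, one thing worth ruling out is the environment the daemon runs in. The sketch below is a hypothetical helper (`try_exec` is not part of PMG) that runs a command with an emptied environment and an explicit PATH and reports whether it could be started at all. It assumes GNU `env` semantics (exit status 127 when the command cannot be found, 126 when it is found but cannot be invoked) and assumes that "failed to execute" means the daemon could not start `ip`; neither assumption is confirmed by the log above.

```bash
#!/usr/bin/env bash
# Hypothetical helper, not part of PMG: run a command with an empty environment
# and an explicit PATH, then report whether it could be executed and its exit status.
try_exec() {
    local path=$1
    shift
    # env -i clears all inherited variables; only PATH is set explicitly,
    # and env searches for the command using that PATH.
    env -i PATH="$path" "$@" > /dev/null 2>&1
    local rc=$?
    case $rc in
        0)   echo "OK: '$*' ran with PATH=$path" ;;
        126) echo "FAIL: '$1' found but not executable (PATH=$path)" ;;
        127) echo "FAIL: '$1' not found (PATH=$path)" ;;
        *)   echo "FAIL: '$*' exited with status $rc (PATH=$path)" ;;
    esac
    return "$rc"
}
```

For example, `try_exec /usr/sbin:/usr/bin:/sbin:/bin ip -details -json link show` prints `OK` if `ip` can be found and exits successfully with that PATH (the PATH value here is only an illustration). Repeating the call with the PATH the daemon actually uses would show whether the command lookup is what fails.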

Update: The servers ARE actually syncing, so that's good. Only the states are not being updated.
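Because syncing works and only the status updates fail, it may also help to look at the environment the running pmgtunnel process was started with, rather than the one in a login shell. A minimal sketch, assuming a Linux `/proc` filesystem and root access; `start_path` is a hypothetical helper name. Note that `/proc/<pid>/environ` reflects the environment at process start, so changes a Perl daemon makes to `%ENV` afterwards may not be visible there.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print the PATH entry from the environment a process was
# started with. /proc/<pid>/environ is NUL-separated, so convert NULs to newlines.
start_path() {
    local pid=$1
    if [ ! -r "/proc/$pid/environ" ]; then
        echo "cannot read /proc/$pid/environ (wrong PID or not root?)" >&2
        return 1
    fi
    # grep exits non-zero if the process was started without a PATH variable.
    tr '\0' '\n' < "/proc/$pid/environ" | grep '^PATH='
}
```

On the node, something like `start_path "$(systemctl show -p MainPID --value pmgtunnel)"` would print the daemon's starting PATH, which can then be compared with `echo "$PATH"` in the shell where `ip` works.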
