First of all, thanks for this awesome beta release — great work!

We've noticed one major issue with this version: VM boot times (using OVMF) have become extremely slow. It now takes about 1 minute and 30 seconds from pressing "Start" to seeing any console output or UEFI POST completion.

This behavior did not occur on PVE 8.x — VM startup was almost instant in comparison.

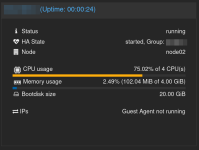

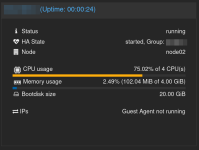

During this delay, CPU usage is consistently high (around 75–80%, regardless of how many cores are assigned), and RAM usage hovers at about 100 MB.

Below are two screenshots showing the VM state during this stall:

And here's the config of one of the affected VMs — though we’ve confirmed the issue occurs on all VMs across all nodes in the cluster:

Code:

    agent: 1
    bios: ovmf
    boot: order=virtio0
    cores: 4
    cpu: x86-64-v3
    efidisk0: ceph-nvme01:vm-117-disk-1,efitype=4m,pre-enrolled-keys=1,size=528K
    hotplug: disk,network
    machine: q35
    memory: 4096
    name: <redacted>
    net0: virtio=F2:AC:38:7B:9A:A6,bridge=vmbr0,tag=100
    numa: 0
    onboot: 1
    ostype: l26
    scsihw: virtio-scsi-single
    smbios1: uuid=8b8a56a6-0684-4b2b-a50a-69b23b789235
    sockets: 1
    tablet: 0
    tags: <redacted>
    vga: virtio
    virtio0: ceph-nvme01:vm-117-disk-0,discard=on,iothread=1,size=20G
    vmgenid: 3890b812-a1fc-4331-a4c0-49d88c7632a6

Some more information:

Bash:

    root@node02 ~ # pveversion
    pve-manager/9.0.0~11/c474e5a0b4bd391d (running kernel: 6.14.8-2-pve)
    root@node02 ~ # qemu-system-x86_64 --version
    QEMU emulator version 10.0.2 (pve-qemu-kvm_10.0.2-4)
    Copyright (c) 2003-2025 Fabrice Bellard and the QEMU Project developers

Has anyone else seen this behavior? Could this be a known issue in the beta?
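In case it helps anyone compare against their own VMs: below is a minimal sketch for pulling only the firmware- and boot-related keys out of a VM config. It assumes the input is `key: value` lines like the config above. `boot_keys` is a hypothetical helper name, not a Proxmox tool, and the choice of keys is only a guess at what might be relevant to the stall.

```shell
#!/usr/bin/env bash
# boot_keys is a hypothetical helper (not part of Proxmox): it reads
# "key: value" lines on stdin and keeps only firmware/boot-related keys.
# The key list is an assumption about what could matter for the OVMF stall.
boot_keys() {
  grep -E '^(bios|boot|cpu|efidisk0|machine|vga):'
}

# Sample input copied from the config above, so the sketch runs anywhere.
# On a node, the real config would be piped in instead:
#   qm config 117 | boot_keys
boot_keys <<'EOF'
agent: 1
bios: ovmf
boot: order=virtio0
cores: 4
cpu: x86-64-v3
machine: q35
memory: 4096
vga: virtio
EOF
```

Running the same filter on a VM that boots normally and on an affected one should make any difference in these settings easy to spot.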