Hello all,

I'm hoping to get some help with an issue I have been having with my Nimble while setting up multipathing. I have probably spent at least 10-12 hours researching and experimenting on my own, and I am finally ready to ask for help. I have followed a number of multipath tutorials and they have helped to a point, but I am stuck on the following issue:

My Nimble iSCSI discovery address is 10.32.0.28, which then points to 10.32.0.25 and 10.32.0.26. When I try to set up multipathing with discovery on the .28 address, it sometimes duplicates the iSCSI targets or even adds 3 or 4 different ones.

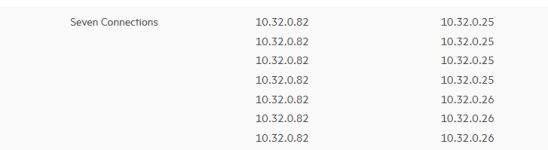

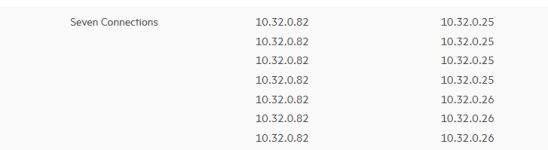

This picture is from the Nimble side. As you can see, it splits and picks which one it wants, but every time I try to do round robin, it never works correctly. If I do a multipath -ll, this is what I get:

Code:

    size=10T features='1 queue_if_no_path' hwhandler='1 alua' wp=rw
    `-+- policy='service-time 0' prio=50 status=active
      |- 14:0:0:0 sdi 8:128 active ready running
      |- 15:0:0:0 sdj 8:144 active ready running
      |- 16:0:0:0 sdk 8:160 active ready running
      |- 17:0:0:0 sdl 8:176 active ready running
      |- 18:0:0:0 sdm 8:192 active ready running
      |- 19:0:0:0 sdn 8:208 active ready running
      `- 20:0:0:0 sdo 8:224 active ready running

Here are the iSCSI sessions (redacted a little bit):

Code:

    root@proxmox-3:/# iscsiadm -m session
    tcp: [28] 10.32.0.26:3260,2461 iqn.2007-11.com.nimblestorage:nimble-g02-g773bb7315XXXXXXX (non-flash)
    tcp: [29] 10.32.0.26:3260,2461 iqn.2007-11.com.nimblestorage:nimble-g02-g773bb7315XXXXXXX (non-flash)
    tcp: [30] 10.32.0.26:3260,2461 iqn.2007-11.com.nimblestorage:nimble-g02-g773bb7315XXXXXXX (non-flash)
    tcp: [31] 10.32.0.26:3260,2461 iqn.2007-11.com.nimblestorage:nimble-g02-g773bb7315XXXXXXX (non-flash)
    tcp: [32] 10.32.0.26:3260,2461 iqn.2007-11.com.nimblestorage:nimble-g02-g773bb7315XXXXXXX (non-flash)
    tcp: [33] 10.32.0.26:3260,2461 iqn.2007-11.com.nimblestorage:nimble-g02-g773bb7315XXXXXXX (non-flash)
    tcp: [34] 10.32.0.26:3260,2461 iqn.2007-11.com.nimblestorage:nimble-g02-g773bb7315XXXXXXX (non-flash)
    tcp: [35] 10.32.0.25:3260,2461 iqn.2007-11.com.nimblestorage:nimble-g02-g773bb7315XXXXXXX (non-flash)
    tcp: [36] 10.32.0.25:3260,2461 iqn.2007-11.com.nimblestorage:nimble-g02-g773bb7315XXXXXXX (non-flash)
    tcp: [37] 10.32.0.25:3260,2461 iqn.2007-11.com.nimblestorage:nimble-g02-g773bb7315XXXXXXX (non-flash)
    tcp: [38] 10.32.0.25:3260,2461 iqn.2007-11.com.nimblestorage:nimble-g02-g773bb7315XXXXXXX (non-flash)
    tcp: [39] 10.32.0.25:3260,2461 iqn.2007-11.com.nimblestorage:nimble-g02-g773bb7315XXXXXXX (non-flash)
    tcp: [40] 10.32.0.25:3260,2461 iqn.2007-11.com.nimblestorage:nimble-g02-g773bb7315XXXXXXX (non-flash)
    tcp: [41] 10.32.0.25:3260,2461 iqn.2007-11.com.nimblestorage:nimble-g02-g773bb7315XXXXXXX (non-flash)
    tcp: [42] 10.32.0.28:3260,2460 iqn.2007-11.com.nimblestorage:proxmox-v773bb7315aXXXXXX (non-flash)
    tcp: [43] 10.32.0.28:3260,2460 iqn.2007-11.com.nimblestorage:proxmox-v773bb7315aXXXXXX (non-flash)
    tcp: [44] 10.32.0.28:3260,2460 iqn.2007-11.com.nimblestorage:proxmox-v773bb7315aXXXXXX (non-flash)
    tcp: [45] 10.32.0.28:3260,2460 iqn.2007-11.com.nimblestorage:proxmox-v773bb7315aXXXXXX (non-flash)
    tcp: [46] 10.32.0.28:3260,2460 iqn.2007-11.com.nimblestorage:proxmox-v773bb7315aXXXXXX (non-flash)
    tcp: [47] 10.32.0.28:3260,2460 iqn.2007-11.com.nimblestorage:proxmox-v773bb7315aXXXXXX (non-flash)
    tcp: [48] 10.32.0.28:3260,2460 iqn.2007-11.com.nimblestorage:proxmox-v773bb7315aXXXXXX (non-flash)

I have tried clearing out all of the connections and re-adding them, and it just keeps doing this. I would love to set up multipathing, but it is proving to be really difficult. I will take any help that anyone can give me. Thanks!
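P.S. To make the duplication easier to see at a glance, here is a rough Python sketch that tallies sessions per portal and target from pasted `iscsiadm -m session` output. The names `SESSION_RE`, `count_sessions`, and `format_report` are my own, and the regular expression only assumes the line layout shown in the listing above; it is a counting aid, not a fix.

```python
import re
from collections import Counter

# Assumed line layout, taken from the listing above:
#   tcp: [28] 10.32.0.26:3260,2461 iqn.2007-11.com.nimblestorage:... (non-flash)
SESSION_RE = re.compile(
    r"^\w+: \[(?P<sid>\d+)\] (?P<portal>\S+?),(?P<tpgt>\d+) (?P<target>\S+)"
)


def count_sessions(text):
    """Count sessions per (portal, target) pair; non-matching lines are skipped."""
    counts = Counter()
    for line in text.splitlines():
        match = SESSION_RE.match(line.strip())
        if match:
            counts[(match["portal"], match["target"])] += 1
    return counts


def format_report(counts):
    """Render one 'count  portal  target' line per pair, sorted by portal."""
    lines = []
    for (portal, target), n in sorted(counts.items()):
        lines.append(f"{n:3d}  {portal}  {target}")
    return "\n".join(lines)
```

Feeding the session list above into `count_sessions` and printing `format_report` of the result should give 7 sessions for each of the three portal/target pairs (10.32.0.25, 10.32.0.26, and 10.32.0.28), which is the duplication I am describing.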
