RTX-A5000

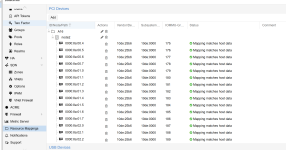

map id=10de:2231,iommugroup=46,node=cube-001,path=0000:43:00.4,subsystem-id=103c:0000

map id=10de:2231,iommugroup=47,node=cube-001,path=0000:43:00.5,subsystem-id=103c:0000

map id=10de:2231,iommugroup=48,node=cube-001,path=0000:43:00.6,subsystem-id=103c:0000

map id=10de:2231,iommugroup=49,node=cube-001,path=0000:43:00.7,subsystem-id=103c:0000

map id=10de:2231,iommugroup=50,node=cube-001,path=0000:43:01.0,subsystem-id=103c:0000

map id=10de:2231,iommugroup=51,node=cube-001,path=0000:43:01.1,subsystem-id=103c:0000

map id=10de:2231,iommugroup=52,node=cube-001,path=0000:43:01.2,subsystem-id=103c:0000

map id=10de:2231,iommugroup=53,node=cube-001,path=0000:43:01.3,subsystem-id=103c:0000

map id=10de:2231,iommugroup=54,node=cube-001,path=0000:43:01.4,subsystem-id=103c:0000

map id=10de:2231,iommugroup=55,node=cube-001,path=0000:43:01.5,subsystem-id=103c:0000

map id=10de:2231,iommugroup=56,node=cube-001,path=0000:43:01.6,subsystem-id=103c:0000

map id=10de:2231,iommugroup=57,node=cube-001,path=0000:43:01.7,subsystem-id=103c:0000

map id=10de:2231,iommugroup=58,node=cube-001,path=0000:43:02.0,subsystem-id=103c:0000

map id=10de:2231,iommugroup=59,node=cube-001,path=0000:43:02.1,subsystem-id=103c:0000

map id=10de:2231,iommugroup=60,node=cube-001,path=0000:43:02.2,subsystem-id=103c:0000

map id=10de:2231,iommugroup=61,node=cube-001,path=0000:43:02.3,subsystem-id=103c:0000

map id=10de:2231,iommugroup=62,node=cube-001,path=0000:43:02.4,subsystem-id=103c:0000

map id=10de:2231,iommugroup=63,node=cube-001,path=0000:43:02.5,subsystem-id=103c:0000

map id=10de:2231,iommugroup=64,node=cube-001,path=0000:43:02.6,subsystem-id=103c:0000

map id=10de:2231,iommugroup=65,node=cube-001,path=0000:43:02.7,subsystem-id=103c:0000

map id=10de:2231,iommugroup=66,node=cube-001,path=0000:43:03.0,subsystem-id=103c:0000

map id=10de:2231,iommugroup=67,node=cube-001,path=0000:43:03.1,subsystem-id=103c:0000

map id=10de:2231,iommugroup=68,node=cube-001,path=0000:43:03.2,subsystem-id=103c:0000

map id=10de:2231,iommugroup=69,node=cube-001,path=0000:43:03.3,subsystem-id=103c:0000
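
The entries above all follow the same `map key=value,...` shape, so they can be sanity-checked with a few lines of Python. This is a minimal sketch, not part of any tool: `parse_map_line` and `function_index` are hypothetical helper names, and the assumption that each path is `domain:bus:slot.function` with eight functions per slot is taken from the listed values only.

```python
from typing import Dict


def parse_map_line(line: str) -> Dict[str, str]:
    """Split one 'map key=value,...' entry into a dict of its fields."""
    line = line.strip()
    if not line.startswith("map "):
        raise ValueError(f"not a map entry: {line!r}")
    fields: Dict[str, str] = {}
    for pair in line[len("map "):].split(","):
        key, sep, value = pair.partition("=")
        if not sep:
            raise ValueError(f"missing '=' in field: {pair!r}")
        fields[key.strip()] = value.strip()
    return fields


def function_index(path: str) -> int:
    """Linear index of a PCI function on its bus (assumes 8 functions per slot)."""
    slot_func = path.rsplit(":", 1)[1]   # e.g. '00.4'
    slot, func = slot_func.split(".")
    return int(slot, 16) * 8 + int(func, 16)


# First and last entries from the list above.
first = parse_map_line(
    "map id=10de:2231,iommugroup=46,node=cube-001,"
    "path=0000:43:00.4,subsystem-id=103c:0000"
)
last = parse_map_line(
    "map id=10de:2231,iommugroup=69,node=cube-001,"
    "path=0000:43:03.3,subsystem-id=103c:0000"
)

# In this list the IOMMU group grows by one per function, so the
# difference between group and function index should stay constant.
offsets = {
    int(entry["iommugroup"]) - function_index(entry["path"])
    for entry in (first, last)
}
print(first["path"], last["path"], offsets)
```

Running the same check over every line is a quick way to spot a mistyped path or group number before the mapping is used.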

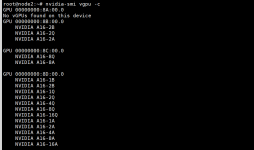

mdev 1