Hi all,

I've spent days trying to figure this out to no avail.

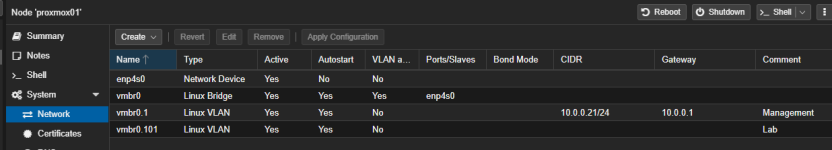

Quick details: the NIC is a Realtek RTL8125.

iperf3 from my host to another machine on the same switch hits 2.5 Gbps with no problem.

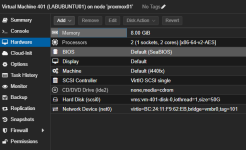

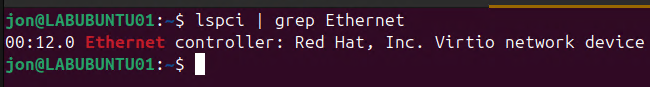

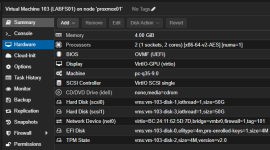

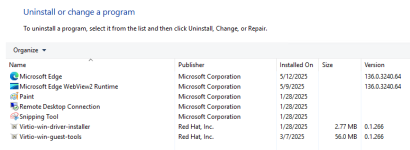

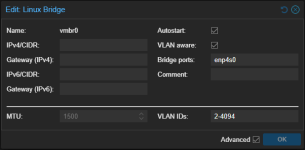

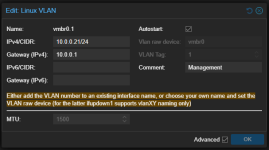

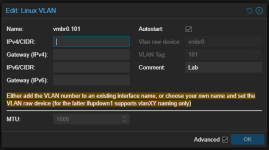

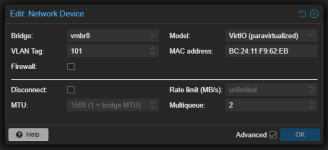

Going from a VM on the host to the host itself, I'm limited to 1 Gbps. I've tried setting the MTU to 9000 on all my network interfaces: no change. I've tried setting the VM's CPU type to host: no change. My main Ethernet interface detects 2500baseT/Full fine, and my bridge reports 10000Mb/s (at least on paper):
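One way to double-check that the MTU change actually stuck on every interface in the path, and what link speed the kernel reports for each, is to read the values straight from sysfs. A minimal Python sketch, assuming the standard Linux `/sys/class/net/<iface>/` attributes (`mtu`, `speed`, `operstate`); `tap100i0` is a hypothetical name for the VM's tap interface, so substitute the real one from `ip link`:

```python
from pathlib import Path


def read_link_info(iface: str, sysfs_root: str = "/sys/class/net") -> dict:
    """Read MTU, reported speed (Mb/s) and operstate for one interface from sysfs."""
    base = Path(sysfs_root) / iface
    info = {}
    for attr in ("mtu", "speed", "operstate"):
        try:
            raw = (base / attr).read_text().strip()
        except OSError:
            # Missing interface, or "speed" unreadable (e.g. link down or some virtual devices)
            info[attr] = None
            continue
        # operstate is text ("up", "down", ...); mtu and speed are integers
        info[attr] = raw if attr == "operstate" else int(raw)
    return info


if __name__ == "__main__":
    # enp4s0 and vmbr0 come from this post; tap100i0 is a hypothetical VM tap name
    for name in ("enp4s0", "vmbr0", "tap100i0"):
        print(name, read_link_info(name))
```

Every hop the VM traffic crosses should show the same MTU; a single interface still at 1500 would make the 9000 setting elsewhere moot.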

```
04:00.0 Ethernet controller: Realtek Semiconductor Co., Ltd. RTL8125 2.5GbE Controller (rev 05)
```

```
Settings for enp4s0:
        Supported ports: [ TP MII ]
        Supported link modes:   10baseT/Half 10baseT/Full
                                100baseT/Half 100baseT/Full
                                1000baseT/Full
                                2500baseT/Full
        Supported pause frame use: Symmetric Receive-only
        Supports auto-negotiation: Yes
        Supported FEC modes: Not reported
        Advertised link modes:  10baseT/Half 10baseT/Full
                                100baseT/Half 100baseT/Full
                                1000baseT/Full
                                2500baseT/Full
        Advertised pause frame use: No
        Advertised auto-negotiation: Yes
        Advertised FEC modes: Not reported
        Link partner advertised link modes:  10baseT/Half 10baseT/Full
                                             100baseT/Half 100baseT/Full
                                             1000baseT/Full
                                             2500baseT/Full
        Link partner advertised pause frame use: Symmetric Receive-only
        Link partner advertised auto-negotiation: Yes
        Link partner advertised FEC modes: Not reported
        Speed: 2500Mb/s
        Duplex: Full
        Auto-negotiation: on
        master-slave cfg: preferred slave
        master-slave status: slave
        Port: Twisted Pair
        PHYAD: 0
        Transceiver: external
        MDI-X: Unknown
        Supports Wake-on: pumbg
        Wake-on: d
        Link detected: yes

Settings for vmbr0:
        Supported ports: [ ]
        Supported link modes:   Not reported
        Supported pause frame use: No
        Supports auto-negotiation: No
        Supported FEC modes: Not reported
        Advertised link modes:  Not reported
        Advertised pause frame use: No
        Advertised auto-negotiation: No
        Advertised FEC modes: Not reported
        Speed: 10000Mb/s
        Duplex: Unknown! (255)
        Auto-negotiation: off
        Port: Other
        PHYAD: 0
        Transceiver: internal
        Link detected: yes
```

iperf3 from my host to a Windows box on the same switch:

```
Connecting to host 10.0.0.188, port 5201
[  5] local 10.0.0.21 port 44012 connected to 10.0.0.188 port 5201
[ ID] Interval           Transfer     Bitrate         Retr  Cwnd
[  5]   0.00-1.00   sec   282 MBytes  2.37 Gbits/sec    0    267 KBytes
[  5]   1.00-2.00   sec   280 MBytes  2.35 Gbits/sec    0    267 KBytes
[  5]   2.00-3.00   sec   282 MBytes  2.37 Gbits/sec    0    535 KBytes
[  5]   3.00-4.00   sec   281 MBytes  2.36 Gbits/sec    0    535 KBytes
[  5]   4.00-5.00   sec   280 MBytes  2.35 Gbits/sec    0    535 KBytes
[  5]   5.00-6.00   sec   281 MBytes  2.35 Gbits/sec    0    535 KBytes
[  5]   6.00-7.00   sec   281 MBytes  2.36 Gbits/sec    0    535 KBytes
[  5]   7.00-8.00   sec   281 MBytes  2.36 Gbits/sec    0    535 KBytes
[  5]   8.00-9.00   sec   280 MBytes  2.35 Gbits/sec    0    535 KBytes
[  5]   9.00-10.00  sec   281 MBytes  2.35 Gbits/sec    0    535 KBytes
- - - - - - - - - - - - - - - - - - - - - - - - -
[ ID] Interval           Transfer     Bitrate         Retr
[  5]   0.00-10.00  sec  2.74 GBytes  2.36 Gbits/sec    0             sender
[  5]   0.00-10.00  sec  2.74 GBytes  2.35 Gbits/sec                  receiver
```
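When comparing runs, note that iperf3's Transfer column appears to use binary byte units while Bitrate uses decimal bits; the rows above are consistent with that (282 MBytes in one second works out to about 2.37 Gbit/s, not 2.26). A small Python sketch of the conversion, where the unit table is an assumption checked only against the numbers in this post:

```python
# Assumption: iperf3 "KBytes/MBytes/GBytes" are powers of 1024,
# while its "bits/sec" figures are powers of 1000.
BYTE_UNITS = {"KBytes": 2**10, "MBytes": 2**20, "GBytes": 2**30}


def bitrate_bps(amount: float, unit: str, seconds: float) -> float:
    """Convert an iperf3 Transfer value over an interval into bits per second."""
    return amount * BYTE_UNITS[unit] * 8 / seconds


if __name__ == "__main__":
    # Figures copied from the summary lines in this post
    print(f"host -> Windows: {bitrate_bps(2.74, 'GBytes', 10.00) / 1e9:.2f} Gbit/s")
    print(f"VM -> host:      {bitrate_bps(1.08, 'GBytes', 10.01) / 1e6:.0f} Mbit/s")
```

This prints about 2.35 Gbit/s and 927 Mbit/s; the small gap to iperf3's own 929 to 931 Mbits/sec for the VM run comes from the rounded "1.08 GBytes".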

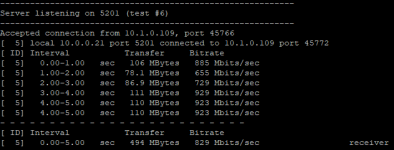

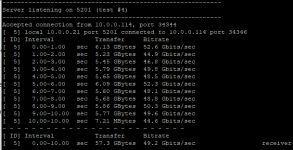

iperf3 from a VM to the host (the host-to-VM direction gives the same result):

```
Connecting to host 10.0.0.21, port 5201
[  5] local 10.1.0.109 port 40430 connected to 10.0.0.21 port 5201
[ ID] Interval           Transfer     Bitrate         Retr  Cwnd
[  5]   0.00-1.00   sec   112 MBytes   943 Mbits/sec  158    962 KBytes
[  5]   1.00-2.00   sec   111 MBytes   935 Mbits/sec    0   1.02 MBytes
[  5]   2.00-3.00   sec   110 MBytes   923 Mbits/sec    0   1.09 MBytes
[  5]   3.00-4.00   sec   111 MBytes   929 Mbits/sec    0   1.15 MBytes
[  5]   4.00-5.00   sec   112 MBytes   938 Mbits/sec   26    915 KBytes
[  5]   5.00-6.00   sec   110 MBytes   924 Mbits/sec    0   1004 KBytes
[  5]   6.00-7.00   sec   110 MBytes   921 Mbits/sec    0   1.06 MBytes
[  5]   7.00-8.00   sec   111 MBytes   931 Mbits/sec    0   1.14 MBytes
[  5]   8.00-9.00   sec   112 MBytes   940 Mbits/sec   35    898 KBytes
[  5]   9.00-10.00  sec   111 MBytes   929 Mbits/sec    0    996 KBytes
- - - - - - - - - - - - - - - - - - - - - - - - -
[ ID] Interval           Transfer     Bitrate         Retr
[  5]   0.00-10.00  sec  1.08 GBytes   931 Mbits/sec  219             sender
[  5]   0.00-10.01  sec  1.08 GBytes   929 Mbits/sec                  receiver
```
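For reference, both results sit very close to the textbook TCP ceiling of a physical Ethernet link. A back-of-the-envelope Python sketch, assuming standard Ethernet framing overhead and IPv4 TCP with the 12-byte timestamp option (typical on Linux, but an assumption here):

```python
# Assumed per-frame costs for standard Ethernet, in bytes
PREAMBLE_AND_SFD = 8
ETHERNET_HEADER = 14
FRAME_CHECK_SEQUENCE = 4
INTERFRAME_GAP = 12
# Assumed IPv4 (20) + TCP (20) headers plus the 12-byte TCP timestamp option
IP_AND_TCP_HEADERS = 20 + 20 + 12


def tcp_goodput_mbps(line_rate_mbps: float, mtu: int = 1500) -> float:
    """Best-case TCP payload rate for a stream of full-size frames."""
    payload = mtu - IP_AND_TCP_HEADERS
    on_the_wire = mtu + ETHERNET_HEADER + FRAME_CHECK_SEQUENCE + PREAMBLE_AND_SFD + INTERFRAME_GAP
    return line_rate_mbps * payload / on_the_wire


if __name__ == "__main__":
    for rate in (1000, 2500):
        for mtu in (1500, 9000):
            print(f"{rate} Mb/s link, MTU {mtu}: {tcp_goodput_mbps(rate, mtu):.0f} Mb/s")
```

Under these assumptions a 2.5 Gb/s link tops out near 2354 Mb/s (the host run gets 2.35 Gbits/sec) and a 1 Gb/s link at MTU 1500 near 941 Mb/s, just above the 929 to 931 Mbits/sec the VM gets. That is consistent with, though not proof of, the VM's traffic crossing a 1 GbE hop at a 1500-byte MTU somewhere on its path; with jumbo frames in effect end to end, the 1 Gb/s ceiling would be about 990 Mb/s.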

I'm pretty much out of ideas; every VM on this host behaves the same way.

Any help would be greatly appreciated. I've found a bunch of threads, but no real answers for onboard 2.5 Gbps NICs: they're either cases where the network driver is wrong and 2500baseT/Full isn't detected, or people adding secondary cards.
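Since most of those threads blame the driver, it's worth confirming which driver is actually bound to the NIC (`ethtool -i enp4s0` shows the same information). A minimal Python sketch, assuming the standard Linux sysfs layout where `/sys/class/net/<iface>/device/driver` is a symlink to the driver directory; on mainline kernels the RTL8125 is usually handled by the in-kernel `r8169` module, while Realtek's out-of-tree driver is named `r8125`:

```python
import os
from typing import Optional


def nic_driver(iface: str, sysfs_root: str = "/sys/class/net") -> Optional[str]:
    """Return the name of the kernel driver bound to iface, or None if there is none."""
    link = os.path.join(sysfs_root, iface, "device", "driver")
    try:
        # The link points at .../bus/<bus>/drivers/<name>; keep only <name>
        return os.path.basename(os.readlink(link))
    except OSError:
        # Software devices such as bridges have no device/driver link
        return None


if __name__ == "__main__":
    for name in ("enp4s0", "vmbr0"):
        print(name, "driver:", nic_driver(name))
```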
