I have Proxmox up and running with untagged and tagged networks configured. When I add a gateway for the last VLAN in the setup, the Proxmox host stops behaving correctly and can't reach the internet.

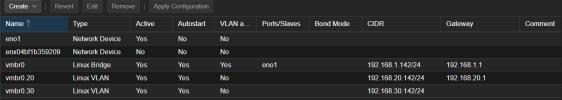

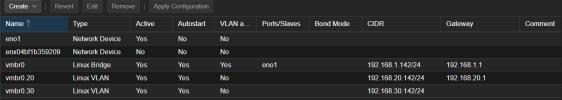

This is how `/etc/network/interfaces` looks without the gateway:


```
auto lo
iface lo inet loopback

iface eno1 inet manual

iface enx04bf1b359209 inet manual

auto vmbr0
iface vmbr0 inet static
    address 192.168.1.142/24
    gateway 192.168.1.1
    bridge-ports eno1
    bridge-stp off
    bridge-fd 0
    bridge-vlan-aware yes
    bridge-vids 20 30

auto vmbr0.20
iface vmbr0.20 inet static
    address 192.168.20.142/24
    gateway 192.168.20.1

auto vmbr0.30
iface vmbr0.30 inet static
    address 192.168.30.142/24
```

With this configuration everything seems to work. However, if I add `gateway 192.168.30.1` to `vmbr0.30`, the Proxmox host loses access to the internet.
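
As I understand interfaces(5), a `gateway` option in an `inet static` stanza asks ifupdown to install a default route through that address, so it may help to list every stanza that declares one. The script below is a hypothetical helper, not a Proxmox or ifupdown tool: a minimal parser that assumes the usual `iface NAME inet METHOD` layout and ignores everything except `iface` stanzas and `gateway` lines. Run against the configuration above with `gateway 192.168.30.1` added, it reports three IPv4 default gateways (`vmbr0`, `vmbr0.20` and `vmbr0.30`); note that the working configuration already declares two.

```python
"""List the default gateways declared in /etc/network/interfaces-style text.

Hypothetical helper for illustration only; not part of Proxmox or ifupdown.
"""
from __future__ import annotations

# The posted configuration with "gateway 192.168.30.1" added on vmbr0.30.
CONFIG = """\
auto lo
iface lo inet loopback

iface eno1 inet manual

iface enx04bf1b359209 inet manual

auto vmbr0
iface vmbr0 inet static
    address 192.168.1.142/24
    gateway 192.168.1.1
    bridge-ports eno1
    bridge-stp off
    bridge-fd 0
    bridge-vlan-aware yes
    bridge-vids 20 30

auto vmbr0.20
iface vmbr0.20 inet static
    address 192.168.20.142/24
    gateway 192.168.20.1

auto vmbr0.30
iface vmbr0.30 inet static
    address 192.168.30.142/24
    gateway 192.168.30.1
"""

TOP_LEVEL = ("auto", "source", "source-directory", "mapping")


def default_gateways(config_text: str) -> dict[str, str]:
    """Map each IPv4 `iface` stanza that has a `gateway` option to that gateway.

    Assumes a stanza starts at an `iface NAME inet METHOD` line and ends at the
    next `iface` line or top-level keyword (auto, allow-*, source, mapping).
    """
    gateways: dict[str, str] = {}
    current = None  # name of the IPv4 stanza being read, if any
    for raw in config_text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):
            continue  # skip blank lines and comment lines
        words = line.split()
        keyword = words[0]
        if keyword == "iface":
            # Only track IPv4 ("inet") stanzas; inet6 gateways are a separate family.
            current = words[1] if len(words) >= 3 and words[2] == "inet" else None
        elif keyword in TOP_LEVEL or keyword.startswith("allow-"):
            current = None  # a top-level keyword closes the previous stanza
        elif keyword == "gateway" and current is not None and len(words) >= 2:
            gateways[current] = words[1]
    return gateways


if __name__ == "__main__":
    found = default_gateways(CONFIG)
    for iface, gateway in found.items():
        print(f"{iface}: default gateway {gateway}")
    if len(found) > 1:
        print(f"{len(found)} stanzas each ask for an IPv4 default route")
```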

Any ideas about what the problem might be?