I've read through all the related posts I can find, but haven't found a clear, safe way out of my situation.

Can anyone recommend a strategy for moving this install to the new SSD, which is about 40 GB smaller?

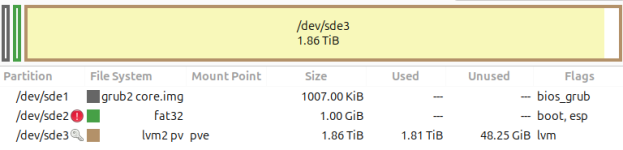

- I have an SSD (1.86 TB) that is dying. My whole Proxmox install is on this SSD.

- I have backups of all my VMs on a separate, healthy drive.

- I have a full drive image of the ailing SSD.

- My replacement SSD is 1.82 TB. Sadly it is smaller, so I cannot restore the drive image to it, and I don't want to spend more on a bigger one.

- GParted is a no-go: the LVM thin pool takes up the whole disk, and I cannot shrink the partition enough to fit on the new drive.

```
sde                            8:64  0  1.9T 0 disk
├─sde1                         8:65  0 1007K 0 part
├─sde2                         8:66  0    1G 0 part
└─sde3                         8:67  0  1.9T 0 part
  ├─pve-swap                 252:0   0    8G 0 lvm
  ├─pve-root                 252:1   0   96G 0 lvm
  ├─pve-data_tmeta           252:2   0 15.9G 0 lvm
  │ └─pve-data-tpool         252:4   0  1.7T 0 lvm
  │   ├─pve-data             252:5   0  1.7T 1 lvm
  │   ├─pve-vm--100--disk--1 252:6   0   64G 0 lvm
  │   ├─pve-vm--100--disk--0 252:7   0    4M 0 lvm
  │   ├─pve-vm--100--disk--2 252:8   0    4M 0 lvm
  │   ├─pve-vm--102--disk--0 252:9   0   32G 0 lvm
  │   ├─pve-vm--102--disk--1 252:10  0  100G 0 lvm
  │   ├─pve-vm--103--disk--0 252:11  0  100G 0 lvm
  │   ├─pve-vm--101--disk--1 252:12  0   50G 0 lvm
  │   ├─pve-vm--101--disk--0 252:13  0    4M 0 lvm
  │   ├─pve-vm--101--disk--2 252:14  0    4M 0 lvm
  │   ├─pve-vm--104--disk--1 252:15  0  100G 0 lvm
  │   └─pve-vm--103--disk--1 252:16  0   50G 0 lvm
  └─pve-data_tdata           252:3   0  1.7T 0 lvm
    └─pve-data-tpool         252:4   0  1.7T 0 lvm
      ├─pve-data             252:5   0  1.7T 1 lvm
      ├─pve-vm--100--disk--1 252:6   0   64G 0 lvm
      ├─pve-vm--100--disk--0 252:7   0    4M 0 lvm
      ├─pve-vm--100--disk--2 252:8   0    4M 0 lvm
      ├─pve-vm--102--disk--0 252:9   0   32G 0 lvm
      ├─pve-vm--102--disk--1 252:10  0  100G 0 lvm
      ├─pve-vm--103--disk--0 252:11  0  100G 0 lvm
      ├─pve-vm--101--disk--1 252:12  0   50G 0 lvm
      ├─pve-vm--101--disk--0 252:13  0    4M 0 lvm
      ├─pve-vm--101--disk--2 252:14  0    4M 0 lvm
      ├─pve-vm--104--disk--1 252:15  0  100G 0 lvm
      └─pve-vm--103--disk--1 252:16  0   50G 0 lvm
```
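To put the numbers from the `lsblk` output side by side, here is a small Python sketch that totals the logical volumes. It assumes `lsblk`'s `G`/`T` suffixes are GiB/TiB, counts every thin volume at its full virtual size (the worst case, since thin volumes usually hold less), and assumes the replacement's 1.82 TB is the same binary unit. The dictionary keys are just illustrative labels for the volumes listed.

```python
# Sizes copied from the lsblk output (lsblk uses binary units: G = GiB, T = TiB).
MIB_IN_GIB = 1 / 1024

# Thin volumes inside pve-data-tpool, at their full virtual size
# (worst case: assumes every block of every VM disk has been written).
thin_volumes_gib = {
    "vm-100-disk-1": 64,
    "vm-100-disk-0": 4 * MIB_IN_GIB,
    "vm-100-disk-2": 4 * MIB_IN_GIB,
    "vm-102-disk-0": 32,
    "vm-102-disk-1": 100,
    "vm-103-disk-0": 100,
    "vm-101-disk-1": 50,
    "vm-101-disk-0": 4 * MIB_IN_GIB,
    "vm-101-disk-2": 4 * MIB_IN_GIB,
    "vm-104-disk-1": 100,
    "vm-103-disk-1": 50,
}

# Regular (non-thin) LVs plus the thin pool's metadata volume.
other_lvs_gib = {"swap": 8, "root": 96, "data_tmeta": 15.9}

thin_total = sum(thin_volumes_gib.values())
other_total = sum(other_lvs_gib.values())
# Assumption: the post's "1.82 TB" is TiB, as lsblk would report it.
new_disk_gib = 1.82 * 1024

print(f"thin volumes, fully allocated: {thin_total:.2f} GiB")
print(f"swap + root + tmeta:           {other_total:.1f} GiB")
print(f"total worst case:              {thin_total + other_total:.1f} GiB")
print(f"replacement disk:              {new_disk_gib:.1f} GiB")
```

Under those assumptions the volumes come to roughly 616 GiB even if every VM disk were completely full, against about 1864 GiB on the replacement drive. So the data itself fits comfortably; what doesn't fit is the 1.7T size the thin pool was created with.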