For the moment I'll hold off on posting any configs, because at this point I'm assuming I'm missing something obvious. But maybe not.

In the quest for more speed, I upgraded my box from 1G to 10G with a PCIe card. It shows up in the network config, and all VMs are now attached to it with no problem.

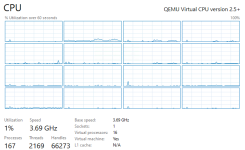

The issue I'm seeing is performance. File transfers were unexpectedly slow, so I got out iperf. WVM = Windows 10 VM, LVM = Linux VM. Here's what I'm seeing:

- LVM to itself: 50 to 70 Gbit/s
- WVM to itself: 2.1 Gbit/s
- WVM to WVM: 2.75 Gbit/s
- LVM to LVM: 2.3 Gbit/s
- WVM to another PC on 10G: 600 Mbit/s
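To put the iperf numbers in proportion, here is a quick calculation of each result as a share of the card's nominal 10 Gbit/s line rate. It is only arithmetic on the figures above. The 10 Gbit/s figure is the card's advertised speed, and for the 50 to 70 Gbit/s loopback result only the lower end is used.

```python
# Nominal line rate of the new 10G card, in Gbit/s.
LINE_RATE_GBPS = 10.0

# iperf results from the post, all converted to Gbit/s.
results_gbps = {
    "LVM to itself": 50.0,  # lower end of the reported 50 to 70 Gbit/s range
    "WVM to itself": 2.1,
    "WVM to WVM": 2.75,
    "LVM to LVM": 2.3,
    "WVM to another PC on 10G": 0.6,  # 600 Mbit/s
}


def utilisation(rate_gbps: float, line_rate_gbps: float = LINE_RATE_GBPS) -> float:
    """Return a measured rate as a percentage of the nominal line rate."""
    return 100.0 * rate_gbps / line_rate_gbps


# Print each measurement next to its share of the nominal line rate.
for name, rate in results_gbps.items():
    print(f"{name}: {rate:.2f} Gbit/s = {utilisation(rate):.1f}% of {LINE_RATE_GBPS:g} Gbit/s")
```

The two "to itself" runs are loopback tests inside a single VM, so they exercise the guest's network stack rather than the bridge or the card. The 600 Mbit/s result to the physical PC works out to about 6% of the nominal 10 Gbit/s, which is also below the 1 Gbit/s of the old link.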

So something is definitely wrong here. Everything is on VirtIO, and the Windows machines have the driver installed and report a 10G connection.

`vmbr1` is the new bridge on `enp5s0`.

2nd thing I noticed is that I can't access Proxmox VE over the new NIC, only over the old one. Do I have to point the base OS toward the new NIC? This isn't critical, but I did make a note of it.

`/etc/network/interfaces`:

```
auto lo
iface lo inet loopback

iface enp4s0 inet manual

auto enp5s0
iface enp5s0 inet manual

auto vmbr0
iface vmbr0 inet static
        address 192.168.4.199/24
        gateway 192.168.4.1
        bridge-ports enp4s0
        bridge-stp off
        bridge-fd 0
        bridge-vlan-aware yes
        bridge-vids 2-4094

auto vmbr1
iface vmbr1 inet static
        address 192.168.4.200/24
        gateway 192.168.4.1
        bridge-ports enp5s0
        bridge-stp off
        bridge-fd 0
```

`ip a` output:

```
1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000
    link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
    inet 127.0.0.1/8 scope host lo
       valid_lft forever preferred_lft forever
    inet6 ::1/128 scope host noprefixroute
       valid_lft forever preferred_lft forever
2: enp4s0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc mq master vmbr0 state UP group default qlen 1000
    link/ether d8:43:ae:6c:bf:12 brd ff:ff:ff:ff:ff:ff
3: enp5s0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc mq master vmbr1 state UP group default qlen 1000
    link/ether 98:b7:85:21:67:16 brd ff:ff:ff:ff:ff:ff
4: wlo1: <BROADCAST,MULTICAST> mtu 1500 qdisc noop state DOWN group default qlen 1000
    link/ether f8:fe:5e:ab:69:cc brd ff:ff:ff:ff:ff:ff
    altname wlp0s20f3
5: vmbr0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default qlen 1000
    link/ether d8:43:ae:6c:bf:12 brd ff:ff:ff:ff:ff:ff
    inet 192.168.4.199/24 scope global vmbr0
       valid_lft forever preferred_lft forever
    inet6 fe80::da43:aeff:fe6c:bf12/64 scope link
       valid_lft forever preferred_lft forever
6: vmbr1: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default qlen 1000
    link/ether 98:b7:85:21:67:16 brd ff:ff:ff:ff:ff:ff
    inet 192.168.4.200/24 scope global vmbr1
       valid_lft forever preferred_lft forever
    inet6 fe80::9ab7:85ff:fe21:6716/64 scope link
       valid_lft forever preferred_lft forever
```
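One detail in the bridge config that may be relevant to the "can't reach Proxmox VE on the new NIC" question: `vmbr0` and `vmbr1` both get an address in the same subnet and both declare the same gateway. The sketch below only confirms that overlap from the values copied out of the config. Whether the overlap actually causes the access problem is an assumption to test, not something this check proves.

```python
import ipaddress

# Bridge addresses and gateways copied from the interfaces config above.
bridges = {
    "vmbr0": {"address": "192.168.4.199/24", "gateway": "192.168.4.1"},
    "vmbr1": {"address": "192.168.4.200/24", "gateway": "192.168.4.1"},
}


def network_of(cidr: str) -> ipaddress.IPv4Network:
    """Return the subnet that an interface address in CIDR form belongs to."""
    return ipaddress.ip_interface(cidr).network


nets = {name: network_of(cfg["address"]) for name, cfg in bridges.items()}
gateways = {name: ipaddress.ip_address(cfg["gateway"]) for name, cfg in bridges.items()}

# True when both bridges sit on one subnet / point at one gateway.
same_subnet = nets["vmbr0"] == nets["vmbr1"]
same_gateway = gateways["vmbr0"] == gateways["vmbr1"]

for name in bridges:
    print(f"{name}: subnet {nets[name]}, gateway {gateways[name]}")
print(f"Both bridges on the same subnet: {same_subnet}")
print(f"Both bridges declare the same gateway: {same_gateway}")
```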