I have a local PBS server that several local Proxmox hosts back up to. I keep backups like 12x hourly, 7x daily, 4x weekly ...

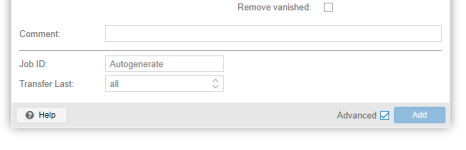

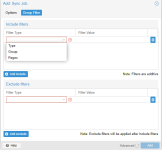

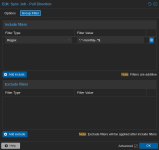

I want to push-sync this PBS to an offsite location, but I don't want to sync all of the local backups. Just a subset, like 1x hourly, 1x daily ...

Or just the last 1-2 of each of hourly/daily/weekly.

Is there a way to configure my sync job like this?
