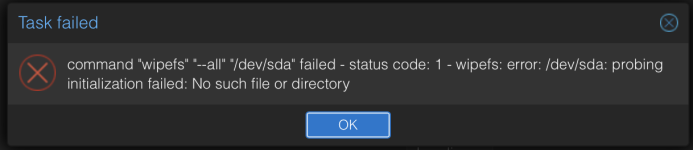

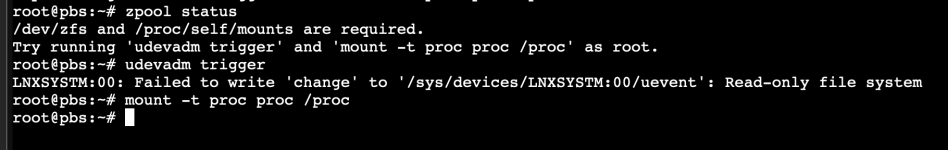

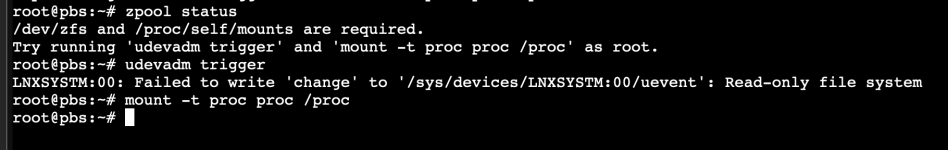

The error most likely stems from the disk still being in use. Maybe you had already imported the zpool? What is the output of `zpool status`?

Note: The suggestions above assume that your PBS root disk and the disk you want to set up your datastore on are different disks. Did you perhaps wipe the root disk? In that case, yes, you will have to start fresh.
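As a rough first check of the "disk still in use" theory, here is a minimal Python sketch that scans `/proc/mounts` for the datastore disk or any of its partitions. The device path `/dev/sdb` and the helper names are hypothetical; substitute your SSD's device. This only catches mounted filesystems: a disk that belongs to an imported zpool (or is used by LVM or swap) does not appear in `/proc/mounts` under its device path, which is why `zpool status` is still needed.

```python
from pathlib import Path


def is_same_or_partition(source: str, device: str) -> bool:
    """True if `source` is `device` itself or one of its numbered partitions."""
    if source == device:
        return True
    if not source.startswith(device):
        return False
    suffix = source[len(device):]
    if device[-1].isdigit():
        # Names ending in a digit use a "p" separator, e.g. /dev/nvme0n1 -> /dev/nvme0n1p1
        return suffix.startswith("p") and suffix[1:].isdigit()
    # Other names append the number directly, e.g. /dev/sdb -> /dev/sdb1
    return suffix.isdigit()


def mounts_of_device(device: str, mounts_text: str) -> list[tuple[str, str]]:
    """Return (source, mountpoint) pairs from /proc/mounts-style text that use `device`."""
    hits = []
    for line in mounts_text.splitlines():
        fields = line.split()  # source, mountpoint, fstype, options, dump, pass
        if len(fields) >= 2 and is_same_or_partition(fields[0], device):
            hits.append((fields[0], fields[1]))
    return hits


if __name__ == "__main__":
    device = "/dev/sdb"  # hypothetical: replace with the datastore SSD
    hits = mounts_of_device(device, Path("/proc/mounts").read_text())
    if hits:
        for source, target in hits:
            print(f"{source} is mounted on {target}")
    else:
        print(f"no mounted filesystem on {device} (ZFS/LVM/swap use not checked)")
```

If this prints a mountpoint for the SSD, unmount it before trying to create the datastore on that disk again.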

Regarding the NFS share: how did you mount it? Please share the output of `mount`.
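To pick the network mounts out of that output, here is a minimal Python sketch. It assumes the usual `SOURCE on TARGET type FSTYPE (OPTIONS)` line layout and paths without spaces; the set of filesystem types treated as "network" (`nfs`, `nfs4`, `cifs`, `smb3`) and the function name are illustrative assumptions.

```python
import re
import subprocess

# Assumed layout of one `mount` line: SOURCE on TARGET type FSTYPE (OPTIONS)
MOUNT_LINE = re.compile(
    r"^(?P<source>\S+) on (?P<target>\S+) type (?P<fstype>\S+) \((?P<options>.*)\)$"
)
NETWORK_FS = {"nfs", "nfs4", "cifs", "smb3"}


def network_mounts(mount_output: str) -> list[dict]:
    """Return source, target, fstype and parsed options for each network mount."""
    result = []
    for line in mount_output.splitlines():
        match = MOUNT_LINE.match(line.strip())
        if not match or match.group("fstype") not in NETWORK_FS:
            continue
        options = {}
        for opt in match.group("options").split(","):
            key, _, value = opt.partition("=")
            options[key] = value  # flags without "=" (e.g. "hard") map to ""
        result.append({
            "source": match.group("source"),
            "target": match.group("target"),
            "fstype": match.group("fstype"),
            "options": options,
        })
    return result


if __name__ == "__main__":
    output = subprocess.run(["mount"], capture_output=True, text=True, check=True).stdout
    for m in network_mounts(output):
        vers = m["options"].get("vers", "?")
        print(f'{m["fstype"]:5} {m["source"]} -> {m["target"]} (vers={vers})')
```

Applied to the `mount` output posted in this thread, it would report the NFSv4 export on `/mnt/remotebackups` and the CIFS share on `/mnt/remotebackups/backups`.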

The log output doesn't look like much, but here are the errors shown in the CLI. And yes, that is correct: PBS is running off an NVMe drive in a little N100 machine, and there is a second SSD installed in the PBS host that I'd like to back things up to.

Jan 17 12:23:29 pbs systemd-logind[94]: Removed session 237.

Jan 17 12:23:39 pbs dhclient[131]: DHCPREQUEST for 192.168.1.26 on eth0 to 192.168.1.1 port 67

Jan 17 12:23:39 pbs dhclient[131]: DHCPACK of 192.168.1.26 from 192.168.1.1

Jan 17 12:23:39 pbs dhclient[131]: bound to 192.168.1.26 -- renewal in 300 seconds.

Jan 17 12:23:39 pbs systemd[1]: Started postfix-resolvconf.service - Copies updated resolv.conf to postfix chroot and restarts postfix..

Jan 17 12:23:39 pbs systemd[1]: Reloading postfix@-.service - Postfix Mail Transport Agent (instance -)...

Jan 17 12:23:39 pbs systemd[1]: postfix-resolvconf.service: Deactivated successfully.

Jan 17 12:23:39 pbs postfix[31263]: Postfix is using backwards-compatible default settings

Jan 17 12:23:39 pbs postfix[31263]: See http://www.postfix.org/COMPATIBILITY_README.html for details

Jan 17 12:23:39 pbs postfix[31263]: To disable backwards compatibility use "postconf compatibility_level=3.6" and "postfix reload"

Jan 17 12:23:39 pbs postfix/postfix-script[31269]: refreshing the Postfix mail system

Jan 17 12:23:39 pbs postfix/master[341]: reload -- version 3.7.11, configuration /etc/postfix

Jan 17 12:23:39 pbs systemd[1]: Reloaded postfix@-.service - Postfix Mail Transport Agent (instance -).

Jan 17 12:23:39 pbs systemd[1]: Reloading postfix.service - Postfix Mail Transport Agent...

Jan 17 12:23:39 pbs systemd[1]: Reloaded postfix.service - Postfix Mail Transport Agent.

Output of `mount`:

root@pbs:~# mount

/dev/mapper/pve-vm--100--disk--0 on / type ext4 (rw,relatime,stripe=16)

none on /dev type tmpfs (rw,relatime,size=492k,mode=755,inode64)

proc on /proc type proc (rw,nosuid,nodev,noexec,relatime)

proc on /proc/sys/net type proc (rw,nosuid,nodev,noexec,relatime)

proc on /proc/sys type proc (ro,relatime)

proc on /proc/sysrq-trigger type proc (ro,relatime)

sysfs on /sys type sysfs (ro,nosuid,nodev,noexec,relatime)

sysfs on /sys/devices/virtual/net type sysfs (rw,nosuid,nodev,noexec,relatime)

fusectl on /sys/fs/fuse/connections type fusectl (rw,nosuid,nodev,noexec,relatime)

proc on /dev/.lxc/proc type proc (rw,relatime)

sys on /dev/.lxc/sys type sysfs (rw,relatime)

none on /sys/fs/cgroup type cgroup2 (rw,nosuid,nodev,noexec,relatime)

lxcfs on /proc/cpuinfo type fuse.lxcfs (rw,nosuid,nodev,relatime,user_id=0,group_id=0,allow_other)

lxcfs on /proc/diskstats type fuse.lxcfs (rw,nosuid,nodev,relatime,user_id=0,group_id=0,allow_other)

lxcfs on /proc/loadavg type fuse.lxcfs (rw,nosuid,nodev,relatime,user_id=0,group_id=0,allow_other)

lxcfs on /proc/meminfo type fuse.lxcfs (rw,nosuid,nodev,relatime,user_id=0,group_id=0,allow_other)

lxcfs on /proc/slabinfo type fuse.lxcfs (rw,nosuid,nodev,relatime,user_id=0,group_id=0,allow_other)

lxcfs on /proc/stat type fuse.lxcfs (rw,nosuid,nodev,relatime,user_id=0,group_id=0,allow_other)

lxcfs on /proc/swaps type fuse.lxcfs (rw,nosuid,nodev,relatime,user_id=0,group_id=0,allow_other)

lxcfs on /proc/uptime type fuse.lxcfs (rw,nosuid,nodev,relatime,user_id=0,group_id=0,allow_other)

lxcfs on /sys/devices/system/cpu type fuse.lxcfs (rw,nosuid,nodev,relatime,user_id=0,group_id=0,allow_other)

devpts on /dev/pts type devpts (rw,nosuid,noexec,relatime,gid=5,mode=620,ptmxmode=666,max=1026)

devpts on /dev/ptmx type devpts (rw,nosuid,noexec,relatime,gid=5,mode=620,ptmxmode=666,max=1026)

devpts on /dev/console type devpts (rw,nosuid,noexec,relatime,gid=5,mode=620,ptmxmode=666,max=1026)

devpts on /dev/tty1 type devpts (rw,nosuid,noexec,relatime,gid=5,mode=620,ptmxmode=666,max=1026)

devpts on /dev/tty2 type devpts (rw,nosuid,noexec,relatime,gid=5,mode=620,ptmxmode=666,max=1026)

none on /proc/sys/kernel/random/boot_id type tmpfs (ro,nosuid,nodev,noexec,relatime,size=492k,mode=755,inode64)

tmpfs on /dev/shm type tmpfs (rw,nosuid,nodev,inode64)

tmpfs on /run type tmpfs (rw,nosuid,nodev,size=3230524k,nr_inodes=819200,mode=755,inode64)

tmpfs on /run/lock type tmpfs (rw,nosuid,nodev,noexec,relatime,size=5120k,inode64)

hugetlbfs on /dev/hugepages type hugetlbfs (rw,relatime,pagesize=2M)

mqueue on /dev/mqueue type mqueue (rw,nosuid,nodev,noexec,relatime)

ramfs on /run/credentials/systemd-sysctl.service type ramfs (ro,nosuid,nodev,noexec,relatime,mode=700)

ramfs on /run/credentials/systemd-sysusers.service type ramfs (ro,nosuid,nodev,noexec,relatime,mode=700)

ramfs on /run/credentials/systemd-tmpfiles-setup-dev.service type ramfs (ro,nosuid,nodev,noexec,relatime,mode=700)

ramfs on /run/credentials/systemd-tmpfiles-setup.service type ramfs (ro,nosuid,nodev,noexec,relatime,mode=700)

sunrpc on /run/rpc_pipefs type rpc_pipefs (rw,relatime)

192.168.1.12:/volume1/remotebackups on /mnt/remotebackups type nfs4 (rw,relatime,vers=4.0,rsize=131072,wsize=131072,namlen=255,hard,proto=tcp,timeo=600,retrans=2,sec=sys,clientaddr=192.168.1.26,local_lock=none,addr=192.168.1.12)

//192.168.1.12/remotebackups/backups on /mnt/remotebackups/backups type cifs (rw,relatime,vers=3.1.1,cache=strict,username=storage,uid=0,noforceuid,gid=0,noforcegid,addr=192.168.1.12,file_mode=0777,dir_mode=0777,soft,nounix,serverino,mapposix,rsize=4194304,wsize=4194304,bsize=1048576,retrans=1,echo_interval=60,actimeo=1,closetimeo=1)

tmpfs on /run/user/0 type tmpfs (rw,nosuid,nodev,relatime,size=1615260k,nr_inodes=403815,mode=700,inode64)

proc on /proc type proc (rw,relatime)

proc on /proc type proc (rw,relatime)