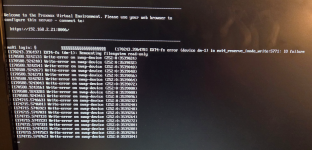

I am new to Proxmox and servers. I installed Proxmox on my IBM System x3550 M4 server and then installed TrueNAS on it. I set up PCI passthrough and started TrueNAS. Immediately, Proxmox showed a connection error, and the CLI displayed:

`EXT4-fs (dm-1): Remounting filesystem read only`

If I try to type any command, it says:

`-bash: /usr/bin/ls: Input/output error`

What should I do now?