Brand new to Proxmox and LVM (though I've been running Linux, VMs, and Docker for decades). The error I'm getting now is below, after some background.

Background: I started playing with Proxmox on an old 1TB SATA HDD I had. I then bought a 2TB NVMe drive and a PCIe adapter card, since my PC is too old to have an NVMe slot. I added the 2TB drive to the 1TB disk's LVM pve/data pool. It turns out the PC is also too old to boot from NVMe, so I bought a 1TB mSATA SSD to put in the card (it has a slot for each). I cloned the HDD to the SSD with Clonezilla and all was great. Then I noticed in GParted that the new 1TB SSD had about 23GB of extra space (I'm guessing the HDD used 1000 and the SSD 1024 for the MB count, or something like that). I then used GParted to extend the partition to the full size of the drive (note that, as far as I can tell, the HDD had no such extra space). I suspect this is maybe where things went sideways...
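The "~23GB extra" can be sanity-checked with a bit of arithmetic. This bash sketch assumes, since the post doesn't say, that the old HDD is a 1000 GB drive and the mSATA SSD a 1024 GB drive, with 1 GB = 10^9 bytes and 1 GiB = 2^30 bytes; the helper names `to_gib` and `to_gb` are made up for the example.

```bash
#!/usr/bin/env bash
# Rough check of the "~23GB extra" seen in GParted.
# ASSUMPTION (not stated in the post): the old HDD holds 1000 GB and the
# mSATA SSD holds 1024 GB. 1 GB = 10^9 bytes, 1 GiB = 2^30 bytes.

# Hypothetical helpers: convert a byte count to GiB / GB with 2 decimals.
to_gib() { awk -v b="$1" 'BEGIN { printf "%.2f", b / 1024^3 }'; }
to_gb()  { awk -v b="$1" 'BEGIN { printf "%.2f", b / 1000^3 }'; }

hdd_bytes=$(( 1000 * 1000**3 ))
ssd_bytes=$(( 1024 * 1000**3 ))
gap_bytes=$(( ssd_bytes - hdd_bytes ))

echo "HDD: $(to_gib "$hdd_bytes") GiB"   # 931.32 GiB
echo "SSD: $(to_gib "$ssd_bytes") GiB"   # 953.67 GiB
echo "gap: $(to_gb "$gap_bytes") GB = $(to_gib "$gap_bytes") GiB"   # 24.00 GB = 22.35 GiB

# For comparison: the whole 1024-vs-1000 factor applied to 1 TB (TiB vs TB).
unit_gap=$(( 1024**4 - 1000**4 ))
echo "TiB vs TB: $(to_gb "$unit_gap") GB"   # 99.51 GB
```

Under that assumption the gap is 24 GB (about 22.35 GiB), close to what GParted showed, and the SSD works out to 953.67 GiB, near the 953.9G that lsblk reports for sda further down. A full 1000-vs-1024 unit mix-up on a whole terabyte would instead be worth roughly 99.5 GB.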

I've tried so many things, but nothing seems to get me unstuck here. I also see many unanswered posts in this forum, which is kind of disheartening.

I had deleted my first VM (100) and was going to re-create it when I got the error below. Below the error are all the stats I've seen other posts include (or get asked for). I'd rather not start over; and if this were a "production" environment in my home lab, I wouldn't want to do that either, since it will have many VMs/LXCs set up and running, and being down for all of that would be excruciating.

```
WARNING: Thin pool pve/data needs check has read-only metadata.
TASK ERROR: unable to create VM 100 - lvcreate 'pve/vm-100-disk-0' error: Cannot create new thin volume, free space in thin pool pve/data reached threshold.
```

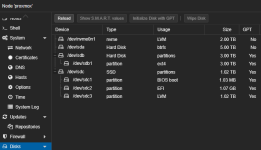

This is the one showing the pool is in read-only mode:

```
root@proxmox:~# lvdisplay pve/data
  --- Logical volume ---
  LV Name                data
  VG Name                pve
  LV UUID                HSgCyL-dsmq-r4gI-ajf1-gsXL-N63v-EGYkAB
  LV Write Access        read/write (activated read only)
  LV Creation host, time proxmox, 2024-09-28 13:52:59 -0700
  LV Pool metadata       data_tmeta
  LV Pool data           data_tdata
  LV Status              available
  # open                 0
  LV Size                2.63 TiB
  Allocated pool data    2.63%
  Allocated metadata     0.57%
  Current LE             690093
  Segments               1
  Allocation             inherit
  Read ahead sectors     auto
  - currently set to     256
  Block device           252:5
```

```
root@proxmox:~# pvs
  PV             VG  Fmt  Attr    PSize PFree
  /dev/nvme0n1p1 pve lvm2 a--    <1.82t     0
  /dev/sda3      pve lvm2 a--  <952.87g     0
```

```
root@proxmox:~# pvscan
  PV /dev/sda3        VG pve   lvm2 [<952.87 GiB / 0 free]
  PV /dev/nvme0n1p1   VG pve   lvm2 [<1.82 TiB / 0 free]
  Total: 2 [<2.75 TiB] / in use: 2 [<2.75 TiB] / in no VG: 0 [0 ]
```

```
root@proxmox:~# pvdisplay
  --- Physical volume ---
  PV Name               /dev/sda3
  VG Name               pve
  PV Size               <952.87 GiB / not usable 1.00 MiB
  Allocatable           yes (but full)
  PE Size               4.00 MiB
  Total PE              243934
  Free PE               0
  Allocated PE          243934
  PV UUID               Bw6w32-1m0q-ed1h-esEn-qSZZ-nix6-J1EWLd

  --- Physical volume ---
  PV Name               /dev/nvme0n1p1
  VG Name               pve
  PV Size               <1.82 TiB / not usable 4.00 MiB
  Allocatable           yes (but full)
  PE Size               4.00 MiB
  Total PE              476931
  Free PE               0
  Allocated PE          476931
  PV UUID               5S1rOr-mzeA-zOly-quph-QaZ0-dGF7-Chfm40
```
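The extent counts in these dumps can be cross-checked with plain shell arithmetic. Every number in this sketch is copied from the pvdisplay, lvdisplay, lvs and vgdisplay output in this post; the one assumption is that the two hidden 8.10 GiB volumes, [data_tmeta] and [lvol0_pmspare], are the same size.

```bash
#!/usr/bin/env bash
# Account for every physical extent (PE) in VG "pve".
# Numbers are copied from the pvdisplay/lvdisplay/lvs output in this post.
pe_mib=4                              # PE Size 4.00 MiB
sda3_pe=243934                        # Total PE on /dev/sda3
nvme_pe=476931                        # Total PE on /dev/nvme0n1p1
total_pe=$(( sda3_pe + nvme_pe ))

data_pe=690093                        # "Current LE" of pve/data
root_pe=$(( 96 * 1024 / pe_mib ))     # root LV, 96.00 GiB
swap_pe=$(( 8 * 1024 / pe_mib ))      # swap LV, 8.00 GiB

# Whatever is left should be the two hidden 8.10 GiB LVs
# ([data_tmeta] and [lvol0_pmspare]), ASSUMED to be equal in size.
left_pe=$(( total_pe - data_pe - root_pe - swap_pe ))

echo "total PE:          $total_pe"                                    # 720865
echo "pve/data:          $(( data_pe * pe_mib )) MiB"                  # 2760372 MiB
echo "tmeta and pmspare: $left_pe PE, $(( left_pe * pe_mib / 2 )) MiB each"  # 4148 PE, 8296 MiB each
```

The leftover 4148 extents split into two 8296 MiB (about 8.10 GiB) volumes, so every extent in pve is accounted for. That is why the VG shows 0 free even though the thin pool itself is only 2.63% allocated.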

```
root@proxmox:~# lvs -a
  LV              VG  Attr        LSize Pool Origin Data%  Meta%  Move Log Cpy%Sync Convert
  data            pve twi-cotzM-  2.63t              2.63   0.57
  [data_tdata]    pve Twi-ao----  2.63t
  [data_tmeta]    pve ewi-ao----  8.10g
  [lvol0_pmspare] pve ewi-------  8.10g
  root            pve -wi-ao---- 96.00g
  swap            pve -wi-ao----  8.00g
  vm-101-disk-0   pve Vwi-a-tz--  8.00g data        83.85
  vm-102-disk-0   pve Vwi-a-tz--  8.00g data        29.07
  vm-103-disk-0   pve Vwi-a-tz-- 60.00g data        88.86
```
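The `Attr` strings in the `lvs -a` output are where LVM reports the pool's condition. Below is a small bash sketch that decodes the positions most relevant to thin pools (1: type, 2: permissions, 5: state, 9: health), based on my reading of the attribute table in the lvs(8) man page. It maps only a subset of letters, so check the man page for your LVM version; the function name `decode_lv_attr` is made up for this example.

```bash
#!/usr/bin/env bash
# Decode selected lv_attr positions, following the attribute table in lvs(8).
# Partial sketch: only a few letters per position are mapped; anything else
# is printed as "other (<letter>)". decode_lv_attr is a hypothetical name.

decode_lv_attr() {
  local a="$1"

  case "${a:0:1}" in              # position 1: volume type
    t) echo "type: thin pool" ;;
    T) echo "type: thin pool data" ;;
    e) echo "type: pool metadata or metadata spare" ;;
    V) echo "type: thin volume" ;;
    "-") echo "type: plain volume" ;;
    *) echo "type: other (${a:0:1})" ;;
  esac

  case "${a:1:1}" in              # position 2: permissions
    w) echo "permissions: writeable" ;;
    r) echo "permissions: read-only" ;;
    R) echo "permissions: read-only activation of a writeable volume" ;;
    *) echo "permissions: other (${a:1:1})" ;;
  esac

  case "${a:4:1}" in              # position 5: state
    a) echo "state: active" ;;
    c) echo "state: thin-pool check needed" ;;
    C) echo "state: suspended thin-pool check needed" ;;
    "-") echo "state: inactive" ;;
    *) echo "state: other (${a:4:1})" ;;
  esac

  case "${a:8:1}" in              # position 9: volume health
    M) echo "health: metadata read-only" ;;
    D) echo "health: out of data space" ;;
    F) echo "health: failed" ;;
    "-") echo "health: no flag set" ;;
    *) echo "health: other (${a:8:1})" ;;
  esac
}

decode_lv_attr "twi-cotzM-"   # pve/data from the lvs -a output above
```

For `pve/data` (`twi-cotzM-`) it prints a thin pool whose state is "check needed" and whose metadata is read-only, which is consistent with the WARNING in the task error.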

```
root@proxmox:~# vgdisplay pve
  --- Volume group ---
  VG Name               pve
  System ID
  Format                lvm2
  Metadata Areas        2
  Metadata Sequence No  35
  VG Access             read/write
  VG Status             resizable
  MAX LV                0
  Cur LV                6
  Open LV               2
  Max PV                0
  Cur PV                2
  Act PV                2
  VG Size               <2.75 TiB
  PE Size               4.00 MiB
  Total PE              720865
  Alloc PE / Size       720865 / <2.75 TiB
  Free PE / Size        0 / 0
  VG UUID               sMiGZL-4dsT-A2cg-xmOl-YGIN-nMwu-ylGCfG
```

I can't get `lvconvert --repair` to ever work because the pool is active, but I can't get it to ever NOT be active...

```
root@proxmox:~# lvscan -a
  ACTIVE            '/dev/pve/data' [2.63 TiB] inherit
  ACTIVE            '/dev/pve/swap' [8.00 GiB] inherit
  ACTIVE            '/dev/pve/root' [96.00 GiB] inherit
  ACTIVE            '/dev/pve/vm-101-disk-0' [8.00 GiB] inherit
  ACTIVE            '/dev/pve/vm-102-disk-0' [8.00 GiB] inherit
  ACTIVE            '/dev/pve/vm-103-disk-0' [60.00 GiB] inherit
  ACTIVE            '/dev/pve/data_tdata' [2.63 TiB] inherit
  ACTIVE            '/dev/pve/data_tmeta' [8.10 GiB] inherit
  inactive          '/dev/pve/lvol0_pmspare' [8.10 GiB] inherit
root@proxmox:~# lvconvert --repair pve/data
  Active pools cannot be repaired. Use lvchange -an first.
root@proxmox:~# lvchange -an -v -f /dev/pve/data
lvchange -an -v -f /dev/pve/data_tdata
lvchange -an -v -f /dev/pve/data_tmeta
lvchange -an -v -f /dev/pve/vm-101-disk-0
lvchange -an -v -f /dev/pve/vm-102-disk-0
lvchange -an -v -f /dev/pve/vm-103-disk-0
lvscan -a
  Deactivating logical volume pve/data.
  Removing pve-data (252:5)
  Deactivating logical volume pve/data_tdata.
  Device pve-data_tdata (252:3) is used by another device.
  Deactivating logical volume pve/data_tmeta.
  Device pve-data_tmeta (252:2) is used by another device.
  Deactivating logical volume pve/vm-101-disk-0.
  Removing pve-vm--101--disk--0 (252:6)
  Deactivating logical volume pve/vm-102-disk-0.
  Removing pve-vm--102--disk--0 (252:7)
  Deactivating logical volume pve/vm-103-disk-0.
  Not monitoring pve/data with libdevmapper-event-lvm2thin.so
  Unmonitored LVM-sMiGZL4dsTA2cgxmOlYGINnMwuylGCfGHSgCyLdsmqr4gIajf1gsXLN63vEGYkAB-tpool for events
  Removing pve-vm--103--disk--0 (252:8)
  Removing pve-data-tpool (252:4)
  Removing pve-data_tdata (252:3)
  Removing pve-data_tmeta (252:2)
  inactive          '/dev/pve/data' [2.63 TiB] inherit
  ACTIVE            '/dev/pve/swap' [8.00 GiB] inherit
  ACTIVE            '/dev/pve/root' [96.00 GiB] inherit
  inactive          '/dev/pve/vm-101-disk-0' [8.00 GiB] inherit
  inactive          '/dev/pve/vm-102-disk-0' [8.00 GiB] inherit
  inactive          '/dev/pve/vm-103-disk-0' [60.00 GiB] inherit
  inactive          '/dev/pve/data_tdata' [2.63 TiB] inherit
  inactive          '/dev/pve/data_tmeta' [8.10 GiB] inherit
  inactive          '/dev/pve/lvol0_pmspare' [8.10 GiB] inherit
root@proxmox:~# lvconvert --repair pve/data
  Active pools cannot be repaired. Use lvchange -an first.
```

```
root@proxmox:~# lsblk
NAME                         MAJ:MIN RM   SIZE RO TYPE MOUNTPOINTS
sda                            8:0    0 953.9G  0 disk
├─sda1                         8:1    0  1007K  0 part
├─sda2                         8:2    0     1G  0 part
└─sda3                         8:3    0 952.9G  0 part
  ├─pve-swap                 252:0    0     8G  0 lvm  [SWAP]
  ├─pve-root                 252:1    0    96G  0 lvm  /
  ├─pve-data_tmeta           252:2    0   8.1G  0 lvm
  │ └─pve-data-tpool         252:4    0   2.6T  0 lvm
  │   ├─pve-data             252:5    0   2.6T  1 lvm
  │   ├─pve-vm--101--disk--0 252:6    0     8G  0 lvm
  │   ├─pve-vm--102--disk--0 252:7    0     8G  0 lvm
  │   └─pve-vm--103--disk--0 252:8    0    60G  0 lvm
  └─pve-data_tdata           252:3    0   2.6T  0 lvm
    └─pve-data-tpool         252:4    0   2.6T  0 lvm
      ├─pve-data             252:5    0   2.6T  1 lvm
      ├─pve-vm--101--disk--0 252:6    0     8G  0 lvm
      ├─pve-vm--102--disk--0 252:7    0     8G  0 lvm
      └─pve-vm--103--disk--0 252:8    0    60G  0 lvm
sdb                            8:16   0 465.8G  0 disk
├─sdb1                         8:17   0   512M  0 part
├─sdb2                         8:18   0     1K  0 part
├─sdb5                         8:21   0 318.2G  0 part
└─sdb6                         8:22   0   147G  0 part
sdc                            8:32   1     0B  0 disk
sdd                            8:48   1     0B  0 disk
sde                            8:64   1     0B  0 disk
sdf                            8:80   1     0B  0 disk
sr0                           11:0    1  1024M  0 rom
nvme0n1                      259:0    0   1.8T  0 disk
└─nvme0n1p1                  259:1    0   1.8T  0 part
  └─pve-data_tdata           252:3    0   2.6T  0 lvm
    └─pve-data-tpool         252:4    0   2.6T  0 lvm
      ├─pve-data             252:5    0   2.6T  1 lvm
      ├─pve-vm--101--disk--0 252:6    0     8G  0 lvm
      ├─pve-vm--102--disk--0 252:7    0     8G  0 lvm
      └─pve-vm--103--disk--0 252:8    0    60G  0 lvm
```