I'm not sure how I managed to do this, but I must have messed up the initial config of my PVE server.

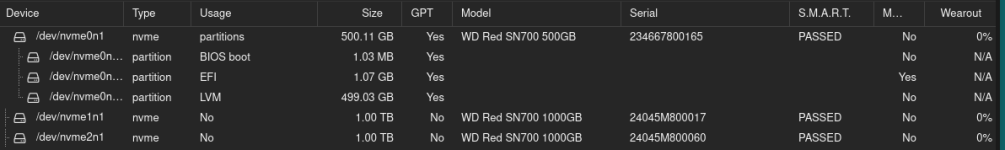

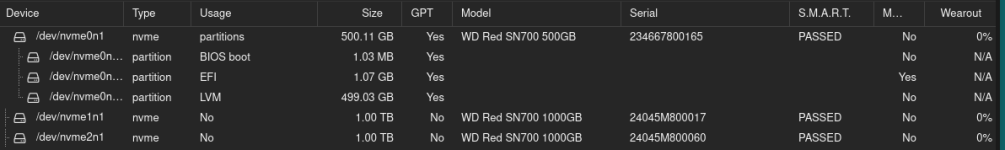

I have 3x NVMe:

- 1x 500GB for OS

- 2x 1TB for VMs

How should I move the existing VMs, etc., to the 1TB NVMe?