Hello,

I have just created a cluster and set up Ceph in Proxmox VE, but HA doesn't work: the status shows "none" even after I add the guest to HA. I can't even migrate it to other nodes. How can I fix this?

Also, I never checked before whether it's normal for Proxmox to automatically create an LVM volume when I create a Ceph pool. Is it normal? I ask because it doesn't let me create an OSD. When it creates the LVM volume at the start, I delete it so I can create the Ceph OSD, but after I create the OSD, an LVM volume is created again. What could be causing the guest not to be HA?

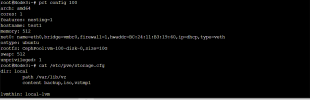

It should be OK:

The status still shows "none" after I set it to "started":

Best regards.