Greetings,

I broke my own rule and jump on the recent update and it hit me in the face:

I have a cluster with 3 Proxmox servers (pmx1 & pmx3; pmx2 used just for quorum)

The sequence used to upgrade was:

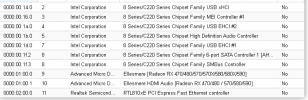

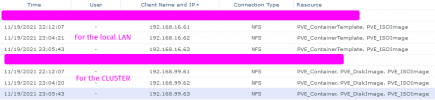

Now I have this in the cluster

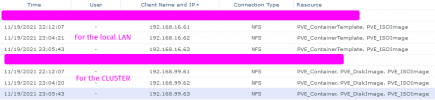

I use a Synology NAS as network storage with NFS shared folders

This is the cluster storage

The same occurs if I try to migrate some CT from pmx1 -> pm2:

If I try to migrate from pmx3 -> pmx1 a CT that is offline the error persists:

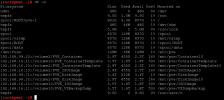

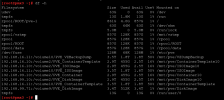

This is what

At this moment (11am), staff is working on VMs and I can't restart PMX1.

Thank you for your help.

Regards

I broke my own rule and jumped on the recent update, and it hit me in the face.

I have a cluster with 3 Proxmox servers (pmx1 and pmx3; pmx2 is used only for quorum).
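As background (not part of the original report): Proxmox VE clusters rely on corosync's votequorum, where by default each node carries one vote and the cluster is quorate only while more than half of the expected votes are present. The minimal sketch below, which assumes the default of one vote per node, shows why a third, quorum-only node such as pmx2 lets pmx1 or pmx3 be rebooted without the cluster losing quorum.

```python
def quorum_threshold(expected_votes: int) -> int:
    """Votes needed for a strict majority: floor(n / 2) + 1 (one vote per node assumed)."""
    return expected_votes // 2 + 1


def is_quorate(online_votes: int, expected_votes: int) -> bool:
    """True when the online nodes hold at least the majority threshold."""
    return online_votes >= quorum_threshold(expected_votes)


# Three nodes (pmx1, pmx2, pmx3), one vote each.
print(quorum_threshold(3))  # 2
print(is_quorate(2, 3))     # True: one node can reboot for its upgrade
print(is_quorate(1, 3))     # False
# Without a third node, a two-node cluster loses quorum when either node reboots.
print(is_quorate(1, 2))     # False
```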

The upgrade sequence was:

- I upgraded pmx2 (the quorum-only node; it doesn't run any VMs) and rebooted it.
- I migrated all VMs from pmx1 -> pmx3, upgraded pmx1 and rebooted it.
- I migrated all VMs from pmx3 -> pmx1 without any issue, then upgraded pmx3 and rebooted it.
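The three steps above amount to a rolling upgrade in which every node is emptied before it reboots. The sketch below replays that sequence as a small simulation and refuses to "reboot" a node that still hosts guests. The guest IDs 100 and 101 and their starting placement are hypothetical, chosen only for illustration; nothing here talks to a real cluster.

```python
# Hypothetical starting placement of guests (IDs are illustrative only).
placement = {"pmx1": {100, 101}, "pmx2": set(), "pmx3": set()}
upgraded = []


def migrate_all(src: str, dst: str) -> None:
    """Move every guest from src to dst."""
    placement[dst] |= placement[src]
    placement[src] = set()


def upgrade_and_reboot(node: str) -> None:
    """Record the upgrade, but only if the node no longer hosts any guest."""
    if placement[node]:
        raise RuntimeError(f"{node} still hosts {sorted(placement[node])}")
    upgraded.append(node)


# The sequence described in the post.
upgrade_and_reboot("pmx2")
migrate_all("pmx1", "pmx3")
upgrade_and_reboot("pmx1")
migrate_all("pmx3", "pmx1")
upgrade_and_reboot("pmx3")

print(upgraded)                   # ['pmx2', 'pmx1', 'pmx3']
print(sorted(placement["pmx1"]))  # [100, 101]
```

Calling `upgrade_and_reboot("pmx1")` before the first `migrate_all` would raise, which is the situation the evacuate-first order avoids.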

This is what the cluster looks like now. I use a Synology NAS as network storage with NFS shared folders:

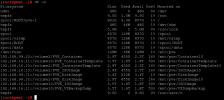

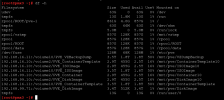

This is the cluster storage:


Now, when I try to migrate some VMs/CTs from pmx1 -> pmx3, this error occurs every time:

```
2021-11-20 01:32:38 starting migration of CT 206 to node 'pmx1' (192.168.16.61)
2021-11-20 01:32:38 ERROR: no such logical volume pve/data at /usr/share/perl5/PVE/Storage/LvmThinPlugin.pm line 219.
2021-11-20 01:32:38 aborting phase 1 - cleanup resources
2021-11-20 01:32:38 start final cleanup
2021-11-20 01:32:38 ERROR: migration aborted (duration 00:00:00): no such logical volume pve/data at /usr/share/perl5/PVE/Storage/LvmThinPlugin.pm line 219.
TASK ERROR: migration aborted
```

The same happens if I try to migrate a CT from pmx1 -> pmx2:

```
2021-11-20 01:38:13 shutdown CT 201
2021-11-20 01:38:16 starting migration of CT 201 to node 'pmx2' (192.168.16.62)
2021-11-20 01:38:16 ERROR: no such logical volume pve/data at /usr/share/perl5/PVE/Storage/LvmThinPlugin.pm line 219.
2021-11-20 01:38:16 aborting phase 1 - cleanup resources
2021-11-20 01:38:16 start final cleanup
2021-11-20 01:38:16 start container on source node
2021-11-20 01:38:18 ERROR: migration aborted (duration 00:00:05): no such logical volume pve/data at /usr/share/perl5/PVE/Storage/LvmThinPlugin.pm line 219.
TASK ERROR: migration aborted
```

If I try to migrate an offline CT from pmx3 -> pmx1, the error persists:

```
2021-11-20 01:44:51 starting migration of CT 206 to node 'pmx1' (192.168.16.61)
2021-11-20 01:44:51 ERROR: no such logical volume pve/data at /usr/share/perl5/PVE/Storage/LvmThinPlugin.pm line 219.
2021-11-20 01:44:51 aborting phase 1 - cleanup resources
2021-11-20 01:44:51 start final cleanup
2021-11-20 01:44:51 ERROR: migration aborted (duration 00:00:00): no such logical volume pve/data at /usr/share/perl5/PVE/Storage/LvmThinPlugin.pm line 219.
TASK ERROR: migration aborted
```

This is what df shows on pmx1 and pmx3 (IP 192.168.16.11 is another NAS that was used some weeks ago; the new and final NAS is 192.168.16.10):

Right now (11 am), staff are working on the VMs, so I can't restart pmx1.

Thank you for your help.

Regards