Hey

@LnxBil

Thanks for your answer. But no, I'll explain why my question isn't stupid.

The main purpose of VMs is to be used in mainframes or in data centers. But I'm talking from the home user perspective. You may think the home user is not important because individually they have very few resources, but collectively home users are far more numerous. And I believe there are potentially more resources available from home users than from data-center maintainers. I mean money (subscriptions) and development contributions for the Proxmox project. But only if Proxmox meets the demands of home users and not just those of data centers. And what are the home user demands?

In the past, I used to set up my machines to dual-boot between Windows and Linux, only because I wanted to get some Linux experience. That would have been a big plus for me professionally. But I never ended up getting that experience, because using Linux was too impractical. First, it took some time to reboot (back then, machines weren't so fast). Then, once I was finally in Linux, I found myself bored. All of my stuff was stored and configured on the Windows side, so in Linux I didn't have much to do. Also, some of my hardware was unavailable in Linux (mostly because I didn't have the time or the will to figure out how to make it work).

Running Linux in a virtual machine inside Windows was the next thing I tried. But the virtualization software still didn't give Linux full access to my files (the file system), and it was really slow for serious work.

But now there is Proxmox, a game changer. Compared to other VM solutions that run on top of an existing OS, Proxmox is certainly unbeatable in terms of performance. And compared to the dual-boot, bare-metal solution, there is no doubt that Proxmox makes the multi-OS experience much easier and more flexible. But the question is: how much performance will that cost the home user's primary OS, the one that is used 95% of the time?

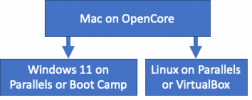

I chose to ask about Windows because I thought that would bring more answers. But my actual primary system is Mac, not Windows. And that brings me to another home user demand. Most people can't afford Mac hardware, which is 5 times more expensive than PC hardware. As you know, there are ways to work around that (OpenCore/Clover) and run Mac on bare-metal PC hardware. However, 99.9% of home users won't be able to do that, either because their PC hardware is not suitable or because they don't have the necessary knowledge to set it up. And here comes Proxmox again, as a facilitator. And this is where a new trend in VM usage is beginning.

PS: I don't think Apple is angry about that. They are earning more money, because more users are getting to know their system and their brand. Bill Gates also knows that Windows wouldn't be the most used OS if it weren't for the unlicensed copies. Not everyone could afford the license. Some just didn't agree to pay for it. But 99% of the public ended up using it, which is what matters most to Microsoft.

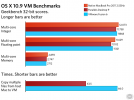

Now back to the topic of performance. I'm a newbie here. If I understand correctly, those KVM benchmarks were run with the VM using a virtualized kvm64 CPU instead of the host CPU, right? So none of the benchmarks I found are a good reflection of Proxmox's best possible performance, right? That could explain why some users talk about <5% performance loss with Windows or Mac on Proxmox (I thought that couldn't be true, as it seemed to contradict the KVM benchmarks).

PS: I'll study what you wrote to better understand things like "cannot migrate your VM", and whether there is any advantage for the home user in using a snapshot instead of Mac's Time Machine, for instance.