Greetings. I've been setting up my OVH dedicated server with failover IPs (really just extra IPs). I tried various recommendations from forums and from OVH, but something was usually missing. Maybe this should go in the networking forum.

The following is a summary of what I did to get Proxmox working with IPv4 and IPv6 on the host and the VMs on an OVH dedicated server.

There is no NAT involved. Just direct public Internet IP addresses on each VM and the host.

It's not a complete tutorial, but it should fill in the missing pieces to get this working specifically at OVH on a dedicated server (VPS, other companies, and other platforms are a little different). This is mostly a quick brain dump while it's fresh in my mind.

Some initial notes and preparation:

- OVH dedicated server IPs may use gateways outside their subnets. On some Linux distributions this makes things hard: it won't just work, and it doesn't follow any easily found documentation.

- I added a block of IPv4 failover IP addresses so I could set up a virtual MAC on each VM. I read from OVH that a public IPv6 address will not work without a virtual MAC, and the only place to add a virtual MAC is on an extra IP. So, yeah, time to buy some IPs.

- I initially tried setting up NAT in various scenarios, and it was a miserable experience trying to get it all to work. I decided to simplify: assign a public IP to each VM and later use an internal virtual network for communication between VMs. I'll set a default firewall on each VM to block everything from the public Internet except what is needed, if anything.

- I installed Proxmox from the ISO using IPMI. OVH has a template that may work as well, but I had messed up my partitioning so badly during reinstalling and testing that the OVH installer no longer worked for me.

- I'm using Ubuntu 20.04 on the guest VMs with netplan network configuration.

Step 1:

Get the host to work on IPv4. This part is pretty easy since DHCP seems to pick it up automatically.

Step 2:

Get the host to work on IPv6. Some of this I configured in the GUI, but here's the working file:

/etc/network/interfaces

That should work. Test ping with ip4 and ip6.

Step 3:

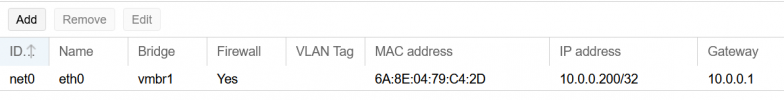

Get VM to work.

Using Ubunu 20.04 in my case with netplan based networking.

Make sure IP4 and IP6 are assigned in OVH control panel. Assign the virtual mac from OVH to your VM.

Probably will need to use the host console to the vm the first time to get the networking to function.

Edit this file as needed to make it work for your setup.

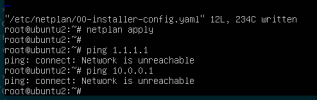

/etc/netplan/00-installer-config.yaml

Try the netplan and see if it all works. Your configurations may vary but the core pieces are there as far as the hard to figure out subnetting for OVH dedicated.

The following is just a summary of what I did to get proxmox to work with IP4 and IP6 on the host and vms on a Dedicated OVH server.

There is no NAT involved. Just direct public Internet IP addresses on each VM and the host.

It's not a complete tutorial, but should fill in the missing pieces to get this to work specifially at OVH on a dedicated server (VPS, other companies, and other platforms are a little different). This is mostly just a quick brain dump while it's fresh in my mind.

Some initial notes and preparation:

- OVH dedicated server networking ips may use gateways outside the subnets. In some Linux distributions this makes it hard as it won't just work or follow any easily found documentation.

- I added a block of IP4 failover ip addresses so I could setup the virtual mac on each vm. I read from OVH that a public IPV6 will not work without a virual mac. And the only place to add a virtual mac is with an extra ip. So, yeah, time to buy some ips.

- I initially tried setting up NAT with various scenarios and it was just a miserable experience trying to get it all to work. I decided to just simplify and setup an IP to each VM and later just use an internal virtual network to communicate between vms. I'll just set a default firewall on each VM to block everything except what is needed if anything from the public Internet IP.

- I installed proxmox from the ISO using IPMI. OVH has a template that may work as well. But, I had so messed up my partitioning during reinstalling and testing that the OVH installer didn't work anymore for me.

- I'm using Ubuntu 20.04 on the guest vms with netplan network configuration.

Step 1:

Get the host to work on ip4. This part is pretty easy since dhcp seems to pick it up automatically.

Step 2:

Get the host to work on ip6. Some of this I configured in the gui. But here's the working file:

/etc/network/interfaces

Code:

    # Comments are kept on their own lines; classic ifupdown does not
    # support end-of-line comments.
    auto lo
    iface lo inet loopback
    iface lo inet6 loopback

    iface eno1 inet manual
    iface eno1 inet6 manual

    iface enp0s20f0u8u3c2 inet manual

    iface eno2 inet manual

    auto vmbr0
    iface vmbr0 inet static
        # your public ipv4 IP
        address 1.2.3.4/24
        # ovh gateway ends in .254
        gateway 1.2.3.254
        bridge-ports eno1
        bridge-stp off
        bridge-fd 0

    iface vmbr0 inet6 static
        # your public ipv6 IP and subnet
        address 1111:2222:3333:6666::1/64
        # ovh gateway ends outside the subnet with ff:ff:ff:ff:ff partially replacing the ip
        gateway 1111:2222:3333:66FF:FF:FF:FF:FF
        bridge-ports eno1
        bridge-stp off
        bridge-fd 0

    auto vmbr1
    iface vmbr1 inet static
        # optional just an internal network not routed
        address 10.1.1.1/24
        bridge-ports none
        bridge-stp off
        bridge-fd 0

That should work. Test ping with IPv4 and IPv6.
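The two gateway rules mentioned in the comments (IPv4 gateway ends in .254, IPv6 gateway keeps the start of the prefix and ends in ff:ff:ff:ff:ff) can be sketched in Python with the standard `ipaddress` module. This is only a rough sketch: the function names are hypothetical, and the rules are assumptions taken from the placeholder addresses in this post, so check the gateway values against what OVH shows for your own server.

```python
import ipaddress

# Hypothetical helpers. They only encode the pattern described in the
# config comments of this post; they are not an official OVH API.

def ovh_ipv4_gateway(host_cidr: str) -> ipaddress.IPv4Address:
    """Return the .254 address of the host's /24 (1.2.3.4/24 -> 1.2.3.254)."""
    iface = ipaddress.ip_interface(host_cidr)
    net24 = ipaddress.ip_network(f"{iface.ip}/24", strict=False)
    return net24.network_address + 254

def ovh_ipv6_gateway(host_cidr: str) -> ipaddress.IPv6Address:
    """Keep the first 56 bits of the address and fill the rest with ff:ff:ff:ff:ff."""
    iface = ipaddress.ip_interface(host_cidr)
    net56 = ipaddress.ip_network(f"{iface.ip}/56", strict=False)
    # The remaining 72 bits become ff, 00ff, 00ff, 00ff, 00ff.
    return net56.network_address + 0xFF00FF00FF00FF00FF

if __name__ == "__main__":
    print(ovh_ipv4_gateway("1.2.3.4/24"))                 # 1.2.3.254
    print(ovh_ipv6_gateway("1111:2222:3333:6666::1/64"))  # 1111:2222:3333:66ff:ff:ff:ff:ff
```

Both results match the gateway lines in the interfaces file above, which is a quick way to double check a hand-typed IPv6 gateway.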

Step 3:

Get VM to work.

Using Ubuntu 20.04 in my case, with netplan-based networking.

Make sure the IPv4 and IPv6 addresses are assigned in the OVH control panel. Assign the virtual MAC from OVH to your VM.

You will probably need to use the host console to the VM the first time to get networking functioning.

Edit this file as needed to make it work for your setup.

/etc/netplan/00-installer-config.yaml

Code:

    # This is the network config written by 'subiquity'
    network:
      ethernets:
        ens18:
          addresses:
            - 3.4.5.6/32 # an OVH public failover ip assigned to your server with vmac setup
            - 1111:2222:3333:6666::2/64 # an OVH ipv6 from your server allocation
          nameservers:
            addresses: [ "127.0.0.1" ]
          optional: true
          routes:
            - to: 0.0.0.0/0
              via: 1.2.3.254 # OVH ip4 gateway BASED ON GATEWAY OF THE MAIN SERVER IP
              on-link: true
            - to: "::/0"
              via: "1111:2222:3333:66ff:ff:ff:ff:ff" # OVH ipv6 gateway outside the IP6 subnet
              on-link: true
        ens19:
          addresses:
            - 10.1.1.2/24 # optional internal network
      version: 2

Try the netplan config (for example with `netplan try`) and see if it all works. Your configuration may vary, but the core pieces are there, especially the hard-to-figure-out subnetting for OVH dedicated servers.
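The `on-link: true` entries matter because neither gateway falls inside the subnets configured on the VM. Here is a minimal Python sketch that makes this visible, using the standard `ipaddress` module and the placeholder addresses from this post. The helper name is made up for illustration.

```python
import ipaddress

def gateway_is_on_subnet(address_cidr: str, gateway: str) -> bool:
    """Return True if the gateway lies inside the subnet configured on the address."""
    network = ipaddress.ip_interface(address_cidr).network
    return ipaddress.ip_address(gateway) in network

# Placeholder addresses copied from the configs in this post.
checks = [
    ("host IPv4", "1.2.3.4/24", "1.2.3.254"),
    ("VM IPv4", "3.4.5.6/32", "1.2.3.254"),
    ("VM IPv6", "1111:2222:3333:6666::2/64", "1111:2222:3333:66ff:ff:ff:ff:ff"),
]

if __name__ == "__main__":
    for label, address, gateway in checks:
        inside = gateway_is_on_subnet(address, gateway)
        note = "inside subnet" if inside else "outside subnet, needs an on-link route"
        print(f"{label}: gateway {gateway} is {note}")
```

With these placeholder values only the host IPv4 gateway sits inside its own subnet. The VM's /32 failover IP and the /64 IPv6 address both have gateways outside their subnets, which is why both routes in the netplan file carry `on-link: true`.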