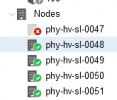

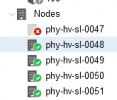

I've got a 5-node setup with Ceph running on SSDs. VMs and containers have been set up for HA.

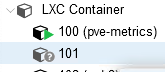

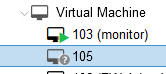

We just had a node crash due to a hardware failure, but the machines that were on that node are not failing over. In the GUI they have a grey question mark next to them, and clicking on them shows "No route to host (595)".

I don't seem to be able to bring these machines up on a different node; they appear completely lost. This does not seem like intended behavior. Any help would be great.

Code:

    :~# ceph -w
      cluster:
        id:     972b4d17-8d71-4b77-8ed9-8e44e2c84f16
        health: HEALTH_WARN
                1/5 mons down, quorum phy-hv-sl-0049,phy-hv-sl-0051,phy-hv-sl-0048,phy-hv-sl-0050

      services:
        mon: 5 daemons, quorum phy-hv-sl-0049,phy-hv-sl-0051,phy-hv-sl-0048,phy-hv-sl-0050 (age 89m), out of quorum: phy-hv-sl-0047
        mgr: phy-hv-sl-0051(active, since 88m), standbys: phy-hv-sl-0048, phy-hv-sl-0050, phy-hv-sl-0049
        mds: 1/1 daemons up, 3 standby
        osd: 25 osds: 20 up (since 89m), 20 in (since 79m)

      data:
        volumes: 1/1 healthy
        pools:   4 pools, 1089 pgs
        objects: 73.61k objects, 158 GiB
        usage:   507 GiB used, 8.6 TiB / 9.1 TiB avail
        pgs:     1089 active+clean

      io:
        client:   1.3 KiB/s rd, 993 KiB/s wr, 0 op/s rd, 45 op/s wr

Code:

:~# ha-manager status

quorum OK

master phy-hv-sl-0050 (active, Fri Sep 24 21:23:04 2021)

lrm phy-hv-sl-0047 (old timestamp - dead?, Fri Sep 24 19:49:03 2021)

lrm phy-hv-sl-0048 (active, Fri Sep 24 21:23:04 2021)

lrm phy-hv-sl-0049 (active, Fri Sep 24 21:23:06 2021)

lrm phy-hv-sl-0050 (active, Fri Sep 24 21:23:06 2021)

lrm phy-hv-sl-0051 (active, Fri Sep 24 21:23:01 2021)

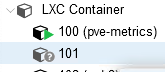

service ct:100 (phy-hv-sl-0051, started)

service ct:102 (phy-hv-sl-0048, started)

service ct:104 (phy-hv-sl-0050, started)

service ct:107 (phy-hv-sl-0051, started)

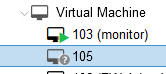

service vm:103 (phy-hv-sl-0048, started)

service vm:106 (phy-hv-sl-0049, started)
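
To make the `ha-manager status` output above easier to cross-check, here is a minimal Python sketch that parses the pasted text and lists which HA services sit on a node whose LRM is not reported as `active`. The sample text is copied from the output above. The regular expressions and the helper name `parse_ha_status` are my own assumptions about the line format, not part of any Proxmox tooling.

```python
import re

# Sample copied from the `ha-manager status` output in this post.
STATUS = """\
quorum OK
master phy-hv-sl-0050 (active, Fri Sep 24 21:23:04 2021)
lrm phy-hv-sl-0047 (old timestamp - dead?, Fri Sep 24 19:49:03 2021)
lrm phy-hv-sl-0048 (active, Fri Sep 24 21:23:04 2021)
lrm phy-hv-sl-0049 (active, Fri Sep 24 21:23:06 2021)
lrm phy-hv-sl-0050 (active, Fri Sep 24 21:23:06 2021)
lrm phy-hv-sl-0051 (active, Fri Sep 24 21:23:01 2021)
service ct:100 (phy-hv-sl-0051, started)
service ct:102 (phy-hv-sl-0048, started)
service ct:104 (phy-hv-sl-0050, started)
service ct:107 (phy-hv-sl-0051, started)
service vm:103 (phy-hv-sl-0048, started)
service vm:106 (phy-hv-sl-0049, started)
"""

# Assumed line shapes, inferred only from the sample above:
#   lrm <node> (<state>, <timestamp>)
#   service <sid> (<node>, <state>)
LRM_RE = re.compile(r"^lrm (\S+) \((.*), [^,]+\)$")
SERVICE_RE = re.compile(r"^service (\S+) \((\S+), (\S+)\)$")


def parse_ha_status(text):
    """Return ({node: lrm_state}, {sid: (node, service_state)})."""
    lrms, services = {}, {}
    for line in text.splitlines():
        line = line.strip()
        m = LRM_RE.match(line)
        if m:
            lrms[m.group(1)] = m.group(2)
            continue
        m = SERVICE_RE.match(line)
        if m:
            services[m.group(1)] = (m.group(2), m.group(3))
    return lrms, services


lrms, services = parse_ha_status(STATUS)

# Nodes whose LRM state is anything other than "active".
dead_nodes = sorted(node for node, state in lrms.items() if state != "active")

# HA-managed services currently assigned to one of those nodes.
stranded = sorted(sid for sid, (node, _) in services.items() if node in dead_nodes)

print("non-active LRM nodes:", dead_nodes)
print("HA services on those nodes:", stranded)
```

Run against the output above, this reports `phy-hv-sl-0047` as the only non-active LRM and finds no HA services assigned to it. In other words, the six services that `ha-manager status` lists are all on the surviving nodes.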