Hello

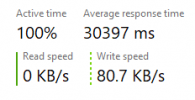

I am experiencing a weird issue: whenever a backup job starts for a guest VM, there is a chance that the guest reports 100% disk activity and refuses to do any more writes, which causes application crashes and other issues. I can't find a rhyme or reason for it. Backups worked fine for two weeks until they broke one day, with no configuration changes or package updates in between. The host disk is an NVMe SSD (Sabrent Rocket 4.0 2TB), so I highly doubt its read/write speed is being maxed out at the rate backups can transfer (1 Gbit WAN to a backup target built on mechanical HDDs).
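
A rough sanity check of that bandwidth argument, as a small Python sketch. The NVMe read figure below is an assumed placeholder, not a measured or datasheet value, so substitute your own number. Note that this only compares raw throughput; it doesn't by itself rule out guest writes being held up by the running backup.

```python
def link_mb_per_s(gigabits_per_s):
    """Convert a link rate in Gbit/s to MB/s (decimal units, 8 bits per byte)."""
    return gigabits_per_s * 1000 / 8


def share_of_disk(link_mb_s, disk_mb_s):
    """Fraction of the disk's throughput that a transfer capped at link_mb_s can use."""
    return link_mb_s / disk_mb_s


# Assumption: the NVMe sustains roughly 3000 MB/s sequential reads.
# This is a placeholder; replace it with a value you have measured.
ASSUMED_NVME_READ_MB_S = 3000.0

if __name__ == "__main__":
    wan = link_mb_per_s(1.0)  # 1 Gbit/s WAN -> 125 MB/s ceiling
    print(f"1 Gbit/s link ceiling: {wan:.0f} MB/s")
    print(f"share of assumed NVMe read throughput: "
          f"{share_of_disk(wan, ASSUMED_NVME_READ_MB_S):.1%}")
```

With the placeholder value, the link can use only about 4% of the disk's sequential read rate, and the HDD-backed target may be slower still.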

The backup job covers 4 VMs (one FreeBSD pfSense, one Windows 10, two Ubuntu Server), and they all back up to a Proxmox Backup Server target. All of the VMs are susceptible to the random freezing, and they all started having the issue at the same time. The only real fix so far is to manually stop the backups with `vzdump --stop` and re-run them, hoping the guest disk activity does not max out.
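
The stop-and-retry workaround, sketched in Python as a dry run that only prints the commands. The VM IDs and the storage ID `pbs-backup` are hypothetical placeholders, and a manual `vzdump` call will not necessarily reproduce every option of the scheduled job (mode, compression, notifications, and so on).

```python
import shlex
import subprocess
from typing import List

# Hypothetical values: replace with the real VM IDs and PBS storage ID.
VMIDS = [100, 101, 102, 103]
PBS_STORAGE = "pbs-backup"


def stop_cmd() -> List[str]:
    # Same command used above to stop running backup jobs on this host.
    return ["vzdump", "--stop"]


def rerun_cmd(vmids, storage) -> List[str]:
    # Back up the listed guests again, sending the backup to the given storage ID.
    return ["vzdump", *map(str, vmids), "--storage", storage]


def run(cmd: List[str], dry_run: bool = True) -> None:
    # Print the command; only execute it when dry_run is explicitly False.
    print("$", shlex.join(cmd))
    if not dry_run:
        subprocess.run(cmd, check=True)


if __name__ == "__main__":
    run(stop_cmd())
    run(rerun_cmd(VMIDS, PBS_STORAGE))
```

Keeping `dry_run=True` as the default means nothing touches the host until the printed commands have been checked by hand.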

Things I have tried:

- Enabling/disabling guest agent

- Restarting the guest VMs

- Restarting the host

- Searching logs for anything out of the ordinary (there isn't anything, as far as I can tell)

Package versions:

proxmox-ve: 6.4-1 (running kernel: 5.4.114-1-pve)

pve-manager: 6.4-6 (running version: 6.4-6/be2fa32c)

pve-kernel-5.4: 6.4-2

pve-kernel-helper: 6.4-2

pve-kernel-5.4.114-1-pve: 5.4.114-1

pve-kernel-5.4.106-1-pve: 5.4.106-1

ceph-fuse: 12.2.11+dfsg1-2.1+b1

corosync: 3.1.2-pve1

criu: 3.11-3

glusterfs-client: 5.5-3

ifupdown: 0.8.35+pve1

ksm-control-daemon: 1.3-1

libjs-extjs: 6.0.1-10

libknet1: 1.20-pve1

libproxmox-acme-perl: 1.1.0

libproxmox-backup-qemu0: 1.0.3-1

libpve-access-control: 6.4-1

libpve-apiclient-perl: 3.1-3

libpve-common-perl: 6.4-3

libpve-guest-common-perl: 3.1-5

libpve-http-server-perl: 3.2-2

libpve-storage-perl: 6.4-1

libqb0: 1.0.5-1

libspice-server1: 0.14.2-4~pve6+1

lvm2: 2.03.02-pve4

lxc-pve: 4.0.6-2

lxcfs: 4.0.6-pve1

novnc-pve: 1.1.0-1

openvswitch-switch: 2.12.3-1

proxmox-backup-client: 1.1.6-2

proxmox-mini-journalreader: 1.1-1

proxmox-widget-toolkit: 2.5-5

pve-cluster: 6.4-1

pve-container: 3.3-5

pve-docs: 6.4-2

pve-edk2-firmware: 2.20200531-1

pve-firewall: 4.1-3

pve-firmware: 3.2-3

pve-ha-manager: 3.1-1

pve-i18n: 2.3-1

pve-qemu-kvm: 5.2.0-6

pve-xtermjs: 4.7.0-3

qemu-server: 6.4-2

smartmontools: 7.2-pve2

spiceterm: 3.1-1

vncterm: 1.6-2

zfsutils-linux: 2.0.4-pve1

Any ideas? Thanks