Hello,

I bought a Netis ST3310GF switch and successfully configured LACP between it, a MikroTik hEX and my first Proxmox server. It works perfectly. Later I bought another dual-port NIC and found that LACP does not work for the second server.

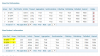

Server 1 NIC:

Intel Corporation 82575EB Gigabit Network Connection

Server 2 NIC:

Intel Corporation 82576 Gigabit Network Connection

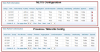

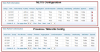

After some time I turned LACP off on server 1, and LACP then started working normally on server 2. I noticed that the Link Aggregation Group field in the Partner Info shows the same value, 9, for both servers. If I enable LACP on both servers at the same time, one of them shows Aggregation: False.
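The value 9 may simply be the default port key that the Linux bonding driver (used by Proxmox) advertises for any 1 Gbps full-duplex port. This assumes the switch's "Link Aggregation Group" field displays the partner's LACP key, which I have not confirmed. According to the kernel bonding documentation, the key is built from a duplex bit (bit 0), a speed field (bits 1-5) and the `ad_user_port_key` option (bits 6-15, range 0-1023, default 0). The speed codes below are taken from the kernel's `ad_link_speed_type` enum as I understand it, and `port_key` is a hypothetical helper, so treat this as a sketch rather than the driver's actual code:

```python
# Sketch of how the Linux bonding driver (802.3ad mode) composes its port key.
# Assumed layout, per the kernel bonding documentation:
#   bit 0      duplex (1 = full duplex)
#   bits 1-5   speed code
#   bits 6-15  ad_user_port_key (0-1023, default 0)
# Assumption: only the common speed codes are listed here.
SPEED_CODES = {
    1: 1,      # 1 Mbps
    10: 2,     # 10 Mbps
    100: 3,    # 100 Mbps
    1000: 4,   # 1 Gbps
}


def port_key(speed_mbps: int, full_duplex: bool = True, user_key: int = 0) -> int:
    """Return the 16-bit LACP port key for the given link parameters."""
    if not 0 <= user_key <= 1023:
        raise ValueError("ad_user_port_key must be in the range 0..1023")
    duplex_bit = 1 if full_duplex else 0
    return (user_key << 6) | (SPEED_CODES[speed_mbps] << 1) | duplex_bit


if __name__ == "__main__":
    print(port_key(1000))              # default key for a 1 Gbps full-duplex port
    print(port_key(1000, user_key=1))  # same port with ad_user_port_key=1
```

With the defaults, both servers would advertise key 9, which matches what the switch shows. Setting a different `ad_user_port_key` on one server (the documentation says it is exposed through sysfs) would change only the upper bits, for example giving 73 for `user_key=1`. Whether Proxmox's network configuration can set this option directly is an open question.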

I have tried to find a way to force a different value for this group in Proxmox, but without success.

Is this possible?

P.S. Server 2 is currently in balance-xor mode, which is why there is no information in the Partner view.
