We are running Hammer on a dedicated 3-node cluster on top of Proxmox, using 10Gb for the cluster and public networks (separate cards). The drives are all the same: 16 x 10K SAS drives spread evenly among the 3 nodes.

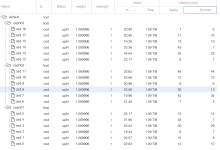

We are not having any issues, but I see the latency is almost never in the single digits; it is almost always >10 ms and goes up to 85 ms. Is this normal? I have no way of comparing with other clusters.

I have seen people complaining about high latency, but the pictures they posted showed delays way above 100 ms.

Thanks for your help.
