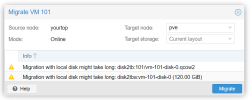

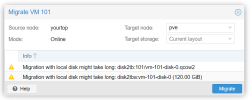

I am trying to migrate virtual servers from one Proxmox server to another Proxmox server within a cluster.

However, it doesn't work, and I have no way to configure anything during the migration.

I have 3 virtual servers, but none of them can be migrated; I always get some kind of disk problem.

Is there any better and easier way to migrate my virtual servers?
