Hello everyone,

Since yesterday morning, when I upgraded several systems from 8.1.3 to 8.1.4 from the enterprise repository, I have had serious problems creating snapshots.

Affected systems:

- 3 clusters of 3 nodes each, with Ceph storage
- 5 standalone servers with ZFS storage

The behavior is the same on all of them.

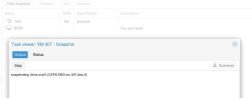

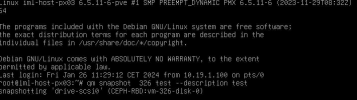

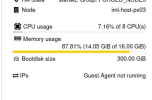

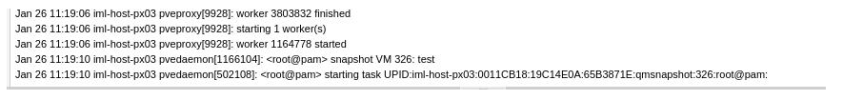

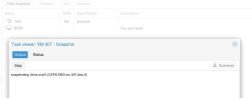

When I take a snapshot (I also tried without including the RAM state) and the VM is large enough, the snapshot task window stays open and the procedure never finishes. Across several attempts I had no problems with small VMs, but with VMs of roughly 300 GB and up it happens every time.

The VM loses its connection to the guest agent (the VM's IP address is no longer shown in the Proxmox panel). Inside the guest the agent appears to be running, but the only way to restore full functionality is to restart the VM. I have verified this with Debian, Ubuntu, and Windows Server 2019 VMs, among others.

I have attached a few screenshots above.