Latest activity

nihilanthlnxc replied to the thread automated install via PXE boot.

Greetings. Could you provide the URL of that project on your GitHub? I'm stuck on this. I've tried several variations and nothing works. The installer isn't downloading the answer.toml file. That variation you suggested sounds interesting, and...

UdoB replied to the thread Pardon my less-than-intelligent question, but is there a way to install Proxmox on a Ceph cluster?.

The usual boot process uses the BIOS firmware to read the very first blocks of the operating system. This is before even the "initrd"/"initramfs" is available = "pre-boot". While boot devices may be local hardware and network devices with some...

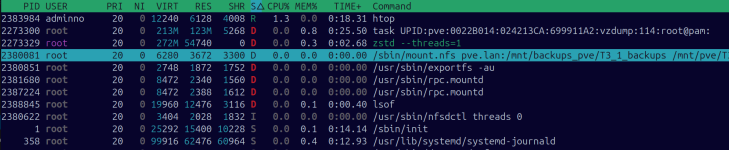

Julian33 replied to the thread LXCs with NFS mounts failing to start after reboot or restore.

Unfortunately this is not fixed. My infra is a home lab with proxmox-ve: 9.1.0 (running kernel: 6.17.9-1-pve), pve-manager: 9.1.5 (running version: 9.1.5/80cf92a64bef6889), 2 nodes. Node has an NFS exported storage and HA VM, among other things. I...

UdoB replied to the thread [PVE 9.1.0/PVE-Manager 9.1.5] Customized ZFS Min/Max Size--Unexpected Behavior in RAM Summary. Did I Do Something Wrong?.

I would expect this behavior. And yes, the ARC only actually gets used when ZFS recognizes a relevant read pattern by watching the MRU/MFU counters. (--> "warm up". Newer systems may reload the ARC from disk on boot, though...)

UdoB reacted to bitranox's post in the thread UPS NUTS Client to shutdown other node in cluster (not HA). with Like.

Since your nodes are in a Proxmox cluster, SSH keys are already exchanged between them. That makes this pretty painless. SSH shutdown from node 1's NUT script: Node 1 already has a working NUT client, so you just add a script that SSHs into node...

gurubert reacted to VictorSTS's post in the thread Ceph squid OSD crash related to RocksDB ceph_assert(cut_off == p->length) with Like.

Thanks for the heads up. Pretty sure most were created with Ceph Reef except a few that got recreated recently with Squid 19.2.3. I'm aware of that bug, but given that I don't use EC pools (Ceph bugreport mentions it seems to only happen on OSD...

gurubert reacted to bitranox's post in the thread Ceph squid OSD crash related to RocksDB ceph_assert(cut_off == p->length) with Like.

As others pointed out already, this hits OSDs that are fairly full (~75%+) with heavy disk fragmentation. v19.2.3 already ships a race condition fix (https://ceph.io/en/news/blog/2025/v19-2-3-squid-released/) that prevents new corruption, but it...

alpha754293 posted the thread Pardon my less-than-intelligent question, but is there a way to install Proxmox on a Ceph cluster? in Proxmox VE: Installation and configuration.

Pardon my less-than-intelligent question, but is there a way to install Proxmox on a Ceph cluster such that Proxmox boots off of a Ceph cluster? Or is this not possible?

sacarias replied to the thread Reporting enhancements in the bug tracker.

@t.lamprecht PVE 9.1 still doesn't have the proposed changes...

fstrankowski replied to the thread Another io-error with yellow triangle.

Maybe useful for people coming back to this thread one day: Take a look at the kernel.org thread which describes this bug including examples from Proxmox and QEMU. There is also an excellent writeup here.

TritonB7 reacted to nvanaert's post in the thread BUG: Installing Microsoft Windows Server 2025 fails (related to NUMA / CPU- & Memory Hotplug) with Like.

Update: I've got past the installer. It's likely a bug, related to NUMA or CPU / Memory hotplug as those are the features that I've turned off. Update 2: Once the installation is done I can turn on NUMA and CPU / Memory Hotplug and I do not seem...

Kingneutron reacted to l.leahu-vladucu's post in the thread Connect a USB drive plugged into PC to a VM? with Like.

Hello craig.stephens! Yes, you can do this using USB Passthrough. Click on the VM and go to Hardware -> Add -> USB Device.

zenoprax replied to the thread HA does not trigger when node loses LAN but remains in quorum.

> it is very hard to determine a good set of robust rules enabling this to be automatic ...

I feel like they are missing the point of your proposal, which was essentially "add the ability to add some additional conditions for failure". Deploying...

-

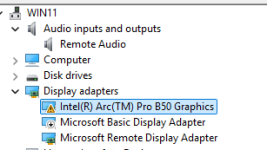

Bbellaireroad replied to the thread Arc B50 Pro Crashes VM.Got a little closer to the golden ring today. Connected the ARC Pro B50 with occulink both Win11 and Mint started without crashing Opened Win 11 and loaded the XE drivers, but they would not stay enabled to a "43 error" It is running on remote...

Kingneutron reacted to bbgeek17's post in the thread Is there a command to get a list of all nodes in a cluster with their subscription key? with Like.

This would be a one liner :cool: `pvesh get /cluster/resources --type node --output-format json | jq -r .[].node | xargs -I {} sh -c 'echo {}: $(pvesh get nodes/{}/subscription --output-format json | jq -r .message)'` pve-1: There is no subscription...

zenoprax reacted to matteo.granuzzo's post in the thread HA does not trigger when node loses LAN but remains in quorum with Like.

Hi All, I have opened a Bugzilla feature request for that! https://bugzilla.proxmox.com/show_bug.cgi?id=7354 If you are interested, please comment. ;)

Howard A2 Labs posted the thread Just added a node after 9.1.4 > 9.1.5 update and Ceph keys weren't installed in Proxmox VE: Installation and configuration.

Went through the normal process of adding a node to the cluster and installing Ceph, then went to run ceph-volume lvm batch and it errored out for missing config. Figured no big deal, symlinked /etc/ceph/ceph.conf -> /etc/pve/ceph.conf, I've had...

dviske replied to the thread BUG: Installing Microsoft Windows Server 2025 fails (related to NUMA / CPU- & Memory Hotplug).

@nvanaert I'm also having this issue at the moment, both on 9.1.1 and 9.1.5. In my case it's only happening when I'm using an Autounattend.xml file to automatically install it as part of a CI workflow, but you might find this useful in...

tomsie1000 replied to the thread Secure Boot – Microsoft UEFI CA 2023 Certificate Not Included in EFI Disk.

Having done a lot (a lot lot) of reading and testing on this subject now, I believe that there is still an outstanding issue with the qm enroll-efi-keys command. I, like others, ran this command against a Windows 11 machine, and it does indeed...

BigBadBlack reacted to janus57's post in the thread [SOLVED] Prune simulator OK but real prune KO :-( with Like.

Hi, you should tell us more about your setup: How many backups per day (show the schedule)? What do you want to keep? Show the logs of the prune task. Best regards,