Latest activity

-

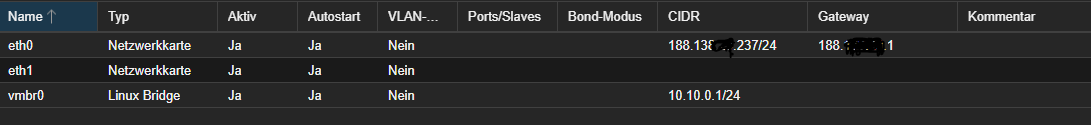

BlaXioN replied to the thread Proxmox and a VM with Ubuntu and Plesk.
Hi, yes, somehow I still haven't really understood the whole thing. How do I then reach the server from outside? I've just set the server up again and am starting from zero. The default configuration is as shown in the picture. I...

-

fiona replied to the thread Same ID allowed in storage.cfg?
Hi, a storage ID must be unique and have only one entry. For node-specific configurations/mappings there are initial patches: https://bugzilla.proxmox.com/show_bug.cgi?id=7200...
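To make the uniqueness rule concrete, here is a minimal Python sketch. It assumes the usual storage.cfg layout, where each section starts with a `<type>: <storage-id>` header at column 0 and its properties follow on indented lines. The helper name `duplicate_storage_ids` and the sample config are hypothetical and not part of any Proxmox tool. The helper only flags IDs that appear in more than one section header.

```python
import re
from collections import Counter

# Section header: "<type>: <storage-id>" at column 0, e.g. "nfs: NAS_NFS".
# Properties belong to the section above them and are indented.
HEADER = re.compile(r"^(\w+):\s+(\S+)\s*$")


def duplicate_storage_ids(cfg_text: str) -> list[str]:
    """Hypothetical helper: return storage IDs used by more than one section."""
    ids = []
    for line in cfg_text.splitlines():
        if line[:1].isspace() or line.startswith("#"):
            continue  # skip indented property lines and comments
        match = HEADER.match(line)
        if match:
            ids.append(match.group(2))
    return sorted(sid for sid, n in Counter(ids).items() if n > 1)


# Hypothetical example config: the second "nfs: NAS_NFS" section reuses the ID.
example = (
    "dir: local\n"
    "\tpath /var/lib/vz\n"
    "\tcontent iso,vztmpl,backup\n"
    "\n"
    "nfs: NAS_NFS\n"
    "\tserver 192.168.13.22\n"
    "\texport /mnt/sphere/Backups/proxmox\n"
    "\n"
    "nfs: NAS_NFS\n"
    "\tserver 192.168.13.23\n"
)
print(duplicate_storage_ids(example))  # ['NAS_NFS']
```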

-

hboetes2 replied to the thread Where does 10.0.0.0/8 come from?
I agree that adding the route of the listening interface to mynetworks is a sane default. But I think there should be an option to disable this default, since it is completely reasonable to want to configure something else, which is currently...

-

fl0moto replied to the thread Backup randomly fails on Synology NAS.
Hi, I'm not entirely sure whether noserverino also helps in your case, but I'd give it a try: https://forum.proxmox.com/threads/smb-cifs-mount-point-stale-file-handle.124105/

-

XN-Matt replied to the thread Watchdog Reboots.
It would be nice to get some Proxmox involvement, as this is clearly an issue, judging by this and other threads about the same problem.

-

XN-Matt replied to the thread Watchdog Reboots.
We are Intel-only, so I don't think the chipset is relevant.

-

Eduardo Taboada replied to the thread Install Proxmox on Dell PowerEdge R6515 with RAID1.
It's better to put it in IT mode if you later want to use ZFS or Ceph for VM storage. You can do that in iDRAC/BIOS.

-

Gilou replied to the thread Where does 10.0.0.0/8 come from?
Yeah, it's not that bad; it is indeed the route that makes it think it has a /8 network. The code is not wrong, but it fails to select the correct (more specific) route, though that may be by design. In src/PMG/Utils.pm it does a proper route check...
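The difference between "a route that contains the address" and "the most specific route" can be sketched with Python's standard `ipaddress` module. This is an illustration of longest-prefix matching under assumed inputs, not the Perl code in src/PMG/Utils.pm. The route list and the `best_route` helper are hypothetical; the address is the one from the /etc/hosts output quoted in this thread.

```python
from __future__ import annotations

import ipaddress


def best_route(addr: str, routes: list[str]) -> ipaddress.IPv4Network | ipaddress.IPv6Network | None:
    """Return the most specific route (longest prefix) containing addr, or None."""
    ip = ipaddress.ip_address(addr)
    candidates = [ipaddress.ip_network(r) for r in routes]
    matches = [net for net in candidates if ip in net]
    return max(matches, key=lambda net: net.prefixlen, default=None)


# Hypothetical routing table; the address comes from the /etc/hosts output in this thread.
routes = ["10.0.0.0/8", "10.10.0.0/16", "10.10.110.0/24"]
addr = "10.10.110.31"

# Stopping at the first route that merely contains the address yields the broad /8 ...
first = next(net for net in map(ipaddress.ip_network, routes) if ipaddress.ip_address(addr) in net)
print(first)                     # 10.0.0.0/8
# ... while longest-prefix matching picks the most specific one.
print(best_route(addr, routes))  # 10.10.110.0/24
```

Taking the maximum `prefixlen` among all matching routes is the same rule a kernel routing table applies. With that rule, the order of the routes in the list does not matter.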

-

fiona replied to the thread Live migration failure: Unknown savevm section or instance 'dbus-vmstate/dbus-vmstate' 0.
Hi, it is a racy bug that is fixed in qemu-server >= 9.1.3 with: https://git.proxmox.com/?p=qemu-server.git;a=commit;h=b82c2578e7a452dd5119ca31b8847f75f22fe842...

-

Baris Manco replied to the thread Install Proxmox on Dell PowerEdge R6515 with RAID1.
Anyway, I couldn't find a way to run Proxmox with ZFS RAID1, so after long hours of struggle I just went with hardware RAID1 instead.

-

ldebolle replied to the thread [SOLVED] PVE: VM backup to NFS share on Truenas: backup succeeds, but comment and log creation fail.
Good morning Gilou, please find the info below.
storage.cfg:
nfs: NAS_NFS
	export /mnt/sphere/Backups/proxmox
	path /mnt/pve/NAS_NFS
	server 192.168.13.22
	content snippets,rootdir,iso,images,vztmpl,backup,import...

-

Baris Manco replied to the thread Install Proxmox on Dell PowerEdge R6515 with RAID1.
H730P mini

-

fiona replied to the thread [SOLVED] No solution found for migration error found stale volume copy.
It seems like a different issue than the original one in this thread. In your case, the storage types are compatible and the disk migration progresses for a while. Does it always fail at the same offset? Could you share the system logs/journal from both...

-

Steven-b replied to the thread Proxmox VE 9.1.1 – Windows Server / SQL Server issues.
Hi, I'm very interested in this because SQL Server is used a lot. If I understand your problem correctly, you have an old VMware server that is faster than your newer server running PVE 9.1? Can you provide specs for both servers: CPU...

-

Cookiefamily posted the thread Adding HA resources to an existing HA affinity rule and outdated manpages in Proxmox VE: Installation and configuration.
Hi, in the past we used ha-manager add $VMID --group $groupname to assign a VM to what was then a "rule" (HA groups). With PVE 9, HA groups have been removed in favor of affinity rules, which do offer more functionality. But I am...

-

Gilou replied to the thread Where does 10.0.0.0/8 come from?
How is your interface configured, anyway? EDIT: I'm guessing properly, i.e. as a /16 or /24. I can reproduce it instantly whatever the size of the configured network: PMG guesses "class A! What else could it be!" when using 10.x subnets. I'd say...
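The "class A" guess described here can be reproduced with the legacy classful rules, which derive the prefix from the first octet alone. Below is a minimal sketch using Python's `ipaddress` module. It assumes a hypothetical interface configured as 10.10.110.31/24, and `classful_network` is a hypothetical helper. It is not PMG's actual logic. It only demonstrates why a 10.x address ends up as 10.0.0.0/8, and why that network still contains the real subnet.

```python
import ipaddress


def classful_network(addr: str) -> ipaddress.IPv4Network:
    """Hypothetical helper: network implied by legacy classful rules (first octet only)."""
    first_octet = int(addr.split(".")[0])
    if first_octet < 128:
        prefix = 8   # class A
    elif first_octet < 192:
        prefix = 16  # class B
    else:
        prefix = 24  # class C (classes D and E are ignored in this sketch)
    # strict=False masks off the host bits instead of raising an error
    return ipaddress.ip_network(f"{addr}/{prefix}", strict=False)


# Hypothetical interface configuration: a /24 inside 10.x
iface = ipaddress.ip_interface("10.10.110.31/24")
guess = classful_network(str(iface.ip))

print(guess)                           # 10.0.0.0/8 (the "class A" guess)
print(iface.network)                   # 10.10.110.0/24 (what is actually configured)
print(iface.network.subnet_of(guess))  # True: the guess is too broad, but not wrong
```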

-

fiona replied to the thread [SOLVED] LXC Debian 13 With Nesting Disabled - No Console.
An enhancement by @Robert Obkircher to warn about this landed in pve-container=6.0.19:
pve-container (6.0.19) trixie; urgency=medium
...
  * fix #6897: warn that enabling cgroup nesting may be required for systemd.
While the nesting is greyed...

-

Gilou replied to the thread Where does 10.0.0.0/8 come from?
I need to check, and it may be a "bug" in the way it determines its own network. It's not totally wrong, because 10/8 certainly includes 10.10/16 or whatnot, but it's not exactly clever. I don't remember how this is computed, but I think that is the issue.

-

Michiel_1afa replied to the thread Watchdog Reboots.
We have had this same issue since replacing our Intel-based nodes with AMD ones. Lately we have unexpected reboots at least weekly on one or more nodes. For us this always happens during the backup window (lucky?) and we see high IO delay right...

-

hboetes2 replied to the thread Where does 10.0.0.0/8 come from?
root@pinkdemon ~ # rg pinkdemon /etc/hosts
5:10.10.110.31 pinkdemon.example.com pinkdemon
root@pinkdemon ~ # getent hosts pinkdemon
10.10.110.31 pinkdemon.example.com pinkdemon
And rg '10\.0\.0' /etc reveals nothing new. So, please...