Latest activity

-

gfngfn256 replied to the thread how P2V in Proxmox ?. Not exactly. That tool is for importing an existing VMware ESXi VM to PVE. The poster's request is for a P2V tool within PVE.

-

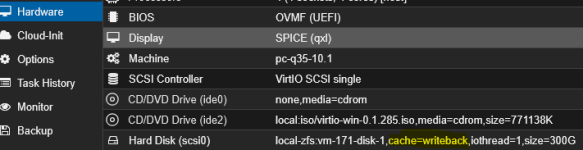

AlexR76 replied to the thread Terminalserver unter PVE langsam. From a performance standpoint, wouldn't WriteBack make considerably more sense for the terminal server? And on top of that, possibly this problem? 0.1.285: VirtIO SCSI/VirtIO Block: Read errors and performance issues with IO-heavy Windows Server 2025 VMs Using VirtIO SCSI or...

-

sgw replied to the thread Mellanox ConnectX-4 LX and brigde-vlan-aware on Proxmox 8.0.1. Any hints related to #30?

-

ayh20 reacted to Tornavida's post in the thread deactivated root@PAM - how to reactivate [SOLVED] with Like.

So, as thought, the solution is simple, but not for n00bs :) As mentioned above: I messed around with my Web GUI and somehow deactivated my root login on the server. To cut a long story short: # nano /etc/pve/user.cfg...

-

cklahn replied to the thread SPAM Report stündlich wenn neue Objekte. Hello, is it now possible with 9.0.3 to send the spam report hourly as soon as a mail is in quarantine? Regards, Christoph

-

fl0moto replied to the thread Upgrade old proxmox 5. Hi Mikel, this should be what you're looking for: https://forum.proxmox.com/threads/new-archive-cdn-for-end-of-life-eol-releases.178957/#post-830194 Cheers

-

Mikel Martinez posted the thread Upgrade old proxmox 5 in Proxmox VE: Installation and configuration. Hello, I have an old Proxmox 5 installation on two hosts that I need to upgrade. The problem is that, even though I have a subscription, the Buster packages have been deleted, so I can't upgrade. Is there another location where the repository is...

-

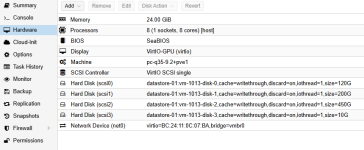

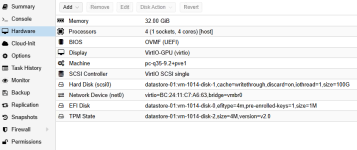

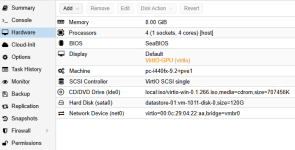

cklahn replied to the thread Terminalserver unter PVE langsam. Hello, here are the configs as screenshots: DC: Exchange: Terminal server: Management machine:

-

IsThisThingOn replied to the thread Hohe SWAP Auslastung. Is the zvol on SSDs, then? Otherwise I wouldn't do that. But great that it works; it was 99% likely the CPU type. Since your CPU is still fairly new, you can also try whether it starts with X86-64-v3 or even X86-64-v4.

-

MarkusKo reacted to Raudi's post in the thread [SOLVED] PVE 9.1.4 / NVIDIA Tesla T4 / vGPU 19.3 Installation with Like.

Here once more is a summary of the installation on a Secure Boot enabled system that was installed with PVE 9.1 and brought up to the current patch level... Kernel: 6.17.4-2-pve PVE-Manager: 9.1.4 NVIDIA vGPU driver: 580.126.08...

-

Hi all, so I have now done the following and it seems to work. I set the CPU type to X86-64-v2-AES. I enabled discard and SSD emulation on the virtual HDDs. I turned off KSM sharing. I have the...

-

norm4n posted the thread LXC - Unprivileged AppArmor Profile anpassen? in Proxmox VE (Deutsch/German). Hello, after the update from Proxmox 8 to Proxmox 9, permission handling for LXCs has become more restrictive, so that an isc-dhcp-server is no longer allowed to start its service. The corresponding messages in the syslog/kernel log come from AppArmor...

-

VictorSTS replied to the thread Slow ceph operation. Nice to see this reaching the official documentation! Maybe OP set up a VLAN for the Ceph Public network with a different IP network from that of other cluster services and can just move the VLAN to a different physical NIC/bond. Did you...

-

Ceph Public is the network used to read/write from/to your Ceph OSDs from each PVE host, so you are limited to 1GB/s. Ceph Cluster network is used for OSD replication traffic only. Move Ceph Public to your 10GB NIC and there should be an...

-

npr replied to the thread PVE backups causing VM stalls. I think I may have come to some bit of a conclusion about why NFS seems to be an inferior choice here: A wrong choice of file mount. NFS mounts appear to have a bad default. If I compare the two types by mounting it both ways, spot the...

-

Heracleos replied to the thread Ceph librbd vs krbd. Yes, in my case too, the performance increase was massive, although I believe that CrystalDiskMark with only a 1 GB chunk running on a VM is not very indicative, since it uses the PVE node cache. I am inclined to think that the actual performance is...

-

jordelory reacted to ghandalf's post in the thread Several questions about POM - Proxmox offline mirror with Like.

Hello, I have installed a POM and added a POM subscription and PVE subscriptions, which has been working well. I'm also syncing several repositories, all set up via proxmox-offline-mirror setup -> works also perfectly fine. This is my config: cat...

-

steffeninseoul posted the thread How to use PBS and move backups into a cloud storage with WORM? in Proxmox Backup: Installation and configuration. Hi! I am new to Proxmox, so perhaps this is a trivial issue that I have. Any help is greatly appreciated! --> I am running PBS on my QNAP NAS (PBS inside a VM) and PBS does the backups of my VMs that run on a ProxMox VW. That all works fine. -->...

-

aaron replied to the thread Slow ceph operation. Move that to a fast network too! Otherwise your guests will be limited by that 1 Gbit. See https://docs.ceph.com/en/latest/rados/configuration/network-config-ref/ for which Ceph network is used for what. Check out our 2023 Ceph benchmark...
