Latest activity

-

Johannes S reacted to leesteken's post in the thread Use Cases for 3-Way ZFS Mirror for VM/LXC Storage vs. 2-Way Mirror? with Like.

Probably, but in my case it's only 1TB of backups and this gave me both a read improvement and a third copy. Eventually it is via multiple locations, as you also pointed out. My backups are on two different media (due to the mix of SSD and HDD...

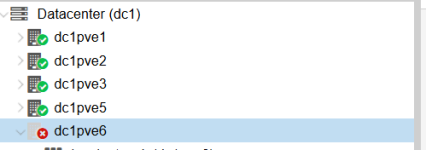

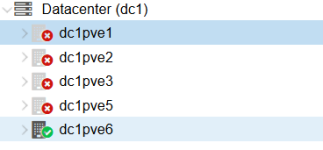

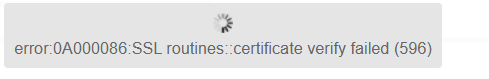

Alexandros85 posted the thread Error with adding a new node in the cluster. in Proxmox VE: Installation and configuration. Hello, I'm facing a problem with adding a new node to an existing PVE cluster. After adding the node through the web interface, it shows as offline: And on the new node all other nodes are shown offline: And the error I'm getting is: I've...

-

paradinglunatic replied to the thread PVEProxy Error. root@pve01:~# apt update Get:1 http://security.debian.org trixie-security InRelease [43.4 kB] Hit:2 http://ftp.us.debian.org/debian trixie InRelease Get:3 http://ftp.us.debian.org/debian...

-

news replied to the thread PVEProxy Error. Please run the following on the host root console: apt update, then apt dist-upgrade -y, then apt autoremove -y. Show us the results in a separate CODE block.

paradinglunatic replied to the thread PVEProxy Error. Yup, that upgrade guide is the one I followed to perform the upgrade. I didn't run into any strange issues during the upgrade either. I just double-checked my repos and there are no references to bookworm.

-

news replied to the thread PVEProxy Error. Hello, did you run this? https://pve.proxmox.com/wiki/Upgrade_from_8_to_9 And this? https://pve.proxmox.com/wiki/Upgrade_from_8_to_9#Update_the_configured_APT_repositories

You need to add the shared=1 option on this line. This way the container can be migrated whereby the local mountpoint will be ignored during migration. You need to make sure that the local mount point is available on every node yourself (e.g...

-

TomHildebrand replied to the thread [SOLVED] How can I retrofit virtio drivers into a fully installed Windows 11 VM. Yes, that did it. Thank you so much, narrateourale. My mistake was that I didn't adjust the Proxmox VM Options -> boot order. I kept going around in circles trying to adjust the boot order in the Windows repair screen, which of course didn't work...

-

softworx reacted to bbgeek17's post in the thread PSA: PVE 9.X iSCSI/iscsiadm upgrade incompatibility with Like.

A bit of good news to kick off the new year: our pull request addressing the iSCSI DB consistency/compatibility issue has been accepted by the Open-iSCSI maintainers. This means the fix will be included upstream and should make its way into a...

aaron reacted to Adevill20's post in the thread [SOLVED] Proxmox APT repository CDN is down with Like.

Ok, the problem was our local DNS resolver.

elmarconi replied to the thread Backup to NFS share spikes IO delay, locks up hypervisor. Good find! I experienced the same thing today, cleaned out old snapshots, and we'll see. However, I do rely on frequent snapshotting a lot, as Sanoid runs hourly on several machines. IO delay isn't seriously impacted on those. Any thoughts?

-

After upgrading from 8.x to 9 (currently on 9.1.4), my logs are getting flooded with the same error every 5 seconds, where the PID shown changes based on one of the three PIDs pveproxy is running on. I've restarted the service and this is the...

-

usernamerequired replied to the thread Proxmox random reboots on HP Elitedesk 800g4 - fixed with proxmox install on top of Debian 12 - now issues with hardware transcoding in plex. It's kind of sad that it has to be done, but after hours or even days of debugging I found this thread and can confirm that this is still a bug in 01/2026, with Proxmox 9.1.4, kernel 6.17.4-2-pve, and an HP ProDesk 400 G5 Desktop Mini in my case. For the...

-

Johannes S reacted to alexskysilk's post in the thread Use Cases for 3-Way ZFS Mirror for VM/LXC Storage vs. 2-Way Mirror? with Like.

This isn't valid for ZFS. ZFS will simply replace any read with a failed checksum and replace it on the affected vdev. EXCEPT this didn't actually work, which is why you don't see these anymore. Abstraction doesn't change the underlying device...

bbgeek17 replied to the thread High latency and catastrophic write performance with iSCSI + shared LVM. Hey @softworx, did you mean to use µs (us)? 20 ns is less than DRAM latency :-) Blockbridge: Ultra low latency all-NVMe shared storage for Proxmox - https://www.blockbridge.com/proxmox

Lennox replied to the thread Paperless auf Proxmox als CT. No, he also explains it again later in the video as a separate CT without Home Assistant. That is the method I followed for my install. I run Home Assistant as a VM and paperless as a separate CT.

-

sumithjay replied to the thread Dell R730xd Install 9.1 Error SWC0700. I experienced similar issues during the install on a Dell R730xd. After editing the install script with nomodeset and adjusting the SR-IOV and I/OAT DMA Engine settings in the Dell R730xd BIOS, the Proxmox VE 9.1 installer ran successfully.

-

ldupv replied to the thread Proxmox VE 7.3: Resize disk of running instances with disks in iSCSI storage. It would be easier. "qm monitor" provides access to the Human Monitor Interface (HMP), and I haven't had success with "block_resize device size -- resize a block image": (qemu) block_resize drive-scsi0 107374182400 Error: Cannot grow device files...

-

alexskysilk replied to the thread Use Cases for 3-Way ZFS Mirror for VM/LXC Storage vs. 2-Way Mirror?. This isn't valid for ZFS. ZFS will simply replace any read with a failed checksum and replace it on the affected vdev. EXCEPT this didn't actually work, which is why you don't see these anymore. Abstraction doesn't change the underlying device...

-

softworx replied to the thread High latency and catastrophic write performance with iSCSI + shared LVM. Also, check to make sure TCP Delayed ACK is disabled. Delayed ACKs will sometimes hold TCP ACKs for other data. This is normally good, and the impact is not that dramatic, for regular network traffic. For iSCSI it can do bad things for your...