Latest activity

-

BigBrodeur posted the thread virt-viewer scaling issue (fractionnal scaling) in Proxmox VE: Installation and configuration. Okay, I have been trying to find a fix for this for roughly 2 weeks, and no AI in this world will give the correct answer. I have Proxmox 9.1.2 and am running many VMs on it. I have the same problem with every one of them: 1. If I remote-view from...

-

psaintemarie replied to the thread Monitoring Proxmox with Datadog. Anyone got the journald logs working here? The conf has the following: logs: - type: journald source: proxmox include_units: - pveproxy.service - pvedaemon.service - pve-firewall.service - pve-ha-crm.service -...

-

BigBrodeur replied to the thread Move Disk hangs forever in version 8.2.4. For the people asking whether I managed to fix this, the answer is "No". What I ended up doing was scrapping everything and starting fresh on the LVM. Again, this was a long time ago, and I was pretty green at Proxmox. Also, I upgraded to 9.xx recently...

-

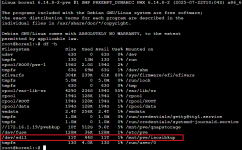

lucid13 replied to the thread PBS Backup issue on only 1 node out of 3. Sorry, I fixed the formatting. Is there a reason that "/mnt/vzsnap0/.data" wouldn't be created during backup? I don't have any issues with my other nodes.

-

IsThisThingOn reacted to UdoB's post in the thread ZFS SSD Migration Consumer zu Server SSD with Like.

As described in https://pve.proxmox.com/pve-docs/pve-admin-guide.html#sysadmin_zfs_change_failed_dev I simply attached the third SSD first. After this first intermediate step (before repeating it with the fourth device), you have...

-

lucid13 replied to the thread PBS Backup issue on only 1 node out of 3.

-

neobiker replied to the thread ZFS SSD Migration Consumer zu Server SSD. It is apparently the GLOTRENDS PU11 U.2 to PCIe 4.0 x4 adapter, not the SSD. The other SSD on the Supermicro AOC-SLG3-2E4T host bus adapter works. The SSDs are Samsung PM9A3.

-

bbgeek17 replied to the thread Pool names restrictions different in 9. Makes sense. You did not specify the type of pool (resource vs storage vs) and I assumed storage, as they have similar restrictions. My apologies. I don't have sufficient experience with resource pools. Generally, until a PVE team...

-

UmbraAtrox replied to the thread [SOLVED] Issues with Intel ARC A770M GPU Passthrough on NUC12SNKi72 (vfio-pci not ready after FLR or bus reset). Just registered to say thanks for suggesting to dump the BIOS. Did that and all the A750 issues are just gone. I can start and shut down VMs, even shut down one and start another that gets the GPU passed through, without artifacting or other issues...

-

jlauro replied to the thread Pool names restrictions different in 9. I am using them as resource pools under permissions, so they can be used as the filter for backup jobs. They don't show under pvesm status from what I can tell.

-

news replied to the thread PVE ausgefallen beim PBS Backup, danach Bootprobleme. Please also take a look at zfs auto-snapshot. Package: apt list zfs-auto-snapshot. If you want automated ZFS auto-snapshots, the package is a good start. Then set the following at the root of all pools: zfs set...

-

Pouch6867 replied to the thread PBS Backup issue on only 1 node out of 3. That's a hell of an output... not sure about everyone else, but I really like seeing each INFO output on its own line... anyway, I see: fstat "/mnt/vzsnap0/.data" failed - ENOENT: No such file or directory

-

news reacted to ThomasBo's post in the thread PVE ausgefallen beim PBS Backup, danach Bootprobleme with Like.

So, a quick update: I have changed my system. Yesterday I read up on the advantages of ZFS and so on. Today I installed 2 M.2 NVMe drives on the mainboard, which run only the Proxmox OS as a ZFS mirror. Then I removed the RAID controller, the 4 SSDs...

-

drevilish replied to the thread Disk Type is unknown (for old rotational hdd). Having the same issue; it started when I updated my backplane and HBA to SAS3. LSI-9300-16i BPN-SAS3-846EL1 pve-manager/9.1.2/9d436f37a0ac4172 (running kernel: 6.17.4-1-pve) === START OF INFORMATION SECTION === Device Model: ST20000NM002H-3KV133...

-

lucid13 posted the thread PBS Backup issue on only 1 node out of 3 in Proxmox Backup: Installation and configuration. Hi all, I've been looking for a while but haven't found any resolution to my issue. I have 3 separate Proxmox nodes on 3 machines. I set up a PBS instance on a 4th machine (Synology NAS). Right now 2 of my nodes back up with no issue. The...

-

drevilish replied to the thread Spinning disks reporting as type "unknown". I just upgraded my backplane and HBA to SAS3 and am now getting "unknown" as well. All the SSDs appear as type "SSD", but what should be "Hard Disk" is "unknown".

-

jdancer replied to the thread Proxmox Offline Mirror Pick the Latest Snapshot. I use this script by Thomas: https://forum.proxmox.com/threads/proxmox-offline-mirror-released.115219/#post-506894

-

bbgeek17 replied to the thread Pool names restrictions different in 9. The easiest thing to do is to install a virtual PVE and test your exact scenario: a digit-named pool with a VM that has disks on it. Start with 8, then upgrade to 9. A quick test on PVE 9 shows that the storage subsystem will skip newly incompatible...

-

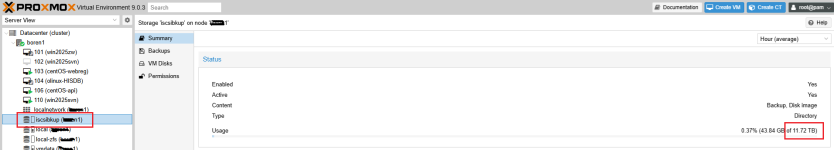

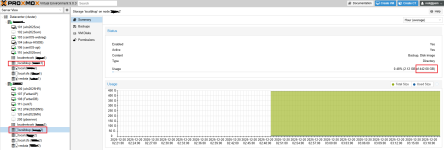

micahel jiang posted the thread [SOLVED] The cluster host displays a different storage capacity than the actual capacity. in Proxmox VE: Installation and configuration. Hi, how are you? I am new here. The attached image shows that although my storage device has 11TB, another host in the cluster shows only 442GB. Why is this? Can anyone tell me? Thanks!