Latest activity

-

shanreich reacted to d0glesby's post in the thread [SOLVED] Problem migrating VMs in a Proxmox 9 cluster with VLAN on vmbr0. with Like.

Stefan, I've updated with the latest patch, here's the latest output when migrating a VM: 2025-12-16 11:13:46 use dedicated network address for sending migration traffic (10.XX.XX.XX) 2025-12-16 11:13:46 starting migration of VM 273 to node...

-

d0glesby replied to the thread [SOLVED] Problem migrating VMs in a Proxmox 9 cluster with VLAN on vmbr0.

Stefan, I've updated with the latest patch, here's the latest output when migrating a VM: 2025-12-16 11:13:46 use dedicated network address for sending migration traffic (10.XX.XX.XX) 2025-12-16 11:13:46 starting migration of VM 273 to node...

-

fdcastel replied to the thread Proxmox x Hyper-V storage performance.

Thank you, @ucholak, for sharing your experience and expertise -- I really appreciated it. You gave me a glimpse of hope, but unfortunately it faded rather quickly :) I reformatted my NVMe drives to 4K using: nvme format /dev/nvme2n1...

-

d0glesby replied to the thread 8.4/9.1 Dell r730 kernel: sd 0:0:0:0: Power-on or device reset occurred error.

Make sure you have all of your firmware updated on the R730 motherboard and RAID controller.

-

shanreich replied to the thread [SOLVED] Problem migrating VMs in a Proxmox 9 cluster with VLAN on vmbr0.

Thanks for testing! Sent a revised patch: https://lore.proxmox.com/pve-devel/20251216160513.360391-1-s.hanreich@proxmox.com/T/#u

-

julyat replied to the thread Bonded ethernet uplinks broken in pve-common 9.1.1.

Well, I got myself a simple patch, but it's not well tested, so I'm not going to post it. At least now I can start those VMs.

-

duffyevan reacted to Danie de Jager's post in the thread from Manual: qm.conf: What does Protection do exactly? with Like.

Hi, I'm busy hardening a Proxmox Host and going through the settings I see "Protection" and in the help documents it states (and I've not been able to find anything on this subject. Is this purely so users cannot delete the VM until they turn this...

-

duffyevan reacted to Fantu's post in the thread from Manual: qm.conf: What does Protection do exactly? with Like.

This thing is not intuitive or documented (I also arrived here while looking for it). I think it would be useful to add a "help" link to the documentation that explains it, as there is for other fields but not for this one.

-

duffyevan reacted to proxiarch's post in the thread from Manual: qm.conf: What does Protection do exactly? with Like.

Just dropping the obligatory "Still, also why I am here..."

-

duffyevan reacted to h&j's post in the thread from Manual: qm.conf: What does Protection do exactly? with Like.

Same here as a semi-new user

-

d0glesby replied to the thread [SOLVED] Problem migrating VMs in a Proxmox 9 cluster with VLAN on vmbr0.

Stefan, I applied the patch info to the IPRoute2.pm file and was able to successfully migrate a VM as a test. I did see the following in the output during the migration: 2025-12-16 10:37:37 use dedicated network address for sending migration...

-

dbondarchuk replied to the thread Windows VM stuck on boot with CPU type set to host.

Yes, I have been through that thread, and unfortunately, nothing helped. But today, to test, I created a new VM with CPU set to host, installed a new version of Windows on the VM disk, and it works fine. So the problem is really interesting :)

-

GE_Admin replied to the thread [SOLVED] Super slow, timeout, and VM stuck while backing up, after updated to PVE 9.1.1 and PBS 4.0.20.

Thanks Lukas for this useful suggestion!

-

LKo replied to the thread [SOLVED] Super slow, timeout, and VM stuck while backing up, after updated to PVE 9.1.1 and PBS 4.0.20.

I'm doing my testing in the off-hours anyway, 6.17.11-3-test-pve is up and running and we'll have a result in the morning. For all others: if the stalled backup crashes your VMs, please look into enabling fleecing for the scheduled backups...

-

SteveITS reacted to shanreich's post in the thread [SOLVED] Problem migrating VMs in a Proxmox 9 cluster with VLAN on vmbr0. with Like.

There is already a patch available for the issue w.r.t. bonds - the initial fix for the NIC prefix broke the bonds: https://lore.proxmox.com/pve-devel/20251216094329.36089-1-s.hanreich@proxmox.com/T/#u

-

shanreich replied to the thread [SOLVED] Problem migrating VMs in a Proxmox 9 cluster with VLAN on vmbr0.

There is already a patch available for the issue w.r.t. bonds - the initial fix for the NIC prefix broke the bonds: https://lore.proxmox.com/pve-devel/20251216094329.36089-1-s.hanreich@proxmox.com/T/#u

-

d0glesby replied to the thread [SOLVED] Problem migrating VMs in a Proxmox 9 cluster with VLAN on vmbr0.

I've run into this today after I performed a full-upgrade and rebooted one node in a cluster. Now I am unable to migrate VMs to that node. All physical interfaces start with "eth". I do have a bond0 interface that is used for the bridged port...

-

LoomiX posted the thread Wyse 5070 cannot connect via browser in Proxmox VE: Networking and Firewall.

I have just installed Proxmox 9.1 on my new Dell Wyse 5070. My router is a FRITZ!Box 7530 and the Wyse is connected via LAN. So I have given the Proxmox server the IP 192.168.178.74 and if I look at the web front end of the FRITZ!Box I can see...

-

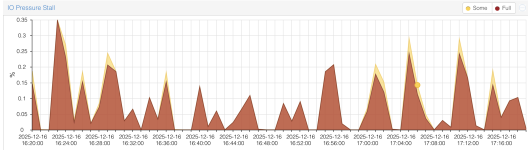

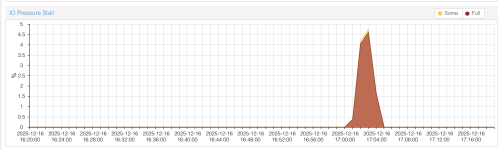

Can anyone explain to me why the IO pressure stall always displays an average of 0.1% on ZFS on 4x NVMe, while LVM on hardware RAID with SSDs usually displays zero all the time, except for rare bursts when VMs are being cloned?

-

Der Harry replied to the thread ACME Plugin - Hetzner "DNS Console is moving to the Hetzner Console".

This is a patch that you can apply for current Proxmox 9.x and PBS 4.x systems. Edit: Unfortunately it needs a reboot. Only tested for PVE (thanks @TaktischerSpeck); PDM / PBS might still need a reboot. Updated for PDM, Update for reboot...