Latest activity

-

SteveITS reacted to shanreich's post in the thread [SOLVED] Problem migrating VMs in a Proxmox 9 cluster with VLAN on vmbr0 with Like.

There is already a patch available for the issue w.r.t. bonds; the initial fix for the NIC prefix broke the bonds: https://lore.proxmox.com/pve-devel/20251216094329.36089-1-s.hanreich@proxmox.com/T/#u

-

shanreich replied to the thread [SOLVED] Problem migrating VMs in a Proxmox 9 cluster with VLAN on vmbr0.

There is already a patch available for the issue w.r.t. bonds; the initial fix for the NIC prefix broke the bonds: https://lore.proxmox.com/pve-devel/20251216094329.36089-1-s.hanreich@proxmox.com/T/#u

-

d0glesby replied to the thread [SOLVED] Problem migrating VMs in a Proxmox 9 cluster with VLAN on vmbr0.

I've run into this today after I performed a full-upgrade and rebooted one node in a cluster. Now I am unable to migrate VMs to that node. All physical interfaces start with "eth". I do have a bond0 interface that is used for the bridged port...

-

LoomiX posted the thread Wyse 5070 cannot connect via browser in Proxmox VE: Networking and Firewall.

I have just installed Proxmox 9.1 on my new Dell Wyse 5070. My router is a Fritz!Box 7530 and the Wyse is connected via LAN. I have given the Proxmox server the IP 192.168.178.74, and if I look at the web front end of the Fritz!Box I can see...

-

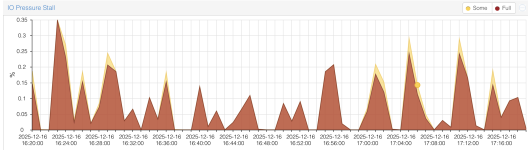

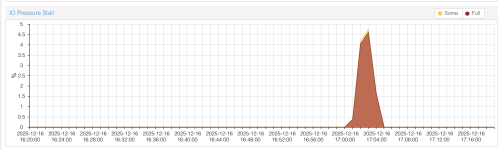

Can anyone explain why the IO pressure stall always displays an average of 0.1% on ZFS on 4x NVMe, while LVM on hardware RAID with SSDs usually displays zero all the time, except for rare bursts when VMs are being cloned?

-

Der Harry replied to the thread ACME Plugin - Hetzner "DNS Console is moving to the Hetzner Console".

This is a patch that you can apply for current Proxmox 9.x and PBS 4.x systems. Edit: Unfortunately it needs a reboot. Only tested for PVE (thanks @TaktischerSpeck); PDM / PBS might still need a reboot. Updated for PDM, update for reboot...

-

jt_telrite replied to the thread TrueNAS Storage Plugin.

@warlocksyno @curruscanis Have either of you noticed any irregularity viewing the disks in the UI (pve > Disks in the menu)? I get communication failures, but not all hosts are affected, e.g. Connection refused (595), Connection timed out (596).

-

Moayad replied to the thread Renaming the server - missed the /storage cfg.

Hi, could you please check the `/etc/hosts` file? I would also check our wiki guide on renaming a PVE node [0].

[0] https://pve.proxmox.com/wiki/Renaming_a_PVE_node

-

jepper replied to the thread KRBD 0 Ceph prevents VMs from starting.

You're absolutely right. I had already looked at this particular snippet, but I must have suffered from severe troubleshooting/afternoon fatigue. I have symlinked from /etc/pve/priv/ceph now to one of the paths kvm is looking for, and the VM came...

-

uwka77 replied to the thread NAS UGreen DXP4800 Plus.

My question is: what is the best use for my new UGreen NAS, which is meant to replace an older Synology? It is supposed to serve two purposes in a non-production homelab environment: hold approx. 6 TB of backup files and act as a datastore for...

-

brainsoft posted the thread Can't upload ISOs via PVE (proxmox 9 ZFS IO Stall) in Proxmox VE: Installation and configuration.

Hello, I used to be able to upload ISOs from the web UI. This is a basic feature; of course it worked, and I never had a problem with PVE 8. Somewhere since PVE 9, maybe 9.1, I can no longer upload ISOs. The system IO stall goes up to 90 and the...

-

Rico29 replied to the thread [SOLVED] Super slow, timeout, and VM stuck while backing up, after updated to PVE 9.1.1 and PBS 4.0.20.

Hello, I have been facing the same problem since I upgraded all my PBS servers to PBS 4. Running 5 PBS servers / 8 PVE clusters, we had freezes on different VMs on different PVE versions. I've rolled back all my PBS servers to 6.14.11-4-pve. I'll test the latest "test-pve"...

-

randymartin reacted to leesteken's post in the thread Proxmox 9.1.2 HA migration issues when rebooting node with Like.

Maybe try updating your nodes; the release notes of today's qemu-server 9.1.2 update includes: EDIT: This is available on the no-subscription repository: https://pve.proxmox.com/pve-docs/pve-admin-guide.html#sysadmin_package_repositories EDIT2...

-

randymartin reacted to Dennigma's post in the thread Proxmox 9.1.2 HA migration issues when rebooting node with Like.

I have had the same problem since I updated today; you need to set the manual maintenance mode before rebooting. For some reason, migrating with this works fine. When it's stuck in the faulty state, it helps to shut down the VM (not in the Proxmox...

-

GE_Admin replied to the thread [SOLVED] Super slow, timeout, and VM stuck while backing up, after updated to PVE 9.1.1 and PBS 4.0.20.

Thanks Chris for the info. However, we're in the "feature freeze" phase leading up to the Christmas holidays, and I don't feel like testing a kernel that might work well for a few days and then, on December 25th, crashes my system just as I'm...

-

jakob_ledermann replied to the thread [SOLVED] Automatic Hot/Cold Storage for VMs.

It looks like I managed to implement this successfully with the following hookscript: #!/bin/bash if [ "$2" == "pre-start" ] then echo "Move the disk from NAS to SSD" nohup /usr/sbin/qm disk move "$1" sata0 local-zfs --delete true...

-

microchris replied to the thread Windows Guest memory utilization problem on PVE 9.0.x.

OK, I have some news: my pfSense/OpenBSD VM and a Windows XP VM seem to have triggered ballooning. Their memory usage went down significantly, despite showing "actual=1024 max_mem=1024" using "info balloon" in the monitor. The mentioned Windows...

-

cwt replied to the thread IO Pressure Stall issue after upgrade to 9.1.

OMG!! 40 LXCs, each with 4 vCPUs and 4 GB RAM? Running on a 16/32 core CPU combined with consumer NAS drives? Massive overcommit; no wonder you're running into I/O stalls. I don't believe that the system ever ran normally. Otherwise I through my...

-

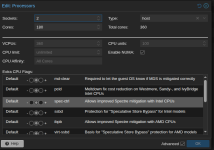

srdecny replied to the thread Offline CPUs when using more than one socket.

I do have 2 sockets in the VM configuration: For some reason the VM thinks it has 1 socket with 360 CPUs, but the socket and core count in the VM is correct.

-

Darkangeel_hd replied to the thread Nested PVE (on PVE host) Kernel panic Host injected async #PF in kernel mode.

With no ballooning and no KSM it also crashed... I will try (exceptionally) disabling swap on the host and see how it performs. But as was stated in this thread and elsewhere before, that should not be a desirable running config.