Latest activity

-

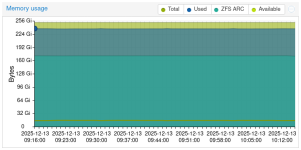

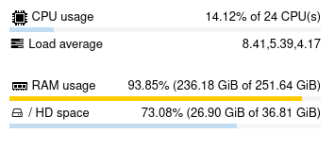

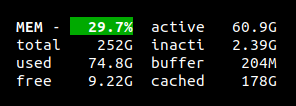

bobjoe123456 posted the thread Web UI reports critical memory usage, just caching in Proxmox VE: Installation and configuration. As you can see in the graph, about 65% of memory usage is ARC. This is fine, but the web UI reports it as actual used memory, which leads to incorrect usage stats. Glances reports it correctly.
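
The gap described here can be checked by hand. The minimal Python sketch below is not how the Proxmox web UI or Glances compute their numbers. It reports used memory twice: once as MemTotal minus MemAvailable, and once with the current ARC size (the `size` row of OpenZFS's /proc/spl/kstat/zfs/arcstats) subtracted. It assumes the ARC is not counted in MemAvailable, which is the usual situation on ZFS-on-Linux hosts. The function names are hypothetical.

```python
# Minimal sketch, assuming Linux /proc/meminfo (values in kB) and the
# OpenZFS arcstats kstat layout ("name type data" rows, "size" in bytes).

def parse_meminfo(text: str) -> dict[str, int]:
    """Return /proc/meminfo fields, converting kB values to bytes."""
    fields: dict[str, int] = {}
    for line in text.splitlines():
        if ":" not in line:
            continue
        key, rest = line.split(":", 1)
        parts = rest.split()
        if not parts:
            continue
        value = int(parts[0])
        if len(parts) > 1 and parts[1] == "kB":
            value *= 1024
        fields[key.strip()] = value
    return fields


def parse_arc_size(text: str) -> int:
    """Return the current ARC size in bytes (the 'size' row of arcstats)."""
    for line in text.splitlines():
        parts = line.split()
        if len(parts) == 3 and parts[0] == "size":
            return int(parts[2])
    raise ValueError("no 'size' row found in arcstats")


def memory_usage(meminfo_text: str, arcstats_text: str) -> tuple[int, int]:
    """Return (used including ARC, used excluding ARC) in bytes.

    Assumes the ARC is not counted in MemAvailable, so it shows up as "used".
    """
    mem = parse_meminfo(meminfo_text)
    used_incl_arc = mem["MemTotal"] - mem["MemAvailable"]
    used_excl_arc = max(used_incl_arc - parse_arc_size(arcstats_text), 0)
    return used_incl_arc, used_excl_arc


def read_host_usage() -> tuple[int, int]:
    """Read the live files on a Linux host with the OpenZFS module loaded."""
    with open("/proc/meminfo") as mi, open("/proc/spl/kstat/zfs/arcstats") as arc:
        return memory_usage(mi.read(), arc.read())
```

On a host like the one in the graph, the second value of `read_host_usage()` should be far smaller than the first, because the ARC accounts for most of the "used" figure.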

-

Hello, I have a Proxmox on an old version (proxmox-ve: 5.1-25). After a motherboard problem (two dead capacitors), I replaced the capacitors while waiting to be able to recover the data. My Proxmox reboots, and so do the VMs; however, I have...

-

SteffenDE replied to the thread Nach HW Tausch (identisch) seltsames Verhalten und kein Backup (After identical hardware swap: strange behavior and no backup). I did that, or rather checked it, but unfortunately it's still happening and I can't find the problem... In the syslog I constantly see errors of this kind: RRDC and RRD update error: illegal attempt to update using time 1765645632 when last update time is 1790536034...
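
RRD files reject any update whose timestamp is not newer than the file's stored last-update time, so it helps to decode the two epoch values. The minimal Python sketch below does that. The rejected update falls on 13 December 2025, but the file claims a last update on 27 September 2026, about 288 days later. One plausible reading, which the thread does not confirm, is that the system clock was set to a future date at some point (for example around the hardware swap) and the RRD files recorded that time.

```python
from datetime import datetime, timezone

# Timestamps copied from the syslog line quoted above.
UPDATE_TIME = 1765645632       # time of the rejected update
LAST_UPDATE_TIME = 1790536034  # last update time stored in the RRD file


def to_utc(ts: int) -> datetime:
    """Convert a Unix epoch timestamp to an aware UTC datetime."""
    return datetime.fromtimestamp(ts, tz=timezone.utc)


def days_ahead(update_ts: int, last_update_ts: int) -> float:
    """How many days the stored last-update time lies after the new update."""
    return (last_update_ts - update_ts) / 86400


print("rejected update:   ", to_utc(UPDATE_TIME).isoformat())
print("stored last update:", to_utc(LAST_UPDATE_TIME).isoformat())
print(f"stored time is {days_ahead(UPDATE_TIME, LAST_UPDATE_TIME):.1f} days ahead")
```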

-

jhmc93 replied to the thread AMD A12 igpu. I couldn't tell you, but yes, I own a mini PC with an AMD A12 CPU. I was just wondering if I could use passthrough; if I can't pass the iGPU through, I'll just run a Linux desktop on it bare metal.

-

FrankList80 reacted to neobiker's post in the thread ZFS SSD Migration Consumer zu Server SSD (ZFS SSD migration from consumer to server SSDs) with

Like.

IMO an HDD copy station won't work for NVMe SSDs / U.2 SSDs.

-

neobiker replied to the thread ZFS SSD Migration Consumer zu Server SSD. IMO an HDD copy station won't work for NVMe SSDs / U.2 SSDs.

-

FrankList80 replied to the thread ZFS SSD Migration Consumer zu Server SSD. The practical way: use an HDD copy station (same size), which is completely agnostic. It usually works. (Note: sorry, with mirrors it certainly works too, but the additional storage space is what gets "lost") .... but in general: an HDD/SSD copy station)...

-

Hello, I would like to migrate my Proxmox 8.4.14 installation (root system on ZFS) from a consumer SSD mirror (2x 512M) to a server SSD mirror (2x 950G). As a test, I installed the new SSDs in a spare PC and Proxmox 9.1.2 on them...

-

I've just spent 4 days troubleshooting this, but I finally realized where I went wrong: it was the order of things. I wanted to change the boot pool name from "rpool" to "spool": 1) Install Proxmox and boot it. 2) Log in and edit most files...

-

Hello, is it possible to pass through the iGPU for this CPU?

-

juliokele replied to the thread Proxmox host randomly starts incorrectly reporting 90% ram usage in windows vm. "I could not find this anywhere on the forums..." https://forum.proxmox.com/threads/balloon-funktioniert-nicht-bei-windows-2012r2-migration-von-hyper-v-zu-proxmox-ve.43148/ The Proxmox staff might need to add this to their best practices.

-

LongQT-sea replied to the thread iGPU passthrough on Proxmox VE 9.1.1 – VM freezes on boot. @cracked As long as you meet the requirements, following this guide will work for your Intel iGPU: https://github.com/LongQT-sea/intel-igpu-passthru?#overview

-

LongQT-sea replied to the thread updated to PVE 9.0 then GPU passthrough stopped working on Windows. @JonSnow Try this, brother: https://github.com/LongQT-sea/intel-igpu-passthru

-

Johannes S reacted to IsThisThingOn's post in the thread ZFS storage is very full, we would like to increase the space but... with

Like.

Sorry, I misunderstood. In that case you can leave it. Sure. So Proxmox uses the good default of 16k volblocksize. That means that all your VMs' ZFS raw disks are offered 16k blocks. Now let's look at how ZFS provides 16k blocks. You have RAIDZ1...
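
The arithmetic behind "how ZFS provides 16k blocks" on RAIDZ can be sketched in a few lines of Python. This is a minimal sketch assuming 4 KiB physical sectors (ashift=12). The disk count is cut off in the quoted post, so 3, 4 and 5 disks are shown. It implements the commonly documented RAIDZ allocation rule (parity sectors per stripe row, total padded up to a multiple of parity + 1 sectors) and ignores compression and metadata.

```python
import math


def raidz_sectors(block_bytes: int, sector_bytes: int, disks: int, parity: int) -> int:
    """Sectors one block occupies on a RAIDZ vdev, including parity and padding."""
    data = math.ceil(block_bytes / sector_bytes)   # data sectors
    rows = math.ceil(data / (disks - parity))      # stripe rows needed
    total = data + rows * parity                   # parity sectors for each row
    # Pad the allocation up to a multiple of (parity + 1) sectors.
    return math.ceil(total / (parity + 1)) * (parity + 1)


def efficiency(block_bytes: int, sector_bytes: int, disks: int, parity: int) -> float:
    """Fraction of the allocated space that holds data."""
    data = math.ceil(block_bytes / sector_bytes)
    return data / raidz_sectors(block_bytes, sector_bytes, disks, parity)


# 16k volblocksize on RAIDZ1 with 4 KiB sectors (ashift=12 assumed)
for n in (3, 4, 5):
    actual = efficiency(16 * 1024, 4096, n, 1)
    nominal = (n - 1) / n
    print(f"{n} disks: {actual:.1%} usable (nominal {nominal:.1%})")
```

Under these assumptions every width ends up at 4 data sectors out of 6 allocated (about 66.7%), so a wider RAIDZ1 vdev does not gain space for 16k zvol blocks, even though its nominal efficiency is higher.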

-

Johannes S reacted to meyergru's post in the thread ZFS storage is very full, we would like to increase the space but... with

Like.

Obviously, I meant "not in a sensible way" - at least for what the OP asked for, namely to increase his storage from 2x the size of one of his physical disks, so using 5 disks either does not use one of them, thus not increasing the net size...

-

Johannes S reacted to UdoB's post in the thread ZFS storage is very full, we would like to increase the space but... with

Like.

Of course I know that you (and most users here in the forum) know the facts, but this sentence: calls for "Mr. Obvious", stating "yes, you can!" ;-)

root@pnz:~# zpool create multimirror mirror sdc sdd sde sdf sdg
root@pnz:~# zpool status...
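
To put numbers on the layouts discussed in this thread, here is a minimal Python sketch of nominal pool capacity for five equal-sized disks. It is a simplification that ignores RAIDZ padding (which, per the volblocksize discussion above, can eat into RAIDZ capacity), metadata and slop space. The layouts are illustrative choices, not the OP's actual configuration, and the function names are hypothetical.

```python
def mirror_pool(vdevs: list[list[float]]) -> float:
    """Nominal capacity of a pool of mirror vdevs: each vdev gives its smallest disk."""
    return sum(min(vdev) for vdev in vdevs)


def raidz_pool(disks: list[float], parity: int) -> float:
    """Nominal capacity of a single RAIDZ vdev (ignores padding and metadata)."""
    return (len(disks) - parity) * min(disks)


disk = 1.0  # capacity of one disk in arbitrary units (equal-sized disks assumed)
layouts = {
    "5-way mirror (like multimirror above)": mirror_pool([[disk] * 5]),
    "2 x 2-way mirror, 5th disk unused": mirror_pool([[disk, disk], [disk, disk]]),
    "RAIDZ1 over all 5 disks": raidz_pool([disk] * 5, parity=1),
}
for name, capacity in layouts.items():
    print(f"{name}: {capacity:g} x one disk")
```

This matches meyergru's point: with mirrors, a fifth disk either goes unused or, as in a 5-way mirror, adds redundancy but no net space.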

-

Johannes S reacted to SteveITS's post in the thread Proxmox host randomly starts incorrectly reporting 90% ram usage in windows vm with

Like.

A VM will use memory as it needs. The VM knows how much it is currently using. The host knows the peak ever allocated because it doesn’t get that back. Except via ballooning. Or shutdown/“power off”…I don’t actually remember if a VM restart is...

-

SteveITS replied to the thread Proxmox host randomly starts incorrectly reporting 90% ram usage in windows vm. A VM will use memory as it needs. The VM knows how much it is currently using. The host knows the peak ever allocated because it doesn’t get that back. Except via ballooning. Or shutdown/“power off”…I don’t actually remember if a VM restart is...

-

UdoB replied to the thread Computer shutting down every morning around 3 that I didn't set to happen. The first thing is to look into the journal: journalctl -b -1 -e = show the end (-e) of the previous (-1) boot. Good luck then. Learning by doing is fine! Just try to understand what you are doing... ;-)
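
If you want to script the same check, here is a minimal Python sketch. It swaps `-e` (which jumps to the end inside the pager) for `-n` plus `--no-pager`, so the output can be captured instead of being shown interactively. The function names are hypothetical, and a previous boot is only available if the journal is stored persistently.

```python
import subprocess


def journal_cmd(boot: int = -1, lines: int = 200) -> list[str]:
    """Build a journalctl call for the last `lines` entries of one boot.

    -b -1 selects the previous boot; -n limits the number of entries and
    --no-pager prints directly instead of opening a pager.
    """
    return ["journalctl", "-b", str(boot), "-n", str(lines), "--no-pager"]


def previous_boot_tail(lines: int = 200) -> str:
    """Return the end of the previous boot's journal as text."""
    result = subprocess.run(journal_cmd(-1, lines), capture_output=True, text=True, check=True)
    return result.stdout
```

Printing `previous_boot_tail()` after the next unexpected 3 a.m. shutdown shows the last entries before the machine went down.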

-

PmUserZFS replied to the thread CEPH replica vs EC 4.2. Replicated size=3 NVMe pool: writes ~0.5–1 ms (ACK after 2 copies), reads ~0.2–0.5 ms. EC NVMe pool: writes ~2–4 ms, reads ~1–2 ms. So EC on NVMe is still fast, but replication will always beat EC for latency-sensitive workloads because...
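
The latency figures are one side of the trade-off; capacity is the other. The minimal Python sketch below assumes "EC 4.2" in the thread title means k=4 data chunks and m=2 coding chunks. It ignores Ceph overheads such as the full ratios and the extra cost of partial-stripe writes.

```python
from fractions import Fraction


def replicated_usable(size: int) -> Fraction:
    """Usable share of raw capacity for a replicated pool with `size` copies."""
    return Fraction(1, size)


def ec_usable(k: int, m: int) -> Fraction:
    """Usable share of raw capacity for an EC pool with k data and m coding chunks."""
    return Fraction(k, k + m)


for name, usable in [("replicated size=3", replicated_usable(3)),
                     ("EC k=4, m=2", ec_usable(4, 2))]:
    # Raw capacity consumed per byte of stored data is the inverse of the usable share.
    print(f"{name}: {float(usable):.1%} usable, {float(1 / usable):.2f}x raw per byte stored")
```

Under these assumptions, replication buys its lower latency with twice the raw capacity per stored byte (3x vs 1.5x).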