Latest activity

proxuser77 replied to the thread Proxmox no longer starts after update to version 9. I had this on one host too, and the following helped me: 1. Download the Proxmox 9 ISO, write it to a USB stick and boot from it. 2. Then select Rescue or Recovery in the USB stick's boot menu. (I can't remember the...

yunmkfed replied to the thread Proxmox hangs after upgrade to v9. I recorded the entire boot sequence and the console updates from a few minutes afterwards: https://youtu.be/C9GJ2WJ-1x8

FSNaval reacted to fba's post in the thread CEPH Configuration: CephFS only on a Specific Pool? with Like.

So you have an RBD pool called vmpool, and now you want to put CephFS into that pool? That doesn't work, and to me at least it doesn't make sense. Could you elaborate on what you want to achieve?

neponn reacted to mr.hollywood's post in the thread [SOLVED] Intel NIC e1000e hardware unit hang with Like.

^ That's very interesting. I've been downloading a Kali Everything torrent without issue. It's 12+ GB, but it's not stressing it as far as speed goes. I'll do more testing with iperf3 later. It may be of interest that our MAC statuses and...

tcabernoch replied to the thread CEPH cache disk. Thanks, Steve. The reason I wanted to do a DB/WAL disk is that the capacity SSDs are SATA, so they connect at 6 Gb/s. This is a Gen13 Dell. They should have bought SAS for 12 Gb/s. Terrible original build choices. And I thought the speed I was...
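As a rough back-of-the-envelope check on the SATA vs. SAS point: SATA III at 6 Gb/s and 12 Gb/s SAS (SAS-3) both use 8b/10b line encoding, so only 8 of every 10 bits on the wire carry data. The sketch below converts a link rate into an approximate payload ceiling in MB/s. It ignores protocol and framing overhead and says nothing about what the SSDs themselves can sustain; the function name is hypothetical.

```python
def payload_ceiling_mb_s(line_rate_gbps: float,
                         data_bits: int = 8,
                         coded_bits: int = 10) -> float:
    """Approximate payload ceiling of a serial storage link in MB/s.

    Assumes 8b/10b encoding by default (SATA III, SAS-3): every 10 line
    bits carry 8 data bits. Protocol/framing overhead is ignored.
    """
    line_mb_s = line_rate_gbps * 1000 / 8         # Gb/s on the wire -> MB/s (decimal)
    return line_mb_s * data_bits / coded_bits     # keep only the data bits


if __name__ == "__main__":
    for name, rate in (("SATA III", 6.0), ("SAS-3", 12.0)):
        print(f"{name}: ~{payload_ceiling_mb_s(rate):.0f} MB/s")
```

In other words, a SATA SSD is capped at roughly 600 MB/s per link before any Ceph overhead, versus roughly 1200 MB/s for 12 Gb/s SAS.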

readyspace replied to the thread Mount Point - Files exist within LXC, but not host. Quick checks: was the bind mount active before the file was created? And are your LXCs unprivileged, so that UID/GID mapping could be hiding the file?
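For the UID/GID question: with the Proxmox VE defaults, an unprivileged container maps container IDs 0 to 65535 onto host IDs 100000 to 165535, so a file created by root inside the container shows up as owned by 100000 on the host. The sketch below does that translation. It assumes the default mapping and no custom `lxc.idmap` entries in the container config; the function names are hypothetical, so adjust the base and range if your config differs.

```python
# Assumed Proxmox VE default idmap for unprivileged containers (no custom
# lxc.idmap entries): IDs 0..65535 inside the container appear as
# 100000..165535 on the host.
HOST_BASE = 100000
ID_RANGE = 65536


def container_to_host_id(cid: int, base: int = HOST_BASE, size: int = ID_RANGE) -> int:
    """Translate a container UID/GID to the UID/GID the host sees."""
    if not 0 <= cid < size:
        raise ValueError(f"{cid} is outside the mapped range 0..{size - 1}")
    return base + cid


def host_to_container_id(hid: int, base: int = HOST_BASE, size: int = ID_RANGE):
    """Translate a host UID/GID back to the container's view, or None if unmapped."""
    offset = hid - base
    return offset if 0 <= offset < size else None


if __name__ == "__main__":
    # Root inside the container owns its files as UID 100000 on the host.
    for cid in (0, 1000):
        print(f"container {cid} -> host {container_to_host_id(cid)}")
```

Comparing these numbers with the numeric owners shown by `ls -ln` on the host side of the bind mount is a quick way to tell a permission problem from a mount problem.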

Molch posted the thread [SOLVED] Error when clicking “Whitelist”: internal error, unknown action 'whitelist' (PMG 9.0) in Mail Gateway: Installation and configuration. Hello everyone, after upgrading to Proxmox Mail Gateway 9.0, I get the following error when clicking the “Whitelist” link in the quarantine view: internal error, unknown action 'whitelist' at /usr/share/perl5/PMG/API2/Quarantine.pm...

readyspace replied to the thread Cluster Issues. Hi, stale node entries or mismatched SSH keys can definitely cause cluster sync chaos. In addition, make sure the new node’s ring0_addr matches the existing subnet in /etc/pve/corosync.conf, and that /etc/hosts across all nodes correctly maps...
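The ring0_addr check can be scripted. The sketch below pulls every `ring0_addr` value out of a corosync.conf text and reports the ones that fall outside a given subnet. The sample config, node names, addresses and subnet are hypothetical; hostnames (rather than IP literals) are reported as well, because this sketch does no name resolution.

```python
import ipaddress
import re

# Matches lines such as "    ring0_addr: 10.0.0.11" inside the nodelist.
RING0_RE = re.compile(r"^[ \t]*ring0_addr[ \t]*:[ \t]*(\S+)[ \t]*$", re.MULTILINE)


def ring0_addresses(corosync_conf: str) -> list:
    """Return every ring0_addr value found in a corosync.conf text."""
    return RING0_RE.findall(corosync_conf)


def addresses_outside_subnet(corosync_conf: str, subnet: str) -> list:
    """List ring0_addr values that are not inside `subnet`.

    Non-IP values (hostnames) are reported too, since they cannot be
    checked without name resolution.
    """
    net = ipaddress.ip_network(subnet, strict=False)
    outside = []
    for value in ring0_addresses(corosync_conf):
        try:
            if ipaddress.ip_address(value) not in net:
                outside.append(value)
        except ValueError:  # hostname rather than an IP literal
            outside.append(value)
    return outside


# Hypothetical two-node excerpt; pve3 was rejoined on the wrong subnet.
SAMPLE_CONF = """
nodelist {
  node {
    name: pve1
    nodeid: 1
    quorum_votes: 1
    ring0_addr: 10.0.0.11
  }
  node {
    name: pve3
    nodeid: 3
    quorum_votes: 1
    ring0_addr: 192.168.1.13
  }
}
"""

if __name__ == "__main__":
    # On a real node you would read /etc/pve/corosync.conf instead.
    print(addresses_outside_subnet(SAMPLE_CONF, "10.0.0.0/24"))
```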

SteveITS replied to the thread CEPH cache disk. If you already have SSDs, I wouldn’t try to separate the DB/WAL. Usable Ceph capacity depends on replication: the default is 3/2 (size 3, min_size 2), so you typically get about 1/3 of the total raw space, less overhead.
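To make the 3/2 arithmetic concrete: with size 3 every object is stored three times, so raw capacity is divided by three, and you then want to stay well below full. The sketch below models "overhead" as a planned fill level of 0.85, an assumption borrowed from Ceph's default nearfull ratio; real usable space also depends on OSD imbalance and failure-domain headroom. The 12 TB cluster is hypothetical.

```python
def usable_capacity(raw: float, size: int = 3, headroom: float = 0.85) -> float:
    """Rough usable capacity of a replicated Ceph pool.

    raw      -- total raw OSD capacity (any unit; the result uses the same unit)
    size     -- replica count (3 in the default 3/2 setup; min_size does not
                change capacity, only when I/O is allowed)
    headroom -- fraction you plan to fill; 0.85 is an assumption modelled on
                Ceph's default nearfull ratio
    """
    if size < 1:
        raise ValueError("size must be >= 1")
    if not 0 < headroom <= 1:
        raise ValueError("headroom must be in (0, 1]")
    return raw / size * headroom


if __name__ == "__main__":
    # Hypothetical cluster: 3 nodes x 4 TB of SSD = 12 TB raw
    print(f"{usable_capacity(12.0):.1f} TB usable")
```

So 12 TB raw works out to 4 TB after 3x replication, and to about 3.4 TB if you keep below the nearfull threshold.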

tcabernoch replied to the thread Cluster Issues. Clue there ... you wiped it clean. And then you probably rejoined it with the same name ... Did you delete /etc/pve/nodes/OLD-NODE-YOU-NUKED before rejoining the rebuilt machine? Did you comment out the old SSH key in...

ramonmedina replied to the thread Keep copies of emails. Resurrecting this thread: I'm migrating to PMG from the free (but now defunct) EFA Email Filter Appliance in my home lab, and I have deployed the SpamTitan appliance at work. My hope was to also migrate work to PMG, pending how well it works for...

readyspace replied to the thread [SOLVED] Configure vlan on CHR in proxmox. Hi, you will need to set this up using Proxmox bridges and VLAN tagging. Please try this: Create one bridge (e.g. vmbr0) for WAN (CHR ether1). Create another, VLAN-aware bridge (e.g. vmbr1) for LAN and VLANs (CHR ether2). Attach VLAN interfaces (10...

mr.hollywood replied to the thread [SOLVED] Intel NIC e1000e hardware unit hang. ^ That's very interesting. I've been downloading a Kali Everything torrent without issue. It's 12+ GB, but it's not stressing it as far as speed goes. I'll do more testing with iperf3 later. It may be of interest that our MAC statuses and...

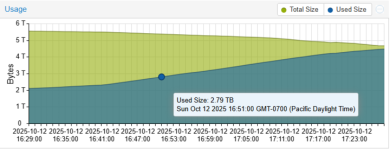

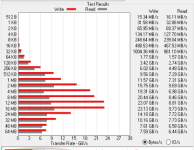

tcabernoch replied to the thread CEPH cache disk. So, after all of that questing, testing, and inquiry, I exposed it to some real-world load. I restored a whole bunch of client VMs to the cluster and ran them like hell. And then I completely filled the datastore till it stopped working, to see...

readyspace replied to the thread proxmox and 2 vm with outer ip: and different gw. Hi, you’re likely hitting a routing and proxy ARP issue caused by multiple gateways and Hetzner’s MAC-bound IP setup. At Hetzner, each additional IP or subnet must be assigned to a unique virtual MAC and attached to the VM NIC; you can’t just...

readyspace replied to the thread Having issue running a game server on lxc. Make sure your router → Proxmox host → LXC container network path is open. The usual blockers are service binding, firewalls, or missing NAT rules on the Proxmox host. Ask them to check that the game server is listening on the IP, that...
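One way to walk the router → host → container path is a plain TCP connect test, run first on the Proxmox host against the container's IP and then from outside against the public address; where it stops succeeding shows which hop is blocking. This is a minimal sketch: the host and port come from the command line, and it only covers TCP. Many game servers use UDP, which a connect test cannot verify.

```python
import socket
import sys


def tcp_port_open(host: str, port: int, timeout: float = 2.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        # create_connection resolves the host and completes the TCP handshake
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:  # refused, timed out, unreachable, DNS failure, ...
        return False


if __name__ == "__main__" and len(sys.argv) == 3:
    # Usage: python check_port.py <host> <port>
    # e.g. on the Proxmox host with the container's IP, then from outside.
    host, port = sys.argv[1], int(sys.argv[2])
    state = "open" if tcp_port_open(host, port) else "closed or filtered"
    print(f"{host}:{port} is {state}")
```

If the port is open from the host but not from outside, the NAT/port-forwarding or firewall rules are the next thing to check; if it is closed even from the host, look at what address the server binds to inside the container.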

alarsson replied to the thread pvestatd segfaults. FWIW, I am now also seeing the same issue on one of my MS-01s since upgrading from 8.4 to 9.0.10 with kernel 6.41. No other daemons are segfaulting. I suspected bad RAM, but memtest86 isn't finding anything.