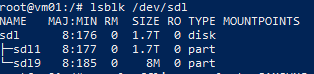

I built a server with 4 disks.

The first 2 disks are a ZFS RAID 1 (mirror) for the Proxmox OS.

The other 2 disks are a ZFS RAID 1 (mirror) for the VMs.

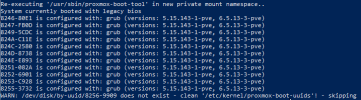

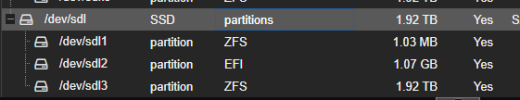

I have no problem replacing a disk in the ZFS pool for the VMs. However, when I do the same for the Proxmox OS pool, I find that both the BIOS and EFI partitions are missing.

Is there an easy way to do this natively? Thanks.
