Hello,

previous week i faced the issue of process zfs unmount stuck while proceeding rollback of LXC. After that, also incoming replication got stuck (zfs receive).

I though allright, its been long time since restart and there was new kernel and zfs installed but system was not rebooted. So i have restarted the system and the running pve-kernel version changed from 5.15.83-1 to 5.15.107-2 and ZFS changed from 2.1.7 to 2.1.11.

Unfortunatelly, it took just few days and i got into this issue again. I was hoping after rebooting the server, the new version of kernel and zfs, will solve the problem and it will not appear again. Unfortunatelly i was wrong.

The reroll issue happened for the same LXC - 194. Since the reboot i have used rollback only once for LXC 194 and it went allright, but today, the second time it got stuck again.

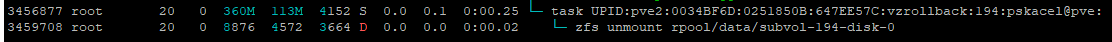

Since the process is in D state, it cannot be killed.

I will appreciate any hints for possible solution or process to reveal more details about this issue.

previous week i faced the issue of process zfs unmount stuck while proceeding rollback of LXC. After that, also incoming replication got stuck (zfs receive).

I though allright, its been long time since restart and there was new kernel and zfs installed but system was not rebooted. So i have restarted the system and the running pve-kernel version changed from 5.15.83-1 to 5.15.107-2 and ZFS changed from 2.1.7 to 2.1.11.

Unfortunatelly, it took just few days and i got into this issue again. I was hoping after rebooting the server, the new version of kernel and zfs, will solve the problem and it will not appear again. Unfortunatelly i was wrong.

The reroll issue happened for the same LXC - 194. Since the reboot i have used rollback only once for LXC 194 and it went allright, but today, the second time it got stuck again.

Since the process is in D state, it cannot be killed.

I will appreciate any hints for possible solution or process to reveal more details about this issue.

proxmox-ve: 7.4-1 (running kernel: 5.15.107-2-pve)

pve-manager: 7.4-3 (running version: 7.4-3/9002ab8a)

pve-kernel-5.15: 7.4-3

pve-kernel-5.15.107-2-pve: 5.15.107-2

pve-kernel-5.15.107-1-pve: 5.15.107-1

pve-kernel-5.15.102-1-pve: 5.15.102-1

pve-kernel-5.15.85-1-pve: 5.15.85-1

pve-kernel-5.15.83-1-pve: 5.15.83-1

pve-kernel-5.15.74-1-pve: 5.15.74-1

ceph-fuse: 15.2.17-pve1

corosync: 3.1.7-pve1

criu: 3.15-1+pve-1

glusterfs-client: 9.2-1

ifupdown2: 3.1.0-1+pmx4

ksm-control-daemon: 1.4-1

libjs-extjs: 7.0.0-1

libknet1: 1.24-pve2

libproxmox-acme-perl: 1.4.4

libproxmox-backup-qemu0: 1.3.1-1

libproxmox-rs-perl: 0.2.1

libpve-access-control: 7.4-3

libpve-apiclient-perl: 3.2-1

libpve-common-perl: 7.4-1

libpve-guest-common-perl: 4.2-4

libpve-http-server-perl: 4.2-3

libpve-rs-perl: 0.7.6

libpve-storage-perl: 7.4-2

libspice-server1: 0.14.3-2.1

lvm2: 2.03.11-2.1

lxc-pve: 5.0.2-2

lxcfs: 5.0.3-pve1

novnc-pve: 1.4.0-1

proxmox-backup-client: 2.4.2-1

proxmox-backup-file-restore: 2.4.2-1

proxmox-kernel-helper: 7.4-1

proxmox-mail-forward: 0.1.1-1

proxmox-mini-journalreader: 1.3-1

proxmox-widget-toolkit: 3.7.0

pve-cluster: 7.3-3

pve-container: 4.4-3

pve-docs: 7.4-2

pve-edk2-firmware: 3.20230228-2

pve-firewall: 4.3-2

pve-firmware: 3.6-5

pve-ha-manager: 3.6.1

pve-i18n: 2.12-1

pve-qemu-kvm: 7.2.0-8

pve-xtermjs: 4.16.0-1

qemu-server: 7.4-3

smartmontools: 7.2-pve3

spiceterm: 3.2-2

swtpm: 0.8.0~bpo11+3

vncterm: 1.7-1

zfsutils-linux: 2.1.11-pve111:12:09 up 4 days, 13:23, 2 users, load average: 12.89, 11.37, 11.12 total used free shared buff/cache available

Mem: 188Gi 122Gi 60Gi 2.6Gi 5.7Gi 62Gi

Swap: 54Gi 1.6Gi 53Giroot 3459569 0.0 0.0 0 0 ? D 09:51 0:00 [z_zrele]

root 3459708 0.0 0.0 8876 4572 ? D 09:51 0:00 zfs unmount rpool/data/subvol-194-disk-0

root 3821079 0.0 0.0 8880 4696 ? D 10:14 0:00 zfs recv -F -- rpool/data/subvol-100-disk-0[Jun 6 09:55] INFO: task z_zrele:3459569 blocked for more than 120 seconds.

[ +0.001258] Tainted: P O 5.15.107-2-pve #1

[ +0.001135] "echo 0 > /proc/sys/kernel/hung_task_timeout_secs" disables this message.

[ +0.001185] task:z_zrele state:D stack: 0 pid:3459569 ppid: 2 flags:0x00004000

[ +0.001122] Call Trace:

[ +0.001098] <TASK>

[ +0.001058] __schedule+0x34e/0x1740

[ +0.001088] ? __mod_memcg_lruvec_state+0x63/0xe0

[ +0.001044] ? __count_memcg_events+0x40/0xa0

[ +0.000979] schedule+0x69/0x110

[ +0.000925] io_schedule+0x46/0x80

[ +0.000970] wait_on_page_bit_common+0x114/0x3e0

[ +0.000924] ? filemap_invalidate_unlock_two+0x50/0x50

[ +0.000861] __lock_page+0x4c/0x60

[ +0.000945] truncate_inode_pages_range+0x352/0x6a0

[ +0.000925] truncate_pagecache+0x49/0x70

[ +0.000923] truncate_setsize+0x27/0x60

[ +0.000908] zpl_evict_inode+0x33/0x60 [zfs]

[ +0.001147] evict+0xd2/0x190

[ +0.000947] iput.part.0+0x174/0x1d0

[ +0.000926] iput+0x1c/0x30

[ +0.000911] zfs_rele_async_task+0xe/0x20 [zfs]

[ +0.001015] taskq_thread+0x29f/0x4d0 [spl]

[ +0.000896] ? wake_up_q+0x90/0x90

[ +0.000932] ? taskq_thread_spawn+0x60/0x60 [spl]

[ +0.000939] kthread+0x12a/0x150

[ +0.000819] ? set_kthread_struct+0x50/0x50

[ +0.000794] ret_from_fork+0x22/0x30

[ +0.000798] </TASK>

[ +0.000762] INFO: task zfs:3459708 blocked for more than 120 seconds.

[ +0.000743] Tainted: P O 5.15.107-2-pve #1

[ +0.000734] "echo 0 > /proc/sys/kernel/hung_task_timeout_secs" disables this message.

[ +0.000706] task:zfs state:D stack: 0 pid:3459708 ppid:3456877 flags:0x00004000

[ +0.000702] Call Trace:

[ +0.000710] <TASK>

[ +0.000676] __schedule+0x34e/0x1740

[ +0.000676] ? wake_up_process+0x15/0x20

[ +0.000644] ? __queue_work+0x20c/0x490

[ +0.000650] schedule+0x69/0x110

[ +0.000676] taskq_wait_outstanding+0x90/0xd0 [spl]

[ +0.000647] ? wait_woken+0x70/0x70

[ +0.000598] zfs_preumount+0x4b/0x70 [zfs]

[ +0.000683] zpl_kill_sb+0x13/0x30 [zfs]

[ +0.000658] deactivate_locked_super+0x3b/0xb0

[ +0.000572] deactivate_super+0x44/0x50

[ +0.000631] cleanup_mnt+0x150/0x1d0

[ +0.000606] __cleanup_mnt+0x12/0x20

[ +0.000558] task_work_run+0x6d/0xb0

[ +0.000537] exit_to_user_mode_prepare+0x1a8/0x1b0

[ +0.000556] syscall_exit_to_user_mode+0x27/0x50

[ +0.000531] do_syscall_64+0x69/0xc0

[ +0.000531] entry_SYSCALL_64_after_hwframe+0x61/0xcb

[ +0.000506] RIP: 0033:0x7f67e5288a67

[ +0.000532] RSP: 002b:00007ffc71e39b18 EFLAGS: 00000246 ORIG_RAX: 00000000000000a6

[ +0.000555] RAX: 0000000000000000 RBX: 0000000000000000 RCX: 00007f67e5288a67

[ +0.000554] RDX: 0009200008010000 RSI: 0000000000000000 RDI: 000055da7cd74510

[ +0.000542] RBP: 00007ffc71e39b80 R08: 0000000000000020 R09: 0000000000000000

[ +0.000536] R10: 0000000000000000 R11: 0000000000000246 R12: 0000000000000000

[ +0.000529] R13: 000055da7cd74510 R14: 000055da7cd65320 R15: 000055da7cd678a0

[ +0.000532] </TASK>

[Jun 6 09:59] INFO: task z_zrele:3459569 blocked for more than 362 seconds.

[ +0.000743] Tainted: P O 5.15.107-2-pve #1

[ +0.000536] "echo 0 > /proc/sys/kernel/hung_task_timeout_secs" disables this message.

[ +0.000550] task:z_zrele state:D stack: 0 pid:3459569 ppid: 2 flags:0x00004000

[ +0.000604] Call Trace:

[ +0.000575] <TASK>

[ +0.000659] __schedule+0x34e/0x1740

[ +0.000627] ? __mod_memcg_lruvec_state+0x63/0xe0

[ +0.000614] ? __count_memcg_events+0x40/0xa0

[ +0.000543] schedule+0x69/0x110

[ +0.000562] io_schedule+0x46/0x80

[ +0.000607] wait_on_page_bit_common+0x114/0x3e0

[ +0.000608] ? filemap_invalidate_unlock_two+0x50/0x50

[ +0.000649] __lock_page+0x4c/0x60

[ +0.000596] truncate_inode_pages_range+0x352/0x6a0

[ +0.000571] truncate_pagecache+0x49/0x70

[ +0.000598] truncate_setsize+0x27/0x60

[ +0.000576] zpl_evict_inode+0x33/0x60 [zfs]

[ +0.000686] evict+0xd2/0x190

[ +0.000535] iput.part.0+0x174/0x1d0

[ +0.000601] iput+0x1c/0x30

[ +0.000598] zfs_rele_async_task+0xe/0x20 [zfs]

[ +0.000649] taskq_thread+0x29f/0x4d0 [spl]

[ +0.000563] ? wake_up_q+0x90/0x90

[ +0.000556] ? taskq_thread_spawn+0x60/0x60 [spl]

[ +0.000564] kthread+0x12a/0x150

[ +0.000569] ? set_kthread_struct+0x50/0x50

[ +0.000572] ret_from_fork+0x22/0x30

[ +0.000563] </TASK>

[ +0.000516] INFO: task zfs:3459708 blocked for more than 362 seconds.

[ +0.000575] Tainted: P O 5.15.107-2-pve #1

[ +0.000570] "echo 0 > /proc/sys/kernel/hung_task_timeout_secs" disables this message.

[ +0.000572] task:zfs state:D stack: 0 pid:3459708 ppid:3456877 flags:0x00004000

[ +0.000617] Call Trace:

[ +0.000603] <TASK>

[ +0.000582] __schedule+0x34e/0x1740

[ +0.000586] ? wake_up_process+0x15/0x20

[ +0.000609] ? __queue_work+0x20c/0x490

[ +0.000581] schedule+0x69/0x110

[ +0.000582] taskq_wait_outstanding+0x90/0xd0 [spl]

[ +0.000626] ? wait_woken+0x70/0x70

[ +0.000618] zfs_preumount+0x4b/0x70 [zfs]

[ +0.000697] zpl_kill_sb+0x13/0x30 [zfs]

[ +0.000646] deactivate_locked_super+0x3b/0xb0

[ +0.000582] deactivate_super+0x44/0x50

[ +0.000586] cleanup_mnt+0x150/0x1d0

[ +0.000564] __cleanup_mnt+0x12/0x20

[ +0.000607] task_work_run+0x6d/0xb0

[ +0.000548] exit_to_user_mode_prepare+0x1a8/0x1b0

[ +0.000546] syscall_exit_to_user_mode+0x27/0x50

[ +0.000535] do_syscall_64+0x69/0xc0

[ +0.000506] entry_SYSCALL_64_after_hwframe+0x61/0xcb

[ +0.000523] RIP: 0033:0x7f67e5288a67

[ +0.000506] RSP: 002b:00007ffc71e39b18 EFLAGS: 00000246 ORIG_RAX: 00000000000000a6

[ +0.000561] RAX: 0000000000000000 RBX: 0000000000000000 RCX: 00007f67e5288a67

[ +0.000569] RDX: 0009200008010000 RSI: 0000000000000000 RDI: 000055da7cd74510

[ +0.000531] RBP: 00007ffc71e39b80 R08: 0000000000000020 R09: 0000000000000000

[ +0.000561] R10: 0000000000000000 R11: 0000000000000246 R12: 0000000000000000

[ +0.000566] R13: 000055da7cd74510 R14: 000055da7cd65320 R15: 000055da7cd678a0

[ +0.000555] </TASK>

[Jun 6 10:03] INFO: task z_zrele:3459569 blocked for more than 604 seconds.

[ +0.001032] Tainted: P O 5.15.107-2-pve #1

[ +0.000793] "echo 0 > /proc/sys/kernel/hung_task_timeout_secs" disables this message.

[ +0.000740] task:z_zrele state:D stack: 0 pid:3459569 ppid: 2 flags:0x00004000

[ +0.000818] Call Trace:

[ +0.000805] <TASK>

[ +0.000789] __schedule+0x34e/0x1740

[ +0.000787] ? __mod_memcg_lruvec_state+0x63/0xe0

[ +0.000758] ? __count_memcg_events+0x40/0xa0

[ +0.000786] schedule+0x69/0x110

[ +0.000851] io_schedule+0x46/0x80

[ +0.000755] wait_on_page_bit_common+0x114/0x3e0

[ +0.000773] ? filemap_invalidate_unlock_two+0x50/0x50

[ +0.000765] __lock_page+0x4c/0x60

[ +0.000768] truncate_inode_pages_range+0x352/0x6a0

[ +0.000805] truncate_pagecache+0x49/0x70

[ +0.000782] truncate_setsize+0x27/0x60

[ +0.000764] zpl_evict_inode+0x33/0x60 [zfs]

[ +0.000955] evict+0xd2/0x190

[ +0.000746] iput.part.0+0x174/0x1d0

[ +0.000761] iput+0x1c/0x30

[ +0.000690] zfs_rele_async_task+0xe/0x20 [zfs]

[ +0.000837] taskq_thread+0x29f/0x4d0 [spl]

[ +0.000721] ? wake_up_q+0x90/0x90

[ +0.000735] ? taskq_thread_spawn+0x60/0x60 [spl]

[ +0.000776] kthread+0x12a/0x150

[ +0.000728] ? set_kthread_struct+0x50/0x50

[ +0.000720] ret_from_fork+0x22/0x30

[ +0.000714] </TASK>

[ +0.000692] INFO: task zfs:3459708 blocked for more than 604 seconds.

[ +0.000733] Tainted: P O 5.15.107-2-pve #1

[ +0.000791] "echo 0 > /proc/sys/kernel/hung_task_timeout_secs" disables this message.

[ +0.000771] task:zfs state:D stack: 0 pid:3459708 ppid:3456877 flags:0x00004000

[ +0.000773] Call Trace:

[ +0.000764] <TASK>

[ +0.000773] __schedule+0x34e/0x1740

[ +0.000852] ? wake_up_process+0x15/0x20

[ +0.000792] ? __queue_work+0x20c/0x490

[ +0.000757] schedule+0x69/0x110

[ +0.000777] taskq_wait_outstanding+0x90/0xd0 [spl]

[ +0.000791] ? wait_woken+0x70/0x70

[ +0.000823] zfs_preumount+0x4b/0x70 [zfs]

[ +0.000902] zpl_kill_sb+0x13/0x30 [zfs]

[ +0.000836] deactivate_locked_super+0x3b/0xb0

[ +0.000772] deactivate_super+0x44/0x50

[ +0.000735] cleanup_mnt+0x150/0x1d0

[ +0.000809] __cleanup_mnt+0x12/0x20

[ +0.000720] task_work_run+0x6d/0xb0

[ +0.000658] exit_to_user_mode_prepare+0x1a8/0x1b0

[ +0.000744] syscall_exit_to_user_mode+0x27/0x50

[ +0.000638] do_syscall_64+0x69/0xc0

[ +0.000712] entry_SYSCALL_64_after_hwframe+0x61/0xcb

[ +0.000664] RIP: 0033:0x7f67e5288a67

[ +0.000701] RSP: 002b:00007ffc71e39b18 EFLAGS: 00000246 ORIG_RAX: 00000000000000a6

[ +0.000690] RAX: 0000000000000000 RBX: 0000000000000000 RCX: 00007f67e5288a67

[ +0.000708] RDX: 0009200008010000 RSI: 0000000000000000 RDI: 000055da7cd74510

[ +0.000693] RBP: 00007ffc71e39b80 R08: 0000000000000020 R09: 0000000000000000

[ +0.000730] R10: 0000000000000000 R11: 0000000000000246 R12: 0000000000000000

[ +0.000712] R13: 000055da7cd74510 R14: 000055da7cd65320 R15: 000055da7cd678a0arch: amd64

cores: 4

hostname: thingsboard-testing

lock: rollback

memory: 10240

net0: name=eth0,bridge=vmbr0,firewall=1,hwaddr=3E:05:71:41:7F:20,ip=dhcp,type=veth

onboot: 1

ostype: ubuntu

parent: pred_pouzitim_java_convertoru

rootfs: local-zfs:subvol-194-disk-0,size=180G

swap: 2048

[before_dump]

arch: amd64

cores: 2

hostname: thingsboard-testing

memory: 5120

net0: name=eth0,bridge=vmbr0,firewall=1,hwaddr=3E:05:71:41:7F:20,ip=dhcp,type=veth

onboot: 1

ostype: ubuntu

parent: pred_nahratim_cql

rootfs: local-zfs:subvol-194-disk-0,size=120G

snaptime: 1685104752

swap: 2048

[nainstalovana_cassandra]

arch: amd64

cores: 2

hostname: thingsboard-testing

memory: 5120

net0: name=eth0,bridge=vmbr0,firewall=1,hwaddr=3E:05:71:41:7F:20,ip=dhcp,type=veth

onboot: 1

ostype: ubuntu

parent: po_buildu_jar

rootfs: local-zfs:subvol-194-disk-0,size=120G

snaptime: 1685021857

swap: 2048

[po_buildu_jar]

arch: amd64

cores: 2

hostname: thingsboard-testing

memory: 5120

net0: name=eth0,bridge=vmbr0,firewall=1,hwaddr=3E:05:71:41:7F:20,ip=dhcp,type=veth

onboot: 1

ostype: ubuntu

parent: snap_working_community

rootfs: local-zfs:subvol-194-disk-0,size=120G

snaptime: 1685021009

swap: 2048

[po_dumpu]

arch: amd64

cores: 2

hostname: thingsboard-testing

memory: 5120

net0: name=eth0,bridge=vmbr0,firewall=1,hwaddr=3E:05:71:41:7F:20,ip=dhcp,type=veth

onboot: 1

ostype: ubuntu

parent: before_dump

rootfs: local-zfs:subvol-194-disk-0,size=180G

snaptime: 1685354172

swap: 2048

[pred_nahratim_cql]

arch: amd64

cores: 2

hostname: thingsboard-testing

memory: 5120

net0: name=eth0,bridge=vmbr0,firewall=1,hwaddr=3E:05:71:41:7F:20,ip=dhcp,type=veth

onboot: 1

ostype: ubuntu

parent: nainstalovana_cassandra

rootfs: local-zfs:subvol-194-disk-0,size=120G

snaptime: 1685104372

swap: 2048

[pred_pouzitim_java_convertoru]

arch: amd64

cores: 2

hostname: thingsboard-testing

memory: 5120

net0: name=eth0,bridge=vmbr0,firewall=1,hwaddr=3E:05:71:41:7F:20,ip=dhcp,type=veth

onboot: 1

ostype: ubuntu

parent: po_dumpu

rootfs: local-zfs:subvol-194-disk-0,size=180G

snaptime: 1685355177

swap: 2048

[snap_1]

arch: amd64

cores: 2

hostname: thingsboard-testing

memory: 5120

net0: name=eth0,bridge=vmbr0,firewall=1,hwaddr=3E:05:71:41:7F:20,ip=dhcp,type=veth

onboot: 1

ostype: ubuntu

rootfs: local-zfs:subvol-194-disk-0,size=120G

snaptime: 1685013073

swap: 2048

[snap_working_community]

arch: amd64

cores: 2

hostname: thingsboard-testing

memory: 5120

net0: name=eth0,bridge=vmbr0,firewall=1,hwaddr=3E:05:71:41:7F:20,ip=dhcp,type=veth

onboot: 1

ostype: ubuntu

parent: snap_1

rootfs: local-zfs:subvol-194-disk-0,size=120G

snaptime: 1685014924

swap: 2048total 4.5K

drwxr----- 2 root root 2 May 25 11:21 .

drwxr-xr-x 50 root root 50 May 25 11:21 ..