Hi,

I want to add a zfs storage to an LXC client. done that before, now it fails.

created the dataset, set a quota, added it in pve as a storage.

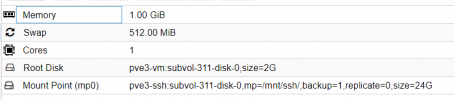

now i want to add it to my LXC Client as a resource/mp0. but the container fails to start with mp0. when i remove mp0, it boots without issues.

Like i did it before with other LXC Containers, for PBS and an APT-Mirror,... where i decided to keep it similar. i did not have that issues there, just a few weeks earlier.

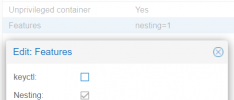

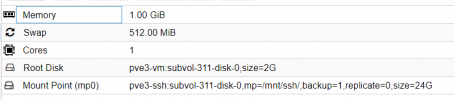

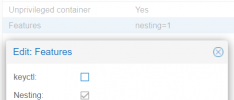

just in case, settings/options/features are common for all compared LXC Containers.

pve-manager/7.3-6/723bb6ec (running kernel: 5.15.85-1-pve)

so i rechecked my zfs dataset if i created it different, have redone it.

so i rechecked my pve storages, if there are differences. have deleted and recreated them.

could not find any mistakes or differences to the others before. even checked the commands in my .bash_history for diff's

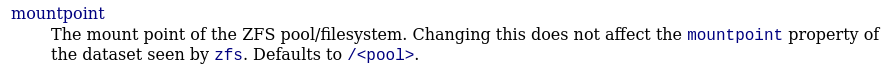

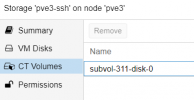

I guess i have found the issue, the mountpoint is missing for my pve3-ssh storage.

matches also the line in the debug output:

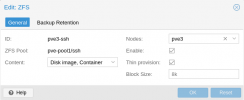

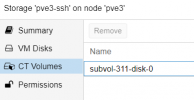

Thats how my pve zfs storage looks like.

pretty similar.

on the dataset pve-pool1/ssh is already the subvol for the mp0 mountpoint available.

I cannot find any mistake or diff in my procedures between pbs/apt creation of zfs/dataset and pve/storage vs the new one.

I'm pretty sure it has to do with the missing mountpoint setting in

Any idea where i made a mistake?

Why is mountpoint missing in storage.cfg

How to let my cotainer 311 boot with zfs/subvol/mp0 from pve-pool1/ssh storage?

I want to add a ZFS storage to an LXC container. I have done that before, but now it fails.

I created the dataset, set a quota, and added it in PVE as a storage.

Now I want to add it to my LXC container as a resource (mp0), but the container fails to start with mp0. When I remove mp0, it boots without issues.

I did the same before with other LXC containers, for PBS and an APT mirror, and decided to keep this one similar. I did not have this issue there, just a few weeks earlier.

Just in case: the settings/options/features are the same for all compared LXC containers.

pve-manager/7.3-6/723bb6ec (running kernel: 5.15.85-1-pve)

- nesting=1

- unprivileged=YES

- keyctl=0

root@pve3:~# pct start 311 --debug

run_buffer: 322 Script exited with status 2

lxc_init: 844 Failed to run lxc.hook.pre-start for container "311"

__lxc_start: 2027 Failed to initialize container "311"

id 0 hostid 100000 range 65536

INFO lsm - ../src/lxc/lsm/lsm.c:lsm_init_static:38 - Initialized LSM security driver AppArmor

INFO conf - ../src/lxc/conf.c:run_script_argv:338 - Executing script "/usr/share/lxc/hooks/lxc-pve-prestart-hook" for container "311", config section "lxc"

DEBUG conf - ../src/lxc/conf.c:run_buffer:311 - Script exec /usr/share/lxc/hooks/lxc-pve-prestart-hook 311 lxc pre-start produced output: cannot open directory //pve-pool1/ssh: No such file or directory

ERROR conf - ../src/lxc/conf.c:run_buffer:322 - Script exited with status 2

ERROR start - ../src/lxc/start.c:lxc_init:844 - Failed to run lxc.hook.pre-start for container "311"

ERROR start - ../src/lxc/start.c:__lxc_start:2027 - Failed to initialize container "311"

INFO conf - ../src/lxc/conf.c:run_script_argv:338 - Executing script "/usr/share/lxcfs/lxc.reboot.hook" for container "311", config section "lxc"

startup for container '311' failed

root@pve3:~#

So I rechecked my ZFS dataset to see whether I had created it differently, and recreated it.

I also rechecked my PVE storages for differences, then deleted and recreated them.

I could not find any mistakes or differences compared to the earlier ones. I even checked the commands in my .bash_history for differences.

root@pve3:~# cat /etc/pve/storage.cfg

...

zfspool: pve3-apt

pool pve-pool1/apt

content rootdir,images

mountpoint /mnt/apt

nodes pve3

sparse 1

zfspool: pbs1-storage

pool pve-pool1/pbs1-storage

content rootdir,images

mountpoint /mnt/pbs1-storage

nodes pve2

sparse 1

zfspool: pve3-ssh

pool pve-pool1/ssh

content rootdir,images

nodes pve3

sparse 1

...

I guess I have found the issue: the mountpoint is missing for my pve3-ssh storage.

That also matches this line in the debug output:

DEBUG conf - ../src/lxc/conf.c:run_buffer:311 - Script exec /usr/share/lxc/hooks/lxc-pve-prestart-hook 311 lxc pre-start produced output: cannot open directory //pve-pool1/ssh: No such file or directory
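To double-check that pve3-ssh really is the only zfspool entry without a `mountpoint` line, the storage.cfg excerpt above can be scanned with a few lines of Python. This is only a sketch: the helper names `parse_storage_cfg` and `zfspools_without_mountpoint` are made up for illustration, and the sample text is the excerpt from this post, not the full file.

```python
import re

# Excerpt of /etc/pve/storage.cfg as posted above (elided parts left out).
SAMPLE_CFG = """\
zfspool: pve3-apt
    pool pve-pool1/apt
    content rootdir,images
    mountpoint /mnt/apt
    nodes pve3
    sparse 1

zfspool: pbs1-storage
    pool pve-pool1/pbs1-storage
    content rootdir,images
    mountpoint /mnt/pbs1-storage
    nodes pve2
    sparse 1

zfspool: pve3-ssh
    pool pve-pool1/ssh
    content rootdir,images
    nodes pve3
    sparse 1
"""

# A section header looks like "<type>: <storage-id>".
HEADER = re.compile(r"^([A-Za-z0-9]+):\s*(\S+)$")


def parse_storage_cfg(text):
    """Return {storage_id: {"type": ..., key: value, ...}} (hypothetical helper)."""
    storages = {}
    current = None
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):
            continue
        match = HEADER.match(line)
        if match:
            current = {"type": match.group(1)}
            storages[match.group(2)] = current
        elif current is not None:
            # Property lines are "<key> <value>".
            key, _, value = line.partition(" ")
            current[key] = value.strip()
    return storages


def zfspools_without_mountpoint(text):
    """List zfspool storage IDs that have no 'mountpoint' property."""
    return [
        sid
        for sid, cfg in parse_storage_cfg(text).items()
        if cfg["type"] == "zfspool" and "mountpoint" not in cfg
    ]


if __name__ == "__main__":
    print(zfspools_without_mountpoint(SAMPLE_CFG))
```

On a real node the text would come from reading /etc/pve/storage.cfg instead of the hard-coded sample.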

This is what my ZFS storage on PVE looks like:

root@pve3:~# zfs list

...

pve-pool1 84.9G 814G 96K /pve-pool1

pve-pool1/apt 84.5G 115G 96K /mnt/apt

pve-pool1/apt/subvol-140-disk-0 84.5G 65.5G 84.5G /mnt/apt/subvol-140-disk-0

pve-pool1/ssh 192K 25.0G 96K /mnt/ssh

pve-pool1/ssh/subvol-311-disk-0 96K 24.0G 96K /mnt/ssh/subvol-311-disk-0

The apt and ssh datasets look pretty similar.

The subvol for the mp0 mount point already exists on the dataset pve-pool1/ssh.
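The mismatch I suspect can be spelled out as a small Python sketch. It rests on an assumption I have not verified in the PVE source: that without a `mountpoint` line in storage.cfg, the directory is derived from the pool name as `/<pool>`, which is what the `//pve-pool1/ssh` path in the debug output suggests. The function names are hypothetical, and the input is the `zfs list` output from this post.

```python
# zfs list output as posted above (NAME USED AVAIL REFER MOUNTPOINT).
ZFS_LIST = """\
pve-pool1 84.9G 814G 96K /pve-pool1
pve-pool1/apt 84.5G 115G 96K /mnt/apt
pve-pool1/apt/subvol-140-disk-0 84.5G 65.5G 84.5G /mnt/apt/subvol-140-disk-0
pve-pool1/ssh 192K 25.0G 96K /mnt/ssh
pve-pool1/ssh/subvol-311-disk-0 96K 24.0G 96K /mnt/ssh/subvol-311-disk-0
"""


def zfs_mountpoints(text):
    """Map dataset name to the mountpoint column of the zfs list output."""
    result = {}
    for line in text.splitlines():
        fields = line.split()
        if len(fields) == 5:
            result[fields[0]] = fields[4]
    return result


def assumed_storage_dir(pool, storage_mountpoint=None):
    """Directory the storage is expected under.

    ASSUMPTION: with no mountpoint configured, "/<pool>" is used.
    """
    return storage_mountpoint if storage_mountpoint else "/" + pool


def compare(pool, storage_mountpoint, zfs_text):
    """Return (actual ZFS mountpoint, assumed storage dir, whether they match)."""
    actual = zfs_mountpoints(zfs_text).get(pool)
    assumed = assumed_storage_dir(pool, storage_mountpoint)
    return actual, assumed, actual == assumed


if __name__ == "__main__":
    # pve3-apt has "mountpoint /mnt/apt" in storage.cfg, pve3-ssh has none.
    print(compare("pve-pool1/apt", "/mnt/apt", ZFS_LIST))
    print(compare("pve-pool1/ssh", None, ZFS_LIST))
```

If that assumption holds, the apt storage lines up with its dataset, while the ssh storage points at /pve-pool1/ssh even though the dataset is mounted at /mnt/ssh.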

I cannot find any mistake or difference between my procedure for the PBS/APT datasets and storages and the one for the new storage.

I'm pretty sure it has to do with the missing mountpoint setting in /etc/pve/storage.cfg.

Any idea where I made a mistake?

Why is the mountpoint missing in storage.cfg?

How can I get my container 311 to boot with the ZFS subvol as mp0 from the pve-pool1/ssh storage?