Hey PVE-Community,

I have a 2-node cluster with a PBS connected. The servers are Hetzner AX102 machines with each 2 x 1,92TB NVMe SSD attached. I use it mainly to serve one large VM which runs a couple of websites and some smaller VMs and one container.

The VM uses a 1.5TB volume/disk attached, but only uses about 900GB currently. But as I don't see an option to reduce this without creating a new VM and rsyncinc all the data to the second host here is my problem:

Unfortunately when setting this up, I did not think about storage and ZFS too much especially in terms of sizing.

The current setup uses:

- Storage Replication between the PVE hosts VIRTUAL01 and VIRTUAL02 so the VMs have a failover available if the physical server really crashes. HA is disabled, I would failover manually if really needed

- PBS with a daily backup to the PBS server more for disaster recovery, because restoring the 1.5TB needs about 3 hours so it's too long for a failover scenario in my case.

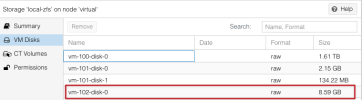

First, I could not imagine what would cause this issue, because i do not use any thin provisioning or similar. From the 1.84TB available disk space, about 1.6TB are for the main VM and the remaining storage is attached to the smaller instances or just not used.

From the timing, this happened at 02:15 when some backup scripts on the virtual machine itself are running, and this led me to the root cause:

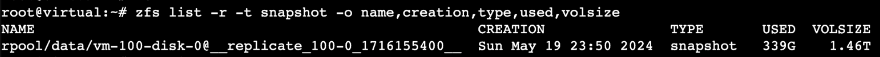

As I use the storage replication feature, this of course creates a snapshot in the background, you just don't see it in the GUI.

And as there are some data scripts running on the VM at 02:15, they create a lot of big TAR files (about 250GB) and send them to another customer of mine. And as the Storage replication is just running once a day (which is fine for me), the snapshot increases as it's copy-on-write and just fills up the storage until it's 100% full. Then the server becomes offline.

Although I just did not think about it, it makes sense that this happens - not sure why it did not happen in the last months, but maybe the amount of changed files was just lower that it could fit the free space. And after the replication is applied the snapshot size anyway is reduced to 0 again for the next window.

But as I run the storage replication anyway, one idea would be to run the scripts on the "cold" replication on VIRTUAL02.

I don't need to (and I think I cannot) power up the same replicated VM on the VIRTUAL02 host. So my options would be:

Or what would you suggest to solve my issue?

The main VM (which has the 1.6TB volume attached) does only use about 900GB for now. But I don't see any change to get this Volume reduced with the current amount of free space? The only way I see is disabling storage replication, creating a brand new VM on the second server with a smaller Disk/Volume and then running

Cheers,

Andy

Sorry for the wall of text, but I'd like to explain the setup first.

I have a 2-node cluster with a PBS connected. The servers are Hetzner AX102 machines, each with 2 x 1.92TB NVMe SSDs attached. I use it mainly to serve one large VM which runs a couple of websites, plus some smaller VMs and one container.

The large VM has a 1.5TB volume/disk attached, but currently only uses about 900GB. As I don't see an option to reduce this without creating a new VM and rsyncing all the data to the second host, here is my problem:

Current Setup

Bash:

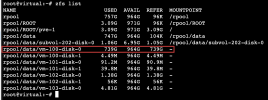

    # zfs list
    NAME                          USED   AVAIL  REFER  MOUNTPOINT
    rpool                         1.34T  346G   96K    /rpool
    rpool/ROOT                    3.08G  352G   96K    /rpool/ROOT
    rpool/ROOT/pve-1              3.08G  352G   3.08G  /
    rpool/data                    1.33T  346G   104K   /rpool/data
    rpool/data/subvol-202-disk-0  1.05G  6.95G  1.05G  /rpool/data/subvol-202-disk-0
    rpool/data/vm-100-disk-0      1.33T  346G   1.33T  -
    rpool/data/vm-101-disk-0      90.9M  346G   90.9M  -
    rpool/data/vm-101-disk-1      39.8M  346G   39.8M  -
    rpool/data/vm-102-disk-0      4.77G  346G   4.77G  -

Unfortunately, when setting this up, I did not think much about storage and ZFS, especially in terms of sizing.

The current setup uses:

- Storage Replication between the PVE hosts VIRTUAL01 and VIRTUAL02, so the VMs have a failover available if the physical server really crashes. HA is disabled; I would fail over manually if really needed.

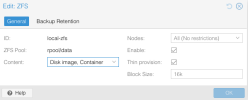

- PBS with a daily backup to the PBS server, mainly for disaster recovery. Restoring the 1.5TB takes about 3 hours, which is too long for a failover scenario in my case.

Problem

Everything was running fine until tonight, when my Opsgenie app called me to tell me that the servers had gone offline. Luckily, after a similar incident last week, I had at least set a reservation for the PVE ZFS volume itself, so the virtualization host VIRTUAL01 was still accessible via SSH and GUI.

At first, I could not imagine what would cause this issue, because I do not use any thin provisioning or similar. Of the 1.84TB of available disk space, about 1.6TB is for the main VM and the remaining storage is attached to the smaller instances or just not used.

From the timing, this happened at 02:15 when some backup scripts on the virtual machine itself are running, and this led me to the root cause:

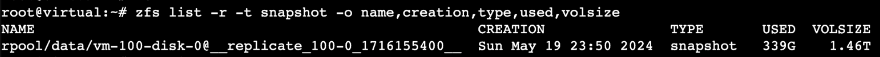

As I use the storage replication feature, this of course creates a snapshot in the background; you just don't see it in the GUI.

There are some data scripts running on the VM at 02:15 that create a lot of big TAR files (about 250GB) and send them to another customer of mine. As the storage replication only runs once a day (which is fine for me), the snapshot grows because ZFS is copy-on-write: every block the scripts overwrite is kept in the snapshot, and this fills up the storage until it is 100% full. Then the server goes offline.

Although I just did not think about it, it makes sense that this happens. I am not sure why it did not happen in the last months, but maybe the amount of changed data was simply low enough to fit into the free space. After the replication has run, the snapshot size drops back to 0 anyway for the next window.
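To make the hidden snapshot and its size visible, something like the following should work from the PVE shell. It is only a minimal sketch: the dataset names come from my `zfs list` output above, the 250GB figure is my rough estimate of the nightly TAR volume, and `has_headroom` is a hypothetical helper name I made up for the check.

```shell
#!/bin/sh
DATASET="rpool/data/vm-100-disk-0"   # main VM disk from the zfs list output
POOL_FS="rpool/data"                 # parent dataset whose free space matters
CHURN_BYTES=250000000000             # assumed nightly TAR volume (~250GB)

# Hypothetical helper: succeeds if free bytes ($1) exceed expected churn ($2).
has_headroom() {
    [ "$1" -gt "$2" ]
}

# Only query ZFS where the tools exist (i.e. on the PVE host).
if command -v zfs >/dev/null 2>&1; then
    # Snapshots of the disk, including the replication one not shown in the GUI.
    zfs list -t snapshot -o name,used,referenced -s creation -d 1 "$DATASET"

    # Space held by all snapshots of this disk, in bytes.
    zfs get -Hp -o value usedbysnapshots "$DATASET"

    # Warn if the free space is smaller than the expected nightly churn.
    avail=$(zfs get -Hp -o value available "$POOL_FS")
    if [ -n "$avail" ] && ! has_headroom "$avail" "$CHURN_BYTES"; then
        echo "WARNING: only $avail bytes free, nightly export may fill the pool" >&2
    fi
fi
```

Run shortly before 02:15 (for example from cron), this would at least warn me before the pool fills up again, but it does not fix the underlying sizing problem.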

So my question is:

I cannot get rid of those scripts, which generate a lot of data and export it. So this will happen again if I keep the same setup.

But as I run the storage replication anyway, one idea would be to run the scripts on the "cold" replica on VIRTUAL02.

I don't need to (and I think I cannot) power up the same replicated VM on the VIRTUAL02 host. So my options would be:

- Automatically create a VM and attach the same Disk to the new VM

- Mount /dev/zvol/rpool/data/vm-100-disk-0-part1 directly on the second PVE host VIRTUAL02 and run the scripts there
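For the second option, what I have in mind is roughly the following. This is an untested sketch with one change: instead of mounting the replicated zvol itself, it mounts a ZFS clone of its newest snapshot, so the replica is never written to. The clone name, the mount point, and the function names are hypothetical, and I am assuming the data lives on partition 1 with a filesystem the host can mount.

```shell
#!/bin/sh
# Sketch only: run the export from a clone of the replicated disk on VIRTUAL02.
DATASET="rpool/data/vm-100-disk-0"   # replicated zvol
CLONE="rpool/data/vm-100-export"     # hypothetical clone name
MNT="/mnt/vm-100-export"             # hypothetical mount point

# Build the device path of a partition on a zvol.
zvol_part() {
    printf '/dev/zvol/%s-part%s\n' "$1" "$2"
}

# Newest snapshot of a dataset (the replication job leaves one behind).
latest_snapshot() {
    zfs list -H -t snapshot -o name -S creation -d 1 "$1" | head -n 1
}

run_export() {
    snap=$(latest_snapshot "$DATASET")
    [ -n "$snap" ] || { echo "no snapshot found for $DATASET" >&2; return 1; }

    zfs clone "$snap" "$CLONE" || return 1
    udevadm settle                   # wait for the -part1 device node to appear
    mkdir -p "$MNT"
    if ! mount -o ro "$(zvol_part "$CLONE" 1)" "$MNT"; then
        zfs destroy "$CLONE"
        return 1
    fi

    # ... run the TAR/export scripts against "$MNT" here ...

    umount "$MNT"
    zfs destroy "$CLONE"
}

# Only does anything when called explicitly, e.g.: sh export-from-replica.sh run
if [ "${1:-}" = "run" ]; then
    run_export
fi
```

As far as I understand, the clone has to be destroyed before the next replication run, because ZFS refuses to delete a snapshot that still has a dependent clone, and the replication job removes its old snapshot after each sync.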

Or what would you suggest to solve my issue?

The main VM (which has the 1.6TB volume attached) only uses about 900GB for now, but I don't see any chance of shrinking this volume with the current amount of free space. The only way I see is disabling storage replication, creating a brand new VM on the second server with a smaller disk/volume, and then running rsync until I can switch over to the new VM and delete the old one. At least I am not aware of any way to reduce the size of the existing ZFS volume without a huge headache.

Cheers,

Andy