On one of my training labs, I have a series of training VMs running PVE with nested virtualization. These VMs have two disks in a ZFS mirror for the OS, boot via UEFI with Secure Boot disabled, and use systemd-boot (no GRUB). The VMs use `machine: q35,viommu=virtio` for PCI passthrough explanations and web UI walkthroughs. I'm testing upgrading these PVE VMs from PVE 9.0.10 to the latest PVE 9.1 using the no-subscription repository.

Current version is:

Code:

proxmox-ve: 9.0.0 (running kernel: 6.14.11-2-pve)

pve-manager: 9.0.10 (running version: 9.0.10/deb1ca707ec72a89)

proxmox-kernel-helper: 9.0.4

proxmox-kernel-6.14.11-2-pve-signed: 6.14.11-2

proxmox-kernel-6.14: 6.14.11-2

proxmox-kernel-6.14.8-2-pve-signed: 6.14.8-2

amd64-microcode: 3.20250311.1

ceph-fuse: 19.2.3-pve1

corosync: 3.1.9-pve2

criu: 4.1.1-1

frr-pythontools: 10.3.1-1+pve4

ifupdown2: 3.3.0-1+pmx10

ksm-control-daemon: 1.5-1

libjs-extjs: 7.0.0-5

libproxmox-acme-perl: 1.7.0

libproxmox-backup-qemu0: 2.0.1

libproxmox-rs-perl: 0.4.1

libpve-access-control: 9.0.3

libpve-apiclient-perl: 3.4.0

libpve-cluster-api-perl: 9.0.6

libpve-cluster-perl: 9.0.6

libpve-common-perl: 9.0.10

libpve-guest-common-perl: 6.0.2

libpve-http-server-perl: 6.0.4

libpve-network-perl: 1.1.8

libpve-rs-perl: 0.10.10

libpve-storage-perl: 9.0.13

libspice-server1: 0.15.2-1+b1

lvm2: 2.03.31-2+pmx1

lxc-pve: 6.0.5-1

lxcfs: 6.0.4-pve1

novnc-pve: 1.6.0-3

proxmox-backup-client: 4.0.15-1

proxmox-backup-file-restore: 4.0.15-1

proxmox-backup-restore-image: 1.0.0

proxmox-firewall: 1.1.2

proxmox-kernel-helper: 9.0.4

proxmox-mail-forward: 1.0.2

proxmox-mini-journalreader: 1.6

proxmox-offline-mirror-helper: 0.7.2

proxmox-widget-toolkit: 5.0.5

pve-cluster: 9.0.6

pve-container: 6.0.13

pve-docs: 9.0.8

pve-edk2-firmware: 4.2025.02-4

pve-esxi-import-tools: 1.0.1

pve-firewall: 6.0.3

pve-firmware: 3.16-4

pve-ha-manager: 5.0.4

pve-i18n: 3.6.0

pve-qemu-kvm: 10.0.2-4

pve-xtermjs: 5.5.0-2

qemu-server: 9.0.22

smartmontools: 7.4-pve1

spiceterm: 3.4.1

swtpm: 0.8.0+pve2

vncterm: 1.9.1

zfsutils-linux: 2.3.4-pve1After

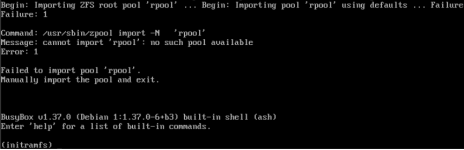

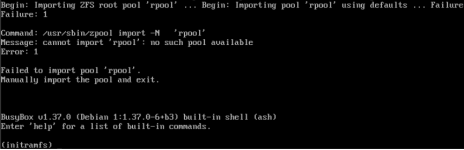

apt update + apt dist-upgrade + reboot, the VM tries to boot with kernel 6.17.2-2-pve. I eventually drops to initramfs complaining about not being able to import ZFS pool 'rpool':

At that point, if I just run `zpool import rpool`, the pool is imported correctly. Issuing `exit` leaves the emergency shell and the PVE VM boots correctly.

If I boot with kernel 6.14.11-4-pve, it boots correctly.

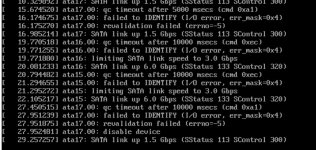
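For anyone hitting the same thing, here is a sketch of the manual recovery described above, plus one way to keep booting the 6.14 kernel until the issue is resolved. The `cat /proc/partitions` and bare `zpool import` lines are optional checks (not part of the steps above) for seeing whether the disks and the pool are visible yet. Pinning uses `proxmox-boot-tool`; the version string must match one shown by `proxmox-boot-tool kernel list` on your system.

```shell
# --- Inside the initramfs emergency shell, after the failed rpool import ---
# Optional: check whether the kernel has registered the mirror's disks yet
cat /proc/partitions
# Optional: list pools visible for import (rpool is missing while the disks are absent)
zpool import
# Import the root pool by name, then leave the shell so the boot continues
zpool import rpool
exit

# --- On the booted PVE VM: keep booting the 6.14 kernel for now ---
proxmox-boot-tool kernel list
proxmox-boot-tool kernel pin 6.14.11-4-pve
proxmox-boot-tool refresh
# Later, to go back to booting the newest installed kernel:
# proxmox-boot-tool kernel unpin
```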

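Checking the console output, it seems kernel 6.17 takes too long to initialize the disk controller and/or disks. In the excerpt below, nothing is logged between the ZFS module load at 2.19 s and the PCIe port deferred probe timeouts at 11.81 s: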

```
[ 1.394364] input: VirtualPS/2 VMware VMMouse as /devices/platform/i8042/serio1/input/input3
[ 1.529064] raid6: avx512x4 gen() 45744 MB/s
[ 1.546064] raid6: avx512x2 gen() 48837 MB/s
[ 1.563065] raid6: avx512x1 gen() 44950 MB/s
[ 1.580064] raid6: avx2x4 gen() 50702 MB/s
[ 1.597064] raid6: avx2x2 gen() 50377 MB/s
[ 1.614064] raid6: avx2x1 gen() 42054 MB/s
[ 1.614259] raid6: using algorithm avx2x4 gen() 50702 MB/s
[ 1.631065] raid6: .... xor() 5329 MB/s, rmw enabled
[ 1.631251] raid6: using avx512x2 recovery algorithm
[ 1.632638] xor: automatically using best checksumming function avx
[ 1.699734] Btrfs loaded, zoned=yes, fsverity=yes
[ 1.718410] spl: loading out-of-tree module taints kernel.
[ 1.745494] zfs: module license 'CDDL' taints kernel.
[ 1.746085] Disabling lock debugging due to kernel taint
[ 1.746380] zfs: module license taints kernel.
[ 2.189144] ZFS: Loaded module v2.3.4-pve1, ZFS pool version 5000, ZFS filesystem version 5
[ 11.809165] pcieport 0000:00:1c.0: deferred probe timeout, ignoring dependency
[ 11.813220] pcieport 0000:00:1c.0: PME: Signaling with IRQ 24
[ 11.813971] pcieport 0000:00:1c.0: AER: enabled with IRQ 24
[ 11.814988] pcieport 0000:00:1c.1: deferred probe timeout, ignoring dependency
[ 11.817551] pcieport 0000:00:1c.1: PME: Signaling with IRQ 25
[ 11.818197] pcieport 0000:00:1c.1: AER: enabled with IRQ 25
[ 11.819122] pcieport 0000:00:1c.2: deferred probe timeout, ignoring dependency
[ 11.821933] pcieport 0000:00:1c.2: PME: Signaling with IRQ 26
[ 11.822533] pcieport 0000:00:1c.2: AER: enabled with IRQ 26
[ 11.823566] pcieport 0000:00:1c.3: deferred probe timeout, ignoring dependency
[ 11.825508] pcieport 0000:00:1c.3: PME: Signaling with IRQ 27
[ 11.826150] pcieport 0000:00:1c.3: AER: enabled with IRQ 27
[ 11.827242] pcieport 0000:00:1e.0: deferred probe timeout, ignoring dependency
[ 11.827569] pcieport 0000:05:01.0: deferred probe timeout, ignoring dependency
[ 11.827904] shpchp 0000:05:01.0: deferred probe timeout, ignoring dependency
```

If I try to `zpool import rpool` before kernel 6.17 initializes the disks, no rpool is found.

It seems there's some incompatibility between `machine: q35,viommu=virtio` and kernel 6.17. I've attached the full dmesg log of a failure.

EDIT: with `machine: q35,viommu=intel`, the VM boots correctly and I can use IOMMU on the nested VMs for training purposes.
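If you prefer to make that change from the outer host's CLI instead of the web UI, a minimal sketch follows. VMID `100` is a placeholder. `qm reboot` is used because it does a full stop/start and applies pending changes, whereas a reboot from inside the guest should keep the running QEMU process and the old machine setting.

```shell
# Run on the outer PVE host; 100 is a placeholder VMID for one nested PVE VM.

# Show the current machine line (expected here: machine: q35,viommu=virtio)
qm config 100 | grep '^machine:'

# Switch the vIOMMU variant from virtio to Intel (the Intel vIOMMU requires q35)
qm set 100 --machine q35,viommu=intel

# Apply the pending change with a full stop/start of the VM
qm reboot 100
```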

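To put a number on the stall instead of eyeballing it, a small POSIX shell/awk sketch can scan `dmesg`-style output and report the largest jump between consecutive timestamps, together with the lines on either side. The function name `largest_gap` is hypothetical, and it assumes the default `[ seconds] message` timestamp format (not `dmesg -T`).

```shell
#!/bin/sh
# largest_gap (hypothetical helper): reads dmesg-style lines such as
#   [   11.809165] pcieport 0000:00:1c.0: deferred probe timeout, ...
# on stdin and prints three lines:
#   1) the largest gap in seconds between consecutive timestamps
#   2) the line just before the gap
#   3) the line just after the gap
# Lines without a leading "[ seconds]" timestamp are ignored.
largest_gap() {
    awk '
    BEGIN { n = 0; max = -1 }
    match($0, /^\[ *[0-9]+\.[0-9]+\]/) {
        # Strip the surrounding "[" and "]" and convert to a number
        ts = substr($0, RSTART + 1, RLENGTH - 2) + 0
        if (n > 0 && ts - prev > max) {
            max = ts - prev
            before = prev_line
            after = $0
        }
        prev = ts
        prev_line = $0
        n++
    }
    END {
        # At least two timestamped lines are needed to have a gap
        if (n >= 2) printf "%.6f\n%s\n%s\n", max, before, after
    }'
}
```

On a VM recovered from the emergency shell, `dmesg | largest_gap` should show it directly. Applied to the excerpt above, it points at the jump from the ZFS module load at 2.189144 to the first deferred probe timeout at 11.809165, i.e. 9.620021 s.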