Hello everyone,

I have been a silent reader here in the forum for quite some time. Since there are already threads on so many topics/problems here, that has always been sufficient so far.

I am now facing a problem that I cannot quite understand. There are some similar threads on the topic, but I have not found anything that directly applies to my situation.

I have three nodes in my PVE environment. On one of them (pve1) there is a PBS VM (I know that running PBS as a VM is not ideal), which I replicate to my other two nodes. Replication to one of the nodes has been failing for some time now. Replication to the other node kept running for a few more days, but it is now failing as well.

Of course, I wanted to get to the bottom of this, but I can't find the source of the problem.

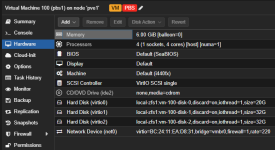

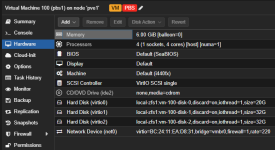

Here is the hardware of the PBS-VM:

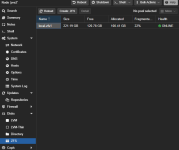

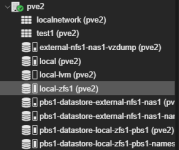

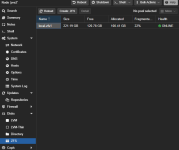

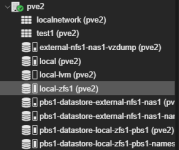

Both replication targets have a similar configuration. Here is what I see on one of them (pve2):

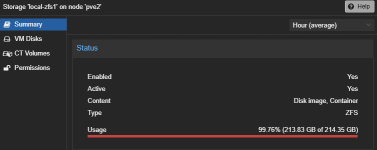

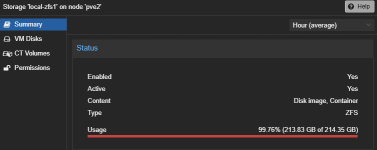

Under Disks > ZFS, there is a pool called “local-zfs1” (the replication target) that is about half full:

However, when I go to the “local-zfs1” storage, the overview shows me that the storage is completely full:

I don't understand how this difference arises and whether that might be the problem.

I hope someone can help me with this.

Thanks a lot.

Deco20

Replication log of the failing job to pve2:

2025-10-14 18:20:08 100-0: start replication job

2025-10-14 18:20:08 100-0: guest => VM 100, running => 1680

2025-10-14 18:20:08 100-0: volumes => local-zfs1:vm-100-disk-0,local-zfs1:vm-100-disk-1,local-zfs1:vm-100-disk-2

2025-10-14 18:20:09 100-0: freeze guest filesystem

2025-10-14 18:20:09 100-0: create snapshot '__replicate_100-0_1760458808__' on local-zfs1:vm-100-disk-0

2025-10-14 18:20:09 100-0: create snapshot '__replicate_100-0_1760458808__' on local-zfs1:vm-100-disk-1

2025-10-14 18:20:09 100-0: create snapshot '__replicate_100-0_1760458808__' on local-zfs1:vm-100-disk-2

2025-10-14 18:20:09 100-0: thaw guest filesystem

2025-10-14 18:20:09 100-0: using secure transmission, rate limit: none

2025-10-14 18:20:09 100-0: incremental sync 'local-zfs1:vm-100-disk-0' (__replicate_100-0_1760389801__ => __replicate_100-0_1760458808__)

2025-10-14 18:20:10 100-0: send from @__replicate_100-0_1760389801__ to local-zfs1/vm-100-disk-0@__replicate_100-0_1760458808__ estimated size is 3.94G

2025-10-14 18:20:10 100-0: total estimated size is 3.94G

2025-10-14 18:20:10 100-0: TIME SENT SNAPSHOT local-zfs1/vm-100-disk-0@__replicate_100-0_1760458808__

2025-10-14 18:20:11 100-0: 18:20:11 65.0M local-zfs1/vm-100-disk-0@__replicate_100-0_1760458808__

2025-10-14 18:20:12 100-0: 18:20:12 177M local-zfs1/vm-100-disk-0@__replicate_100-0_1760458808__

2025-10-14 18:20:13 100-0: 18:20:13 289M local-zfs1/vm-100-disk-0@__replicate_100-0_1760458808__

2025-10-14 18:20:14 100-0: 18:20:14 401M local-zfs1/vm-100-disk-0@__replicate_100-0_1760458808__

2025-10-14 18:20:15 100-0: 18:20:15 513M local-zfs1/vm-100-disk-0@__replicate_100-0_1760458808__

2025-10-14 18:20:16 100-0: 18:20:16 625M local-zfs1/vm-100-disk-0@__replicate_100-0_1760458808__

2025-10-14 18:20:17 100-0: 18:20:17 737M local-zfs1/vm-100-disk-0@__replicate_100-0_1760458808__

2025-10-14 18:20:18 100-0: 18:20:18 849M local-zfs1/vm-100-disk-0@__replicate_100-0_1760458808__

2025-10-14 18:20:18 100-0: cannot receive incremental stream: out of space

2025-10-14 18:20:18 100-0: command 'zfs recv -F -- local-zfs1/vm-100-disk-0' failed: exit code 1

2025-10-14 18:20:18 100-0: warning: cannot send 'local-zfs1/vm-100-disk-0@__replicate_100-0_1760458808__': signal received

2025-10-14 18:20:18 100-0: cannot send 'local-zfs1/vm-100-disk-0': I/O error

2025-10-14 18:20:18 100-0: command 'zfs send -Rpv -I __replicate_100-0_1760389801__ -- local-zfs1/vm-100-disk-0@__replicate_100-0_1760458808__' failed: exit code 1

2025-10-14 18:20:18 100-0: delete previous replication snapshot '__replicate_100-0_1760458808__' on local-zfs1:vm-100-disk-0

2025-10-14 18:20:18 100-0: delete previous replication snapshot '__replicate_100-0_1760458808__' on local-zfs1:vm-100-disk-1

2025-10-14 18:20:18 100-0: delete previous replication snapshot '__replicate_100-0_1760458808__' on local-zfs1:vm-100-disk-2

2025-10-14 18:20:18 100-0: end replication job with error: command 'set -o pipefail && pvesm export local-zfs1:vm-100-disk-0 zfs - -with-snapshots 1 -snapshot __replicate_100-0_1760458808__ -base __replicate_100-0_1760389801__ | /usr/bin/ssh -e none -o 'BatchMode=yes' -o 'HostKeyAlias=pve2' -o 'UserKnownHostsFile=/etc/pve/nodes/pve2/ssh_known_hosts' -o 'GlobalKnownHostsFile=none' root@192.168.178.22 -- pvesm import local-zfs1:vm-100-disk-0 zfs - -with-snapshots 1 -snapshot __replicate_100-0_1760458808__ -allow-rename 0 -base __replicate_100-0_1760389801__' failed: exit code 255
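
The log shows `zfs recv` on the target failing with "out of space", so the space accounting on pve2 looks like the thing to check. `zpool list` reports raw allocation at the pool level, while `zfs list` reports dataset-level usage, which also counts space held by snapshots and any `refreservation` on the VM disks. Whether that explains the difference here is only a guess. Below is a minimal sketch for getting a per-dataset breakdown. The helper names (`zfs_space`, `parse_zfs_list`, `report`) are made up for illustration, and the pool name `local-zfs1` is taken from the post.

```python
import subprocess

# Columns requested from `zfs list`; -H drops the header line and
# -p prints exact byte counts instead of human-readable sizes.
COLUMNS = ["name", "used", "avail", "usedbysnapshots", "usedbyrefreservation"]


def zfs_space(pool):
    """Run `zfs list` recursively on the pool and return its raw output.

    Has to be run as root on the node that holds the pool (e.g. pve2).
    """
    cmd = ["zfs", "list", "-Hp", "-r", "-o", ",".join(COLUMNS), pool]
    return subprocess.run(cmd, check=True, capture_output=True, text=True).stdout


def parse_zfs_list(text):
    """Parse tab-separated `zfs list -Hp` output into a list of dicts."""
    rows = []
    for line in text.splitlines():
        fields = line.split("\t")
        if len(fields) != len(COLUMNS):
            continue  # skip blank or unexpected lines
        row = {"name": fields[0]}
        for key, value in zip(COLUMNS[1:], fields[1:]):
            # ZFS prints "-" where a property does not apply; treat it as 0
            row[key] = int(value) if value.isdigit() else 0
        rows.append(row)
    return rows


def report(rows):
    """Format one summary line per dataset, with sizes in GiB."""
    def gib(n):
        return f"{n / 2**30:.1f}G"

    return [
        f"{r['name']}: used={gib(r['used'])} avail={gib(r['avail'])} "
        f"snapshots={gib(r['usedbysnapshots'])} "
        f"refreservation={gib(r['usedbyrefreservation'])}"
        for r in rows
    ]
```

On the target node this could be used as `print("\n".join(report(parse_zfs_list(zfs_space("local-zfs1")))))`. If the disks show a large `refreservation` or snapshot share while `avail` on the pool's root dataset is close to zero, that would match a storage view that shows "full" although the pool itself is only half allocated.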
