Hi, I recently got hold of a second server. I've installed PVE on it, and I also plan to run PBS on it as a VM. (PBS itself isn't really relevant here, but I'll refer to it as "the VM". Long story short, I didn't want to run PBS on bare metal because I'd be wasting most of the hardware if it only ran PBS.)

The server in question is a Dell PowerEdge R720 with 2.5-inch drive bays, all of them filled.

The current disk configuration is 8× 240.06 GB SSDs and another 8× 960.20 GB SSDs.

I originally planned to put all of them in one big RAIDZ pool, but I realized that would waste space, because ZFS would treat the 960 GB drives as if they were only 240 GB drives.
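The space trade-off above can be checked with a rough calculation. This is a minimal sketch that assumes single-parity RAIDZ (RAIDZ1) and ignores ZFS metadata, reserved slop space, and allocation overhead; `raidz1_usable_gb` is a hypothetical helper written for illustration, not a ZFS or Proxmox tool.

```python
# Nominal usable capacity of a single-parity RAIDZ (RAIDZ1) vdev.
# Assumption: ignores ZFS metadata, slop space, and allocation/padding overhead.
def raidz1_usable_gb(disk_sizes_gb):
    # Every member is treated as the size of the smallest disk,
    # and one disk's worth of space goes to parity.
    smallest = min(disk_sizes_gb)
    return smallest * (len(disk_sizes_gb) - 1)

mixed = [240.06] * 8 + [960.20] * 8
separate_small = raidz1_usable_gb([240.06] * 8)
separate_large = raidz1_usable_gb([960.20] * 8)

print(f"one 16-disk RAIDZ1:  {raidz1_usable_gb(mixed):.0f} GB")          # prints 3601 GB
print(f"two 8-disk RAIDZ1s: {separate_small + separate_large:.0f} GB")   # prints 8402 GB
```

On these nominal numbers, splitting the drives by size keeps far more usable space than one mixed-size vdev, and the 960 GB pool alone comes out at roughly 7 × 960.20 ≈ 6721 GB.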

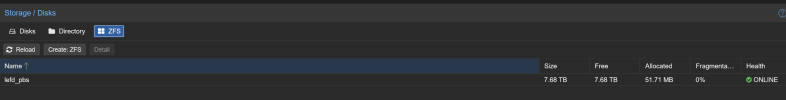

So instead I put all the 960 GB drives in their own RAIDZ pool (separate from the 240 GB drives) and did the same with the smaller SSDs, leaving me with two RAIDZ pools.

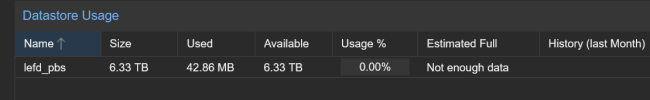

Then, in my PBS VM, I tried to allocate every last bit of storage from the pbs_storage pool (the 960 GB SSDs). When I entered the pool's full capacity in GB, it said it was out of storage.

I then entered 4000 GB and saw that the pool's usage was about 5.7 TB (IIRC). That seems abnormal to me, since I expected it to use about 4 TB, and I have no idea why the reported usage is so much higher than the disk size.

It turned out the largest VM disk I could create was about 4607 GB (if I remember correctly), out of the pool's 6720 GB.

Why is this happening, and is there a way to correct it? The discrepancy between VM disk size and pool usage doesn't seem to happen with RAID10, but I don't want to use RAID10 because it would leave me only 3.70 TB of usable space out of the SSDs' 7681 GB total, whereas RAIDZ gives me 6720 GB. Is RAID10 the only way to fix this? I'm not sure what to do from here.
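For reference, the nominal capacity comparison for the 960 GB pool can be sketched like this. It assumes RAIDZ means RAIDZ1 and RAID10 means two-way striped mirrors, and it deliberately ignores ZFS metadata, slop space, and allocation/padding overhead, so it will not exactly match the figures the Proxmox GUI reports. The helper names are hypothetical.

```python
# Hypothetical helpers: nominal usable space for an 8 x 960.20 GB pool.
# Assumption: ignores ZFS metadata, slop space, and allocation/padding overhead.
GB = 10**9   # drive vendors count in decimal gigabytes
TIB = 2**40  # one binary tebibyte

def raidz1_usable_gb(disk_sizes_gb):
    # Single parity: one disk's worth of space holds parity.
    return min(disk_sizes_gb) * (len(disk_sizes_gb) - 1)

def striped_mirrors_usable_gb(disk_sizes_gb):
    # RAID10-style two-way mirrors: half of the disks hold copies.
    return min(disk_sizes_gb) * (len(disk_sizes_gb) // 2)

disks = [960.20] * 8
layouts = {
    "raw": sum(disks),
    "RAIDZ1": raidz1_usable_gb(disks),
    "RAID10": striped_mirrors_usable_gb(disks),
}
for name, usable_gb in layouts.items():
    print(f"{name:<7} {usable_gb:8.1f} GB = {usable_gb * GB / TIB:5.2f} TiB")
```

Printing both decimal GB and binary TiB helps show how much of any gap between expected and displayed sizes comes from units alone, as opposed to actual space consumed by the pool.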
