ZFS mirror various disk size

- Thread starter rafnizp

- Start date

One way would be to install it in ZFS RAID 0 mode on the smaller disk first. Then, once the system is up and running, follow the "Changing a failed bootable device" procedure in the Proxmox VE Admin guide.

The only difference: instead of zpool replace, you use zpool attach rpool <already installed disk> <new disk>.

You can get the list of the currently used disks with zpool status. When you attach the disk, choose it via its /dev/disk/by-id path. There it is also exposed by manufacturer, model and serial number. Having those in the ZFS status output makes it much faster to figure out which disk has an issue.

In the end you should have a mirrored rpool and the bootloader on both disks, so the system will boot if one fails.
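A minimal sketch of the attach step, written as a dry run that only prints the command so it can be reviewed first. The helper name attach_cmd and the device names in the example call are placeholders, not values from a real system.

```shell
#!/bin/sh
# Dry-run helper: prints the zpool attach command instead of running it.
# attach_cmd is a hypothetical name; review the output before executing it.
attach_cmd() {
    pool=$1      # e.g. rpool
    existing=$2  # device name exactly as shown by `zpool status`
    new=$3       # new device, given as a /dev/disk/by-id/... path
    case $new in
        /dev/disk/by-id/*) ;;
        *) echo "error: pass the new disk as a /dev/disk/by-id path" >&2
           return 1 ;;
    esac
    echo "zpool attach $pool $existing $new"
}

# Example with placeholder device names:
attach_cmd rpool OLD-DISK-part3 /dev/disk/by-id/NEW-DISK-part3
```

Refusing anything that is not a by-id path is a design choice of this sketch, not a ZFS requirement; it just keeps the stable names in the zpool status output.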

> For proxmox installation I would like to use only 40GB from each disk and this to be set as mirrored zpool

Then you can set the "hdsize" parameter during the installation. See https://pve.proxmox.com/pve-docs/pve-admin-guide.html#advanced_zfs_options

> Rest of disk space from first drive I want to use for logs and from second for swap

Why an extra partition for logs?

Using non-redundant swap does not sound like a good idea. What happens if that disk fails?

Also, may I ask what kind of disks (model, manufacturer, ...) you plan to use?

Hi Aaron,

I'm planning to do the same:

1x2TB NVME

1x4TB NVME

Mirroring 100GB from each and leaving the rest unpartitioned so I can use it for other things.

For this, based on your instruction, I would have to:

1. Choose the 2TB for ZFS RAID0 during installation

2. Use hdsize = 100GB

3. Partition the 4TB to 100GB and leave the rest untouched

4. Follow the "Changing a failed bootable device" procedure in the Proxmox VE Admin guide and attach the 100GB partition from the 4TB NVME to the existing 100GB RAID0

Is this the correct procedure?

And just to confirm, I can use the rest of the storage on both NVME to build an LVM VG or any other usage right?

> 3. Partition the 4TB to 100GB and the rest untouched

Doesn't matter, as you are wiping the partition table in step 4 anyway to make the second disk bootable. You would have to extend the partitions after doing step 4.

> And just to confirm, I can use the rest of the storage on both NVME to build an LVM VG or any other usage right?

Yes.
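For the leftover space, something along these lines might work once the mirror is in place. This is only a dry-run sketch that prints the commands; the helper name lvm_on_free_space, the partition number 4 and the volume group name datavg are assumptions for illustration and need to be checked against the actual partition table.

```shell
#!/bin/sh
# Dry run: prints the commands for turning the unused tail of a disk into
# an LVM physical volume and volume group. Nothing is executed.
# lvm_on_free_space and the VG name are hypothetical.
lvm_on_free_space() {
    disk=$1   # whole-disk path, e.g. /dev/disk/by-id/<disk>
    part=$2   # free partition number to create, e.g. 4
    vg=$3     # name for the new volume group
    # start 0 and end 0 let sgdisk use the largest free block;
    # 8e00 is the gdisk type code for Linux LVM
    echo "sgdisk -n $part:0:0 -t $part:8e00 $disk"
    echo "pvcreate $disk-part$part"
    echo "vgcreate $vg $disk-part$part"
}

# Example with placeholder names:
lvm_on_free_space /dev/disk/by-id/NEW-DISK 4 datavg
```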

> One way would be to install it in ZFS RAID 0 mode on the smaller disk first. Then, once the system is up and running, follow the "Changing a failed bootable device" procedure in the Proxmox VE Admin guide.

> The only difference: instead of zpool replace, you use zpool attach rpool <already installed disk> <new disk>.

> You can get the list of the currently used disks with zpool status. When you attach the disk, choose it via its /dev/disk/by-id path. There it is also exposed by manufacturer, model and serial number. Having those in the ZFS status output makes it much faster to figure out which disk has an issue.

> In the end you should have a mirrored rpool and the bootloader on both disks, so the system will boot if one fails.

I've been reading and reading and can't get this to work.

When I do zpool status I get the following:

Code:

pool: rpool

state: ONLINE

config:

NAME STATE READ WRITE CKSUM

rpool ONLINE 0 0 0

nvme-eui.000000000000000100a0752446a4a08c-part3 ONLINE 0 0 0

errors: No known data errors

I don't understand why that isn't a physical disk or why it says part3 at the end. I can't figure out how to properly choose and attach a disk using zpool attach rpool <current disk> <new disk to add>.

The manuals don't explain this stuff or have the correct syntax shown. It is very confusing for someone who is not familiar with the commands/usage already. It would be nice if the proxmox manual, for example, said how to list devices and how to choose them to add them using zpool.

I did cd /dev/disk/by-id and then ls

and got:

Code:

nvme-eui.000000000000000100a0752446a4a08c

nvme-eui.000000000000000100a0752446a4a08c-part1

nvme-eui.000000000000000100a0752446a4a08c-part2

nvme-eui.000000000000000100a0752446a4a08c-part3

nvme-INTEL_SSDPE2KX010T8_BTLJ908508XJ1P0FGN

nvme-INTEL_SSDPE2KX010T8_BTLJ908508XJ1P0FGN_1

nvme-INTEL_SSDPE2KX010T8_BTLJ908508XJ1P0FGN_1-part1

nvme-INTEL_SSDPE2KX010T8_BTLJ908508XJ1P0FGN_1-part9

nvme-INTEL_SSDPE2KX010T8_BTLJ908508XJ1P0FGN-part1

nvme-INTEL_SSDPE2KX010T8_BTLJ908508XJ1P0FGN-part9

nvme-Micron_7450_MTFDKCB800TFS_240446A4A08C

nvme-Micron_7450_MTFDKCB800TFS_240446A4A08C_1

nvme-Micron_7450_MTFDKCB800TFS_240446A4A08C_1-part1

nvme-Micron_7450_MTFDKCB800TFS_240446A4A08C_1-part2

nvme-Micron_7450_MTFDKCB800TFS_240446A4A08C_1-part3

nvme-Micron_7450_MTFDKCB800TFS_240446A4A08C-part1

nvme-Micron_7450_MTFDKCB800TFS_240446A4A08C-part2

nvme-Micron_7450_MTFDKCB800TFS_240446A4A08C-part3

nvme-nvme.8086-42544c4a393038353038584a31503046474e-494e54454c205353445045324b583031305438-00000001

nvme-nvme.8086-42544c4a393038353038584a31503046474e-494e54454c205353445045324b583031305438-00000001-part1

nvme-nvme.8086-42544c4a393038353038584a31503046474e-494e54454c205353445045324b583031305438-00000001-part9

usb-Generic_Flash_Disk_A38EC09C-0:0

usb-Generic_Flash_Disk_A38EC09C-0:0-part1

I only have 2 NVMe disks installed. They are installed behind a PCIe NVMe switch. Then of course a USB flash disk is inserted at the moment.

Hopefully the answer to this will help others who have drives that are not the same size and trying to zfs mirror them. Thank you.

You can't add a disk without formatting it first and cloning the bootloader. And that's partition 3 of your physical disk, because partitions 1 and 2 are needed for the bootloader. See chapter "Changing a failed bootable device", but instead of "zpool replace ..." you use "zpool attach" for a raid1: https://pve.proxmox.com/wiki/ZFS_on_Linux#_zfs_administration

If you really want a raid0 instead of a raid1 (not recommended!) you could skip the partitioning/bootloader part and directly add the whole disk with the "zpool add" command.

Thank you for that information. There wasn't a mention of needing to format it and clone the bootloader in the previous post to which I was replying.

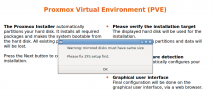

I am trying to get a mirror of the system bootdisk going. I have 2 disks but they are not equal size, so the installer was whiny about it. It would be really nice if the installer could replace the "you can't do this" message with "you can do this, but you will only get the size of the smaller disk." This would be a huge improvement and benefit a lot of people I am sure.

Could someone walk us through how to move forward getting a zfs mirror going starting from the point of 1 disk that proxmox was installed onto in zfs "raid 0" mode? I don't know how to clone the bootloader and otherwise prepare it to use the zpool attach command. I'm not 100% sure on what syntax to use for the attach command when I get to that point either. I think running through it would benefit a lot of people though, if someone is willing to do so.

As I already said, it's the same as replacing a failed disk of a mirror; you just don't replace a disk but add a new one. See the article linked above. So:

Changing a failed bootable device

Depending on how Proxmox VE was installed, it is either using systemd-boot or GRUB through proxmox-boot-tool, or plain GRUB as bootloader (see Host Bootloader). You can check by running:

# proxmox-boot-tool status

The first steps of copying the partition table, reissuing GUIDs and replacing the ZFS partition are the same. To make the system bootable from the new disk, different steps are needed which depend on the bootloader in use.

# sgdisk <healthy bootable device> -R <new device>

# sgdisk -G <new device>

# zpool attach -f <pool> <part3 of existing disk> <part3 of new disk>

Use the zpool status -v command to monitor how far the resilvering process of the new disk has progressed.

With proxmox-boot-tool:

# proxmox-boot-tool format <new disk's ESP (part2)>

# proxmox-boot-tool init <new disk's ESP (part2)> [grub]

ESP stands for EFI System Partition, which is set up as partition #2 on bootable disks set up by the Proxmox VE installer since version 5.4. For details, see Setting up a new partition for use as synced ESP.

Make sure to pass grub as mode to proxmox-boot-tool init if proxmox-boot-tool status indicates your current disks are using GRUB, especially if Secure Boot is enabled!

With plain GRUB:

# grub-install <new disk>

Plain GRUB is only used on systems installed with Proxmox VE 6.3 or earlier, which have not been manually migrated to using proxmox-boot-tool yet.
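Put together for the proxmox-boot-tool case, the sequence could be sketched as a dry run like the one below. It only prints the commands in order; mirror_plan and the device names are placeholders, and the -part2/-part3 suffixes assume the partition layout created by the Proxmox VE installer. If proxmox-boot-tool status reports GRUB, grub would need to be appended to the init line.

```shell
#!/bin/sh
# Dry run of the mirror-attach procedure for a system that boots through
# proxmox-boot-tool. It only prints commands; nothing is executed.
# mirror_plan and its arguments are hypothetical names.
mirror_plan() {
    pool=$1   # e.g. rpool
    vdev=$2   # existing ZFS partition, named as in `zpool status`
    old=$3    # whole-disk path of the installed disk
    new=$4    # whole-disk path of the disk to add
    echo "sgdisk $old -R $new"                     # copy partition table to the new disk
    echo "sgdisk -G $new"                          # randomize GUIDs on the copy
    echo "zpool attach -f $pool $vdev $new-part3"  # turn the single vdev into a mirror
    echo "proxmox-boot-tool format $new-part2"     # prepare the new ESP
    echo "proxmox-boot-tool init $new-part2"       # install the bootloader on it
}

# Example with placeholder names:
mirror_plan rpool OLD-part3 /dev/disk/by-id/OLD /dev/disk/by-id/NEW
```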

Code:

:~# proxmox-boot-tool status

Re-executing '/usr/sbin/proxmox-boot-tool' in new private mount namespace..

System currently booted with uefi

77EB-8D67 is configured with: uefi (versions: 6.8.4-2-pve)

I'm assuming this is systemd-boot?

Do you know why 4 nvme devices are listed when I only have 2 physical disks installed? I was surprised that rpool says it's on one of these 2 devices and not the physical disk that it's actually on (the Micron).

Would I then do:

sgdisk nvme-eui.000000000000000100a0752446a4a08c -R nvme-INTEL_SSDPE2KX010T8_BTLJ908508XJ1P0FGN

or would it be

sgdisk nvme0n1 -R nvme1n1

So I would do one of the commands above? And then sgdisk -G <samenewdevice> ?

I don't see a part3 on the Intel disk yet. Will that be created with sgdisk?

I'm willing to try this, just want to make sure I'm doing it right and won't mess anything up on a working system right now. I really appreciate your help.

> I'm assuming this is systemd-boot?

Correct.
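If you want to check this in a script, a small filter over the status output might look like the following. boot_mode is a hypothetical helper, and it only understands the "is configured with:" line format quoted in this thread; the assumption is that a GRUB setup reports grub in the same position.

```shell
#!/bin/sh
# Reads `proxmox-boot-tool status` output on stdin and prints the mode
# reported for the first configured ESP: "uefi", "grub", or "unknown".
# boot_mode is a hypothetical helper based on the output shown in this thread.
boot_mode() {
    mode=$(sed -n 's/.*is configured with: \([a-z]*\).*/\1/p' | head -n 1)
    echo "${mode:-unknown}"
}

# On a live system: proxmox-boot-tool status | boot_mode
# Here, fed with the line from the post above:
echo "77EB-8D67 is configured with: uefi (versions: 6.8.4-2-pve)" | boot_mode
```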

> Do you know why 4 nvme devices are listed when I only have 2 physical disks installed?

Use "ls -la /dev/disk/by-id" instead of your "cd /dev/disk/by-id; ls". An SSD can have multiple IDs pointing to the same SSD.
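To make the aliases easier to see, the symlinks can be grouped by the device they point to. This is a small sketch; list_aliases is a hypothetical helper name, and it assumes readlink -f is available (it is part of GNU coreutils).

```shell
#!/bin/sh
# Prints "<real device> <by-id name>" for every symlink in a directory,
# sorted so that all names pointing at the same device end up together.
# list_aliases is a hypothetical helper; the directory defaults to
# /dev/disk/by-id.
list_aliases() {
    dir=${1:-/dev/disk/by-id}
    for link in "$dir"/*; do
        [ -L "$link" ] || continue
        printf '%s %s\n' "$(readlink -f "$link")" "$(basename "$link")"
    done | sort
}

list_aliases
```

With two physical NVMe disks, all the eui, model-serial and nvme.8086 names should collapse onto two real devices plus their partitions.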

> I don't see a part3 on the Intel disk yet. Will that be created with sgdisk?

Yes. It will clone the partition table and both disks will have the same partitions. So you might want to install PVE to the smaller one so cloning won't fail because of missing space.

I tried sgdisk -R but am getting the following:

Code:

root@x:~# sgdisk nvme-Micron_7450_MTFDKCB800TFS_240446A4A08C -R nvme-INTEL_SSDPE2KX010T8_BTLJ908508XJ1P0FGN

Problem opening nvme-Micron_7450_MTFDKCB800TFS_240446A4A08C for reading! Error is 2.

The specified file does not exist!

root@x:~# sgdisk nvme0n0 -R nvme1n1

Problem opening nvme0n0 for reading! Error is 2.

The specified file does not exist!

Point it to the actual device: sgdisk /dev/disk/by-id/nvme-Micron_7450_MTFDKCB800TFS_240446A4A08C ...

# sgdisk <healthy bootable device> -R <new device>

# sgdisk -G <new device>
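The "does not exist" errors come from passing a bare name, which sgdisk looks up relative to the current directory. A small guard like this can catch that before any partitioning tool runs; by_id_path is a hypothetical helper, and the disk names in the usage comment are placeholders.

```shell
#!/bin/sh
# Turns a bare by-id name into a full path and checks that it exists.
# by_id_path is a hypothetical helper; the optional second argument points it
# at another directory and defaults to /dev/disk/by-id.
by_id_path() {
    name=$1
    dir=${2:-/dev/disk/by-id}
    if [ -e "$dir/$name" ]; then
        echo "$dir/$name"
    else
        echo "error: $dir/$name does not exist" >&2
        return 1
    fi
}

# Usage (placeholder names):
#   sgdisk "$(by_id_path OLD-DISK)" -R "$(by_id_path NEW-DISK)"
```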

Thank you so much! These reported "The operation has completed successfully"

On the zpool attach I'm getting "no such device in pool"

Code:

root@rx:~# sgdisk /dev/disk/by-id/nvme-Micron_7450_MTFDKCB800TFS_240446A4A08C -R /dev/disk/by-id/nvme-INTEL_SSDPE2KX010T8_BTLJ908508XJ1P0FGN

The operation has completed successfully.

root@x:~# sgdisk -G /dev/disk/by-id/nvme-INTEL_SSDPE2KX010T8_BTLJ908508XJ1P0FGN

The operation has completed successfully.

root@x:~# zpool attach -f rpool /dev/disk/by-id/nvme-Micron_7450_MTFDKCB800TFS_240446A4A08C-part3 /dev/disk/by-id/nvme-INTEL_SSDPE2KX010T8_BTLJ908508XJ1P0FGN-part3

cannot attach /dev/disk/by-id/nvme-INTEL_SSDPE2KX010T8_BTLJ908508XJ1P0FGN-part3 to /dev/disk/by-id/nvme-Micron_7450_MTFDKCB800TFS_240446A4A08C-part3: no such device in pool

root@x:~# zpool attach -f rpool nvme-Micron_7450_MTFDKCB800TFS_240446A4A08C-part3 nvme-INTEL_SSDPE2KX010T8_BTLJ908508XJ1P0FGN-part3

cannot attach nvme-INTEL_SSDPE2KX010T8_BTLJ908508XJ1P0FGN-part3 to nvme-Micron_7450_MTFDKCB800TFS_240446A4A08C-part3: no such device in pool

root@x:~# zpool attach -f rpool nvme0n1p3 nvme1n1p3

cannot attach nvme1n1p3 to nvme0n1p3: no such device in pool

Your pool only knows "nvme-eui.000000000000000100a0752446a4a08c-part3". So try something like: "zpool attach -f rpool nvme-eui.000000000000000100a0752446a4a08c-part3 /dev/disk/by-id/nvme-INTEL_SSDPE2KX010T8_BTLJ908508XJ1P0FGN-part3"
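To avoid guessing which name the pool knows, the device names can be pulled straight out of the zpool status output. The filter below is a rough sketch: pool_devices is a hypothetical helper, and it assumes the config table layout shown earlier in the thread (a NAME header row, then the pool, then its devices).

```shell
#!/bin/sh
# Reads `zpool status <pool>` output on stdin and prints the leaf device
# names exactly as the pool knows them.
# pool_devices is a hypothetical helper based on the output in this thread.
pool_devices() {
    pool=$1
    awk -v pool="$pool" '
        $1 == "NAME"    { in_cfg = 1; next }   # header row of the config table
        $1 == "errors:" { in_cfg = 0 }         # table ends before this line
        in_cfg && NF >= 2 && $1 != pool &&
            $1 !~ /^(mirror|raidz|spare|logs|cache)/ { print $1 }
    '
}

# On a live system: zpool status rpool | pool_devices rpool
# Here, fed with the output posted above:
printf '%s\n' \
    'NAME STATE READ WRITE CKSUM' \
    'rpool ONLINE 0 0 0' \
    'nvme-eui.000000000000000100a0752446a4a08c-part3 ONLINE 0 0 0' \
    'errors: No known data errors' | pool_devices rpool
```

The printed name is what goes in as the first device argument of zpool attach.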

That seems to have done it! Disks > ZFS > pool detail says it is resilvering
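The same progress can be followed from the shell. A minimal sketch, assuming zpool status prints a "resilver in progress" line in its scan section while the resilver is running; resilver_running is a hypothetical helper name.

```shell
#!/bin/sh
# Succeeds while the `zpool status` output on stdin reports a running resilver.
# resilver_running is a hypothetical helper; it only looks for the
# "resilver in progress" text in the scan line.
resilver_running() {
    grep -q 'resilver in progress'
}

# On a live system, wait until the mirror is fully synced:
#   while zpool status rpool | resilver_running; do sleep 30; done
#   zpool status -v rpool
```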

Sorry for being slow - the lack of documentation on syntax and referencing disks was difficult. I bet this will help a lot of people out. Thank you for taking the time to help explain and troubleshoot it with me.

> the lack of documentation on syntax and referencing disks was difficult.

Just read the correct documentation, e.g. familiarize yourself with the manpages (just type man zpool) or read the PVE docs instead of some stuff you read in the forums, on Reddit or elsewhere on the internet. Most people writing stuff, as always, don't know what they're saying or writing. They're no experts and "just want to share what they've learned" without understanding the underlying concepts, and just cause trouble.