Hello,

I'm installing Proxmox on a new production node in my home office soon. The hardware is powerful enough for my needs, but there's only one NVMe slot, so the boot NVMe will have to be a single-disk ZFS stripe. Not ideal, but sadly my effort of will has not manifested additional PCIe lanes and a second NVMe slot on this board.

At the very minimum, I need to install Proxmox on an NVMe drive and have enough room left over for a TrueNAS VM. The TrueNAS VM will be getting real physical HDDs and a pair of large NVMe drives via PCIe passthrough. This VM will come up before anything else and act as shared storage for my cluster. So I need the OS disk to be big enough to keep Proxmox itself happy and also hold a TrueNAS OS-only virtual disk.

How much space do I need for that?

I've got a 128 GB PCIe 3.0 x4 NVMe (consumer grade, but nice, and also never, ever used, so it has plenty of endurance)* and a 480 GiB FireCuda PCIe 3.0 x4 NVMe (much higher endurance, and known to work well as I've had it in service elsewhere for years).

My hesitation with the FireCuda is that I have two of them, and I'd rather keep them both free for setting up a mirror pool again some day. It's nice to have a pair of matched high quality drives available.

Either way, I'll be getting a syslog server set up, so I won't be logging directly to the OS drive. Between that and only having a TrueNAS VM on it (which itself doesn't write to its boot disk that much, by my understanding), writes to the OS drive should be minimal.

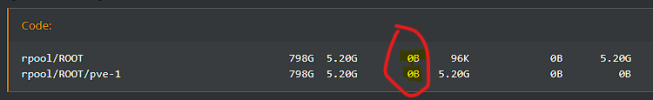

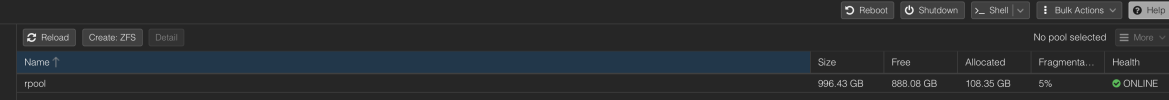

Here's the info on the 128 GB (119.2 GiB?) disk:

Code:

```
~% sudo lspci -nn -vvvv -s 57:00
[sudo] password for johntdavis:
57:00.0 Non-Volatile memory controller [0108]: Phison Electronics Corporation PS5013 E13 NVMe Controller [1987:5013] (rev 01) (prog-if 02 [NVM Express])
	Subsystem: Phison Electronics Corporation PS5013-E13 PCIe3 NVMe Controller (DRAM-less) [1987:5013]
	Control: I/O- Mem+ BusMaster+ SpecCycle- MemWINV- VGASnoop- ParErr- Stepping- SERR- FastB2B- DisINTx+
	Status: Cap+ 66MHz- UDF- FastB2B- ParErr- DEVSEL=fast >TAbort- <TAbort- <MAbort- >SERR- <PERR- INTx-
	Latency: 0, Cache Line Size: 64 bytes
	Interrupt: pin A routed to IRQ 18
	IOMMU group: 18
	Region 0: Memory at 85400000 (64-bit, non-prefetchable) [size=16K]
	Capabilities: [80] Express (v2) Endpoint, MSI 00
		DevCap:	MaxPayload 256 bytes, PhantFunc 0, Latency L0s unlimited, L1 unlimited
			ExtTag+ AttnBtn- AttnInd- PwrInd- RBE+ FLReset+ SlotPowerLimit 10W
		DevCtl:	CorrErr- NonFatalErr- FatalErr- UnsupReq-
			RlxdOrd+ ExtTag+ PhantFunc- AuxPwr- NoSnoop+ FLReset-
			MaxPayload 256 bytes, MaxReadReq 512 bytes
		DevSta:	CorrErr- NonFatalErr- FatalErr- UnsupReq- AuxPwr- TransPend-
		LnkCap:	Port #1, Speed 8GT/s, Width x4, ASPM L1, Exit Latency L1 unlimited
			ClockPM- Surprise- LLActRep- BwNot- ASPMOptComp+
		LnkCtl:	ASPM Disabled; RCB 64 bytes, Disabled- CommClk+
			ExtSynch- ClockPM- AutWidDis- BWInt- AutBWInt-
		LnkSta:	Speed 8GT/s, Width x1 (downgraded)
			TrErr- Train- SlotClk+ DLActive- BWMgmt- ABWMgmt-
	...
	Kernel driver in use: nvme
	Kernel modules: nvme
```

Thanks for any advice.
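
P.S. On the "128 GB (119.2 GiB?)" question mark above: here is a quick sanity check of that conversion. It is only a sketch, and it assumes the drive's label uses decimal gigabytes (10^9 bytes) while the tool reporting 119.2 uses binary gibibytes (2^30 bytes). The function name `gb_to_gib` is just something I made up for illustration.

```python
# Convert a marketed capacity in decimal GB to binary GiB.
# Assumption: the label means 10**9 bytes per GB, the OS tool means 2**30 bytes per GiB.
BYTES_PER_GB = 10**9
BYTES_PER_GIB = 2**30


def gb_to_gib(gb: float) -> float:
    """Return the capacity in GiB for a size given in decimal GB."""
    return gb * BYTES_PER_GB / BYTES_PER_GIB


if __name__ == "__main__":
    print(f"128 GB = {gb_to_gib(128):.1f} GiB")  # prints "128 GB = 119.2 GiB"
```

So under that assumption the 119.2 GiB figure is just the same 128 GB expressed in binary units, not missing capacity.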