For VMs, I am still expecting 4x 1.2TB SAS 2.5-inch 10K RPM drives in RAID 10, and a portion of the same pool of disks will be used to store SQL databases (after compression, these databases are also stored automatically in the cloud). Do I need to adjust the default volblocksize for RAID 10 as well?

8K should be fine for RAID 10. If your DBs are important and you want to maximize performance, you should check which block size your DBs are using. Sometimes they will write with 16K or 32K blocks, and it could be a little faster if your pool matches these.

But you really should consider using an SSD pool as storage for your VMs and DBs. HDDs are really crappy for parallel writes with lots of IOPS, even if they are SAS 10K disks.

Isn't what you are describing a RAID 10 that would be created automatically if I chose RAID10 as the type with 6 drives, or do you have to create the RAID 10 manually once you have more than 4 drives?

Yes, that is like RAID 10, but with 2 stripes of 3 HDDs in a mirror and not 3 stripes of a 2-HDD mirror. So everything is mirrored on 3 drives, and any two drives may fail without losing data.
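To make the two layouts concrete, here is a small sketch that only prints the zpool create command for each one, it does not run anything. The pool name YourPool and the disk names sda to sdf are placeholders, and on a real system you would probably use /dev/disk/by-id paths instead.

```shell
#!/bin/sh
# Print a zpool create command that groups the given disks into
# mirrors of $width disks each; ZFS stripes across the mirrors.
# Pool and disk names are hypothetical placeholders.
plan_striped_mirrors() {
    pool=$1; width=$2; shift 2
    cmd="zpool create $pool"
    i=0
    for disk in "$@"; do
        # Start a new mirror group every $width disks.
        if [ $((i % width)) -eq 0 ]; then
            cmd="$cmd mirror"
        fi
        cmd="$cmd $disk"
        i=$((i + 1))
    done
    echo "$cmd"
}

# 2 stripes of 3-way mirrors (any two drives may fail):
plan_striped_mirrors YourPool 3 sda sdb sdc sdd sde sdf
# 3 stripes of 2-way mirrors (classic RAID 10 style):
plan_striped_mirrors YourPool 2 sda sdb sdc sdd sde sdf
```

The first layout gives you the capacity of 2 drives, the second the capacity of 3 drives, so the extra redundancy costs one drive of usable space.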

So, how do I

- check the volblocksize that is currently being used (I noticed you mentioned 8K, but how can I check it)

- increase the volblocksize and measure which value works best?

You can set the volblocksize for a ZFS storage via the GUI: "Datacenter -> Storage -> select your ZFS Storage -> Edit -> Block Size". 8K is the default. Every zvol (virtual HDD for VMs) will be created with this block size as its "volblocksize". This value can only be set at creation and can't be changed later. If you want to change it for your existing zvols, you need to create new virtual HDDs (after you have changed the block size for the storage, of course), copy everything from the old zvols to the new ones and destroy the old ones. You can use dd to copy everything at block level. Use the search function or Google; there are some tutorials on how to do this, because this is a common ZFS beginner problem.
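The recreate-and-copy procedure could look roughly like the sketch below. It only prints the commands instead of running them, so nothing gets created or destroyed by accident. The dataset names (rpool/data/vm-100-disk-0 and so on), the 32G size and the 16K value are made-up placeholders, and the sequence is an assumption to adapt to your setup (with the VM shut down), not a tested recipe.

```shell
#!/bin/sh
# Print (not run) the steps for moving a zvol to a new volblocksize.
# All dataset names and sizes passed in are hypothetical placeholders.
plan_zvol_copy() {
    old=$1; new=$2; size=$3; vbs=$4
    # 1. New zvol with the wanted volblocksize (can only be set at creation).
    echo "zfs create -V $size -o volblocksize=$vbs $new"
    # 2. Block-level copy from the old zvol to the new one.
    echo "dd if=/dev/zvol/$old of=/dev/zvol/$new bs=1M status=progress"
    # 3. Only after verifying the copy: remove the old zvol.
    echo "zfs destroy $old"
}

plan_zvol_copy rpool/data/vm-100-disk-0 rpool/data/vm-100-disk-1 32G 16K
```

The new zvol must be at least as large as the old one, and the VM config still has to point at the new disk afterwards.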

You can use this to show the volblocksize of your zvols:

zfs get -t volume volblocksize

Does that mean I have to change ashift from 12 (I thought it was a good number for SSD disks) to a different number during storage creation, and if yes, what does that number depend on?

No, which ashift you choose depends on the drives you are using. If your HDDs all use a logical and physical sector size of 512B, you can choose ashift=9. That way ZFS would use a 512B block size. But keep in mind that most new drives use a sector size of 4K and 512B drives are getting rare. If you create a pool with ashift=9, you won't be able to replace a drive with a 4K sector size model later. A big benefit of ashift=9, though, would be that you could lower your volblocksize, for example to 4K, and that way reduce write amplification, because a guest's filesystem with a fixed 4K block size could write to a 4K pool instead of an 8K pool. It's always bad to write data with a smaller block size to storage with a larger block size.
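The ashift value is just the base-2 logarithm of the sector size, so 512B gives 9 and 4K gives 12. As a small illustration (the function name ashift_for is made up for this example), you could compute it like this. The sector sizes your drives report can usually be read with something like lsblk -o NAME,LOG-SEC,PHY-SEC.

```shell
#!/bin/sh
# ashift is the base-2 logarithm of the sector size ZFS will assume.
# ashift_for is a hypothetical helper name, not a ZFS tool.
ashift_for() {
    size=$1
    result=0
    # Halve the size until it reaches 1, counting the steps.
    while [ "$size" -gt 1 ]; do
        size=$((size / 2))
        result=$((result + 1))
    done
    echo "$result"
}

ashift_for 512    # prints 9
ashift_for 4096   # prints 12
ashift_for 8192   # prints 13
```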

And for SSDs that's complicated... most people will choose an ashift of 12 or 13, but you just need to test for yourself what gives the best performance with your SSDs. Most SSDs will tell you that they use a logical/physical sector size of 512B or 4K, but internally they can only write in much larger blocks (128K or something like that). And no manufacturer will tell you what is used internally.

I only asked which ashift you are using because that table calculates in sectors. If you are using ashift=12 (4K sectors) and 4 drives in raidz2 with a volblocksize of 8K, that would be cell B5 in the table, with 67% capacity loss. If ashift were 9, each sector would only be 512B, so the 8K volblocksize would be 16 sectors and therefore cell B19, with only 52% capacity loss.
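If you want to see where numbers like 67% and 52% come from, here is a rough sketch of the usual raidz allocation rule: split the block into sectors, add parity sectors for every stripe row, then round the total up to a multiple of (parity + 1). This is my reconstruction of the rule and not taken from the table itself, and it ignores compression and metadata, but it does reproduce the two values quoted above. The function name is made up.

```shell
#!/bin/sh
# Capacity lost to parity and padding for one block on raidz.
# Args: drives, parity level (2 for raidz2), ashift, block size in bytes.
raidz_loss_percent() {
    drives=$1; parity=$2; ashift=$3; bytes=$4
    sector=$((1 << ashift))
    data=$(( (bytes + sector - 1) / sector ))     # data sectors
    width=$((drives - parity))                    # data sectors per stripe row
    rows=$(( (data + width - 1) / width ))        # stripe rows needed
    total=$((data + rows * parity))               # data plus parity sectors
    pad=$((parity + 1))
    total=$(( (total + pad - 1) / pad * pad ))    # round up to multiple of parity+1
    # Share of allocated sectors that is not data, rounded to whole percent.
    echo $(( (100 * (total - data) + total / 2) / total ))
}

raidz_loss_percent 4 2 12 8192   # prints 67
raidz_loss_percent 4 2 9 8192    # prints 52
```

With ashift=12 the 8K block is 2 data sectors plus 2 parity sectors, padded to 6, so only 2 of 6 sectors hold data. With ashift=9 it is 16 data plus 16 parity sectors, padded to 33.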

Where do I set/change that, in the GUI or with a CLI command (probably both ways, since the GUI is just a picture of what is going on underneath)?

You can use

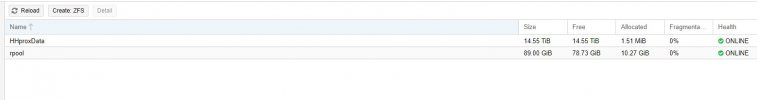

zfs list used,available YourPool to list the pool size. Add "used" and "available" and you get your pool size. Multiply that that with 0.8 or 0.9 and use it as the size of the quota. You can set the quota for a pool using this command:

zfs set quota=1234G YourPool
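As a worked example of that calculation, here is a small sketch. The function quota_bytes is made up for illustration, and the byte values in the last line are invented numbers, not from a real pool. The commented-out lines show how the inputs could be read in exact bytes with zfs get -Hp, assuming your pool is called YourPool.

```shell
#!/bin/sh
# Quota = (used + available) * percent / 100, all in bytes.
# quota_bytes is a hypothetical helper name.
quota_bytes() {
    used=$1; avail=$2; percent=$3
    echo $(( (used + avail) * percent / 100 ))
}

# On a real system the inputs could come from (YourPool is a placeholder):
#   used=$(zfs get -Hp -o value used YourPool)
#   avail=$(zfs get -Hp -o value available YourPool)
#   zfs set quota="$(quota_bytes "$used" "$avail" 80)" YourPool

# Example with invented numbers: 400 GB used, 600 GB available, 80% quota.
quota_bytes 400000000000 600000000000 80   # prints 800000000000
```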

There are way more quota options. You can set them for users/groups, choose whether snapshots should be included or not, ...