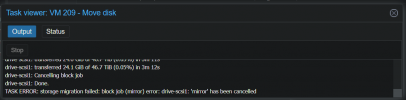

I have a very large VM disk on my pool. I wanted to move the data, but after some time the transfer rate dropped from ~120 MB/s to ~20 MB/s.

I restarted the VM, but it stops during boot since it cannot mount the virtual disk:

Mounting it after boot is not possible either:

```
root@fileserver:~# mount /dev/sda1 /mnt/vhd0/
mount: /mnt/vhd0: can't read superblock on /dev/sda1.
```

One HDD showed as degraded, and zpool status showed errors (READ, WRITE and CKSUM; SMART was still fine, though).

So I first cleared the errors:

```
zpool clear -F storagepool0
```

And then started a scrub:

```
zpool scrub storagepool0
```

The same HDD then showed as degraded again, although the pool was ONLINE. I already have a potential replacement HDD, but the hardware actually seems fine. The Proxmox server has always been shut down and rebooted gracefully.

This is what I currently get (with a scrub running again, just for the record):

```
root@proxmox:~# sudo zpool status -v storagepool0
  pool: storagepool0
 state: ONLINE
status: One or more devices has experienced an error resulting in data
        corruption. Applications may be affected.
action: Restore the file in question if possible. Otherwise restore the
        entire pool from backup.
   see: https://openzfs.github.io/openzfs-docs/msg/ZFS-8000-8A
  scan: scrub in progress since Sat Dec 17 14:40:43 2022
        11.1T scanned at 507M/s, 10.0T issued at 457M/s, 63.0T total
        0B repaired, 15.95% done, 1 days 09:43:40 to go
config:

        NAME                                          STATE     READ WRITE CKSUM
        storagepool0                                  ONLINE       0     0     0
          raidz1-0                                    ONLINE       0     0     0
            ata-WDC_WD60EFRX-68MYMN1_WD-WX31D743YL1P  ONLINE       0     0     6
            ata-WDC_WD60EFRX-68MYMN1_WD-WXK1H641YJ3U  ONLINE       0     0     6
            ata-WDC_WD60EFRX-68L0BN1_WD-WX11D86HUHAJ  ONLINE       0     0     6
            ata-WDC_WD60EFRX-68MYMN1_WD-WX11DC4FKEYC  ONLINE       0     0     6
            ata-WDC_WD60EFRX-68MYMN1_WD-WX61D65NNCXV  ONLINE       0     0     6
            ata-WDC_WD60EFRX-68MYMN1_WD-WXK1H645X9YJ  ONLINE       0     0     6
            ata-WDC_WD60EFRX-68L0BN1_WD-WX11D76EPRCF  ONLINE       0     0     6
            ata-WDC_WD60EFRX-68MYMN1_WD-WX11D259V25T  ONLINE       0     0     6
            ata-WDC_WD60EFRX-68MYMN1_WD-WX31D743YN91  ONLINE       0     0     6
            ata-WDC_WD60EFRX-68MYMN1_WD-WX11DB4H8277  ONLINE       0     0     6
            ata-WDC_WD60EFRX-68MYMN1_WD-WXK1H6432F0C  ONLINE       0     0     6
            ata-WDC_WD60EFRX-68TGBN1_WD-WX31DC46JKJK  ONLINE       0     0     6

errors: Permanent errors have been detected in the following files:

        storagepool0/vm-209-disk-0:<0x1>
```

The zpool history is attached; this happened in December 2022. I do not have a backup, since the VM disk mostly contains media data (~40 TB, though).

My fileserver cannot access the VM disk at all.

Is there any way to save it? Also, I just do not understand what happened here, since no hardware failed and ZFS is known for its remarkable resilience.
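For what it's worth, the scrub ETA in the status output is consistent with the issue rate. The sketch below only reproduces that arithmetic. It assumes zpool estimates the remaining time as (total - issued) / issue rate and that its `T` and `M` suffixes mean TiB and MiB; `scrub_eta` is a hypothetical helper, not a ZFS command.

```shell
#!/bin/sh
# Hypothetical helper (not part of ZFS): estimate the time left on a scrub.
# Assumption: remaining = total * (1 - done) / issue rate, with zpool's
# "T" and "M" suffixes read as TiB and MiB.
scrub_eta() {
    # $1 = total in TiB, $2 = percent done, $3 = issue rate in MiB/s
    awk -v total="$1" -v pct="$2" -v rate="$3" 'BEGIN {
        # Data still to be issued, converted from TiB to MiB
        remaining_mib = total * (1 - pct / 100) * 1024 * 1024
        secs = int(remaining_mib / rate)
        rest = secs % 86400
        # Same "D days HH:MM:SS" layout that zpool status prints
        printf "%d days %02d:%02d:%02d to go\n", int(secs / 86400),
            int(rest / 3600), int((rest % 3600) / 60), rest % 60
    }'
}

# Values copied from the scrub line: 63.0T total, 15.95% done, 457M/s issued
scrub_eta 63.0 15.95 457
```

With these values it prints `1 days 09:44:56 to go`, within about a minute of the reported `1 days 09:43:40`; the small gap is most likely because the displayed figures are rounded.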