Hello!

I have a problem. My server previously ran Windows Server 2016. I installed new disks, put Proxmox 8 on them, and everything worked for more than a month. Then one day I needed to recover some files from the old system. After booting into Windows, I opened Disk Management, which prompted "You must initialize a disk before Logical Disk Manager can access it", and I hastily clicked Yes. After rebooting, I found that the guest systems stored on the DATA disk no longer boot, and the disk shows as LVM inactive. That is when I realized I had erased the partition table. How can I get it working again?

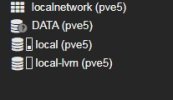

Details:

root@pve5:~# pveversion -v

proxmox-ve: 8.2.0 (running kernel: 6.8.4-2-pve)

pve-manager: 8.2.2 (running version: 8.2.2/9355359cd7afbae4)

proxmox-kernel-helper: 8.1.0

proxmox-kernel-6.8: 6.8.4-2

proxmox-kernel-6.8.4-2-pve-signed: 6.8.4-2

ceph-fuse: 17.2.7-pve3

corosync: 3.1.7-pve3

criu: 3.17.1-2

glusterfs-client: 10.3-5

ifupdown2: 3.2.0-1+pmx8

ksm-control-daemon: 1.5-1

libjs-extjs: 7.0.0-4

libknet1: 1.28-pve1

libproxmox-acme-perl: 1.5.0

libproxmox-backup-qemu0: 1.4.1

libproxmox-rs-perl: 0.3.3

libpve-access-control: 8.1.4

libpve-apiclient-perl: 3.3.2

libpve-cluster-api-perl: 8.0.6

libpve-cluster-perl: 8.0.6

libpve-common-perl: 8.2.1

libpve-guest-common-perl: 5.1.1

libpve-http-server-perl: 5.1.0

libpve-network-perl: 0.9.8

libpve-rs-perl: 0.8.8

libpve-storage-perl: 8.2.1

libspice-server1: 0.15.1-1

lvm2: 2.03.16-2

lxc-pve: 6.0.0-1

lxcfs: 6.0.0-pve2

novnc-pve: 1.4.0-3

proxmox-backup-client: 3.2.0-1

proxmox-backup-file-restore: 3.2.0-1

proxmox-kernel-helper: 8.1.0

proxmox-mail-forward: 0.2.3

proxmox-mini-journalreader: 1.4.0

proxmox-offline-mirror-helper: 0.6.6

proxmox-widget-toolkit: 4.2.1

pve-cluster: 8.0.6

pve-container: 5.0.10

pve-docs: 8.2.1

pve-edk2-firmware: 4.2023.08-4

pve-esxi-import-tools: 0.7.0

pve-firewall: 5.0.5

pve-firmware: 3.11-1

pve-ha-manager: 4.0.4

pve-i18n: 3.2.2

pve-qemu-kvm: 8.1.5-5

pve-xtermjs: 5.3.0-3

qemu-server: 8.2.1

smartmontools: 7.3-pve1

spiceterm: 3.3.0

swtpm: 0.8.0+pve1

vncterm: 1.8.0

zfsutils-linux: 2.2.3-pve2

----------------------------------

root@pve5:~# ls -l /etc/lvm/archive

total 44

-rw------- 1 root root 936 Aug 22 15:56 DATA_00000-133896217.vg

-rw------- 1 root root 1386 Aug 22 15:56 DATA_00001-1903205959.vg

-rw------- 1 root root 1809 Aug 23 09:21 DATA_00002-875926760.vg

-rw------- 1 root root 2242 Aug 23 09:21 DATA_00003-830213952.vg

-rw------- 1 root root 2676 Aug 23 09:21 DATA_00004-1837955517.vg

-rw------- 1 root root 3110 Aug 23 09:21 DATA_00005-1055152557.vg

-rw------- 1 root root 3538 Aug 28 15:40 DATA_00006-1350761795.vg

-rw------- 1 root root 3971 Aug 28 15:40 DATA_00007-1804075052.vg

-rw------- 1 root root 3474 Aug 22 14:33 pve_00000-1688680760.vg
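Two of the DATA archives above share the same timestamp (Aug 28 15:40), so modification time alone does not say which one is the newest. Below is a minimal Python sketch, assuming the `<VG>_<counter>-<random>.vg` naming seen in this listing, that picks the file with the highest counter for a given VG. `newest_archive` is a hypothetical helper, not an LVM tool; LVM's own `vgcfgrestore --list DATA` shows the same archives together with their descriptions. Keep in mind that each archive is written *before* a metadata change, so the most recent state is normally in `/etc/lvm/backup/DATA` if that file still exists.

```python
import re
from pathlib import Path

# Assumed archive naming: <VG name>_<5-digit counter>-<random number>.vg
ARCHIVE_RE = re.compile(r"^(?P<vg>.+)_(?P<seq>\d{5})-\d+\.vg$")


def newest_archive(names, vg):
    """Return the archive name with the highest counter for `vg`, or None."""
    best, best_seq = None, -1
    for name in names:
        m = ARCHIVE_RE.match(name)
        if m and m.group("vg") == vg and int(m.group("seq")) > best_seq:
            best, best_seq = name, int(m.group("seq"))
    return best


if __name__ == "__main__":
    archive_dir = Path("/etc/lvm/archive")
    # Only file names are inspected; nothing on disk is modified.
    names = [p.name for p in archive_dir.glob("*.vg")] if archive_dir.is_dir() else []
    print(newest_archive(names, "DATA"))
```

Sorting by the counter rather than by mtime avoids the tie between `DATA_00006` and `DATA_00007`.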

------------------------------

root@pve5:~# lvscan

ACTIVE '/dev/pve/data' [<319.61 GiB] inherit

ACTIVE '/dev/pve/swap' [8.00 GiB] inherit

ACTIVE '/dev/pve/root' [96.00 GiB] inherit

-------------------------------

root@pve5:~# pvs

PV VG Fmt Attr PSize PFree

/dev/sdb3 pve lvm2 a-- <446.13g 16.00g

--------------------------------

root@pve5:~# vgs

VG #PV #LV #SN Attr VSize VFree

pve 1 3 0 wz--n- <446.13g 16.00g

--------------------------------

root@pve5:~# lvs

LV VG Attr LSize Pool Origin Data% Meta% Move Log Cpy%Sync Convert

data pve twi-a-tz-- <319.61g 0.00 0.52

root pve -wi-ao---- 96.00g

swap pve -wi-ao---- 8.00g
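`pvs`, `vgs` and `lvs` above report only the `pve` VG on `/dev/sdb3`, so LVM no longer detects the DATA physical volume at all. The archived metadata still records that PV's UUID, the device it was last seen on, and its size. Below is a minimal Python sketch for pulling those fields out of an archive file. It assumes the standard LVM2 text metadata layout (`physical_volumes { pv0 { id = ... device = ... } }`, with `dev_size` and `pe_start` in 512-byte sectors); the archive contents are not shown in this post, and `archived_pvs` is a hypothetical helper. It only reads the file.

```python
import re
import sys

# One PV stanza such as "pv0 { ... }" inside physical_volumes (no nested braces).
PV_BLOCK_RE = re.compile(r"^\s*(pv\d+)\s*\{(.*?)^\s*\}", re.S | re.M)
# Selected key = value lines; quotes and trailing "# ..." comments are dropped.
FIELD_RE = re.compile(
    r'^\s*(id|device|dev_size|pe_start|pe_count)\s*=\s*"?([^"\n#]*)"?', re.M
)


def archived_pvs(text):
    """Return one dict per PV stanza with the fields found in it."""
    pvs = []
    for name, body in PV_BLOCK_RE.findall(text):
        fields = {key: value.strip() for key, value in FIELD_RE.findall(body)}
        fields["name"] = name
        pvs.append(fields)
    return pvs


if __name__ == "__main__":
    # Usage: python3 archived_pvs.py /etc/lvm/archive/DATA_00007-1804075052.vg
    if len(sys.argv) > 1:
        with open(sys.argv[1], encoding="utf-8") as f:
            for pv in archived_pvs(f.read()):
                print(pv)
```

The `device` value is only a hint recorded when the metadata was written; the PV UUID is what identifies the volume.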

-------------------------------

root@pve5:~# nano /etc/lvm/lvm.conf

# This is an example configuration file for the LVM2 system.

# It contains the default settings that would be used if there was no

# /etc/lvm/lvm.conf file.

#

# Refer to 'man lvm.conf' for further information including the file layout.

#

# Refer to 'man lvm.conf' for information about how settings configured in

# this file are combined with built-in values and command line options to

# arrive at the final values used by LVM.

#

# Refer to 'man lvmconfig' for information about displaying the built-in

# and configured values used by LVM.

#

# If a default value is set in this file (not commented out), then a

# new version of LVM using this file will continue using that value,

# even if the new version of LVM changes the built-in default value.

#

# To put this file in a different directory and override /etc/lvm set

# the environment variable LVM_SYSTEM_DIR before running the tools.

#

# N.B. Take care that each setting only appears once if uncommenting

# example settings in this file.

# Configuration section config.

# How LVM configuration settings are handled.

config {

# Configuration option config/checks.

# If enabled, any LVM configuration mismatch is reported.

# This implies checking that the configuration key is understood by

# LVM and that the value of the key is the proper type. If disabled,

# any configuration mismatch is ignored and the default value is used

# without any warning (a message about the configuration key not being

# found is issued in verbose mode only).

# This configuration option has an automatic default value.

# checks = 1

# Configuration option config/abort_on_errors.

# Abort the LVM process if a configuration mismatch is found.

# This configuration option has an automatic default value.

# abort_on_errors = 0

# Configuration option config/profile_dir.

# Directory where LVM looks for configuration profiles.

# This configuration option has an automatic default value.

# profile_dir = "/etc/lvm/profile"

___________________

Please help.