Hi, I'm having a problem with a three-node cluster. Each node has several interfaces connected to the same switch, each interface dedicated to a different service.

In each node, there is:

1 pair of 10 GbE NICs with IP range 172.16.0.x/24, named vmbr0v4 (i.e., on VLAN 4), connected to 10 GbE ports on the switch, for internet communication and the Ceph frontend.

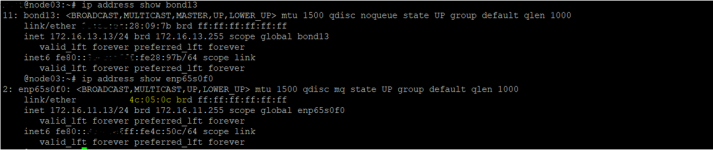

1 pair of 10 GbE NICs with IP range 172.16.13.x/24, named bond13, also connected to 10 GbE ports on the switch, for the Ceph backend.

1 NIC with 1 Gbit/s bandwidth, with IP range 172.16.11.x/24, for Proxmox Ring0 cluster communication.

1 NIC with 1 Gbit/s bandwidth, with IP range 172.16.12.x/24, for Proxmox Ring1 cluster communication.

Everything seems to work well except for one thing: when testing speeds on the 172.16.13.x network, one or more of the nodes receive at 1 Gbit/s instead of the expected 10 Gbit/s. Tracing the traffic, it seems that the receiving node gets the data on one of its Ring0/Ring1 interfaces instead of on bond13.

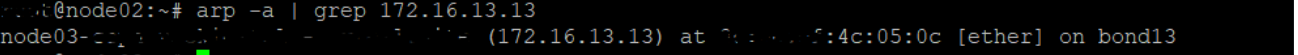
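One way to confirm which interface really carries the test traffic is to compare the kernel's per-interface receive counters on the receiving node before and after the speed test. The sketch below reads the standard Linux sysfs counters under `/sys/class/net/<iface>/statistics/rx_bytes`; the function names `rx_snapshot` and `rx_during` and the `SYSFS_NET` override are hypothetical helpers made up for this example, not part of any tool.

```shell
#!/bin/sh
# Hypothetical helper: print "<iface> <rx_bytes>" for each interface given.
# SYSFS_NET is a hypothetical override (handy for testing); it defaults to
# the kernel's sysfs network directory.
rx_snapshot() (
  for iface in "$@"; do
    printf '%s %s\n' "$iface" \
      "$(cat "${SYSFS_NET:-/sys/class/net}/$iface/statistics/rx_bytes")"
  done
)

# Hypothetical helper: rx_during SECONDS IFACE...
# Takes one snapshot, waits SECONDS, takes another, and prints how many
# bytes each interface received in between.
rx_during() (
  seconds=$1
  shift
  before=$(rx_snapshot "$@")
  sleep "$seconds"
  after=$(rx_snapshot "$@")
  # First occurrence of an interface stores the old counter; the second
  # prints the difference (%.0f avoids scientific notation for big counts).
  printf '%s\n%s\n' "$before" "$after" |
    awk '($1 in first) { printf "%s %.0f\n", $1, $2 - first[$1]; next }
         { first[$1] = $2 }'
)
```

For example, running `rx_during 30 bond13 eno3 eno4` on the receiving node while a 30-second test is in progress (where `eno3`/`eno4` stand in for whatever the Ring0/Ring1 NICs are actually called) should show the bulk of the bytes on whichever interface the traffic really arrives on.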

As I understand it, this points to a problem in the ARP table. Indeed, if I check the ARP table on the sending node, the MAC address listed for the receiving node's 172.16.13.<n> address is wrong: it points to one of the Ring<n> NICs instead. So I flush the ARP table with the command:

`ip -s -s neigh flush all`

But the wrong entry shows up in the ARP table again.
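To check the sending node's entry against the MAC address that bond13 really has, a small filter like the one below could extract the `lladdr` field for one IP from `ip neigh` output. `mac_for_ip` is a hypothetical name; the real MAC of bond13 on the receiving node can be read from `/sys/class/net/bond13/address`.

```shell
#!/bin/sh
# Hypothetical helper: read `ip neigh` output on stdin and print the
# link-layer address recorded for the IPv4 address given as $1.
# Entries without an lladdr (e.g. FAILED/INCOMPLETE) print nothing.
mac_for_ip() {
  awk -v ip="$1" '$1 == ip {
    for (i = 2; i < NF; i++) if ($i == "lladdr") print $(i + 1)
  }'
}

# Usage sketch (replace 172.16.13.<n> with the receiving node's address):
#   on the sending node:   ip neigh show | mac_for_ip 172.16.13.<n>
#   on the receiving node: cat /sys/class/net/bond13/address
# If the two values differ, the sender has learned another NIC's MAC.
```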

I was led to believe that this behavior only happens when the two conflicting interfaces are in the same subnet (a.k.a. 'the ARP problem'), but that is not the case here...
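A possible explanation, assuming the bond13 ports and the Ring0/Ring1 ports sit in the same broadcast domain on the switch (for example, all untagged in the same VLAN): with the Linux default `arp_ignore = 0`, a node answers an ARP request for any of its local addresses on whichever interface the request arrives on, so different IP subnets alone do not prevent this. One commonly suggested mitigation is the stricter ARP sysctls sketched below; the helper name `write_arp_conf` and the file name `90-arp-flux.conf` are arbitrary choices for illustration. Putting those switch ports in separate VLANs would be the other way to keep the ARP broadcasts apart.

```shell
#!/bin/sh
# Hypothetical helper: write a sysctl.d drop-in enabling stricter ARP
# behaviour on all interfaces. The kernel uses the max of conf/all and
# conf/<iface> for both settings, so setting "all" is enough.
#   arp_ignore = 1   : reply only if the target IP is configured on the
#                      interface the ARP request arrived on
#   arp_announce = 2 : always use the best local address for the outgoing
#                      interface as the source of ARP requests
# $1 is the target directory (defaults to /etc/sysctl.d).
write_arp_conf() (
  dir=${1:-/etc/sysctl.d}
  cat > "$dir/90-arp-flux.conf" <<'EOF'
net.ipv4.conf.all.arp_ignore = 1
net.ipv4.conf.all.arp_announce = 2
EOF
)
```

Run as root on every node as `write_arp_conf && sysctl --system`, then flush the neighbour table again with `ip -s -s neigh flush all`; if this is the cause, the sender should then learn bond13's MAC for the 172.16.13.x addresses.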
