Hello everyone,

I'm new to the wonderful proxmox ecosystem.

I recently configured a proxmox cluster with ceph, but I don't understand the remaining disk space values displayed.

Here is a description of my configuration :

Ceph configuration :

(let me know if more information is needed)

So here's my problem:

I wanted to use the following calculator: https://florian.ca/ceph-calculator/

In order to have a “Safe Cluster” and not a “Risky Cluster”.

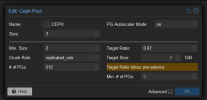

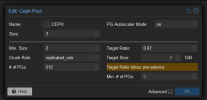

To do this, I applied the ratio of 0.67 to the pool I created as shown in the figure below:

I expected to find ~4.67TB and I see 7.85TB (although this number also includes the space reserved for the OS (~1.21TB).

Is the ratio correctly applied? I'm happy to have lots of disk space, but I feel like I'm in a “risky cluster”.

Thank you very much in advance for your answers!

(I'm using enterprise repositories, and I have tickets. Do you believe it's usefull to ask the support ?)

I'm new to the wonderful Proxmox ecosystem.

I recently configured a Proxmox cluster with Ceph, but I don't understand the remaining disk space values displayed.

Here is a description of my configuration:

- PVE1/PVE2/PVE3 (3 nodes)
- Dell PowerEdge servers (Proxmox VE 8.3.3 installed on NVMe, RAID1 on a BOSS card, 2x 480 GB)
- "VM + Management Access Network": 10 Gbps, via switch
- "Ceph Public + Cluster Network": 25 Gbps DAC fiber, full mesh with RSTP
- "Corosync Cluster Network": 1 Gbps Ethernet, full mesh with RSTP
- Per node: 4x 2 TB enterprise SSDs (~1.75 TB each)
- Per node: 1 socket, 48 CPUs
- Per node: 400 GB RAM

Ceph configuration:

Code:

    [global]
        auth_client_required = cephx
        auth_cluster_required = cephx
        auth_service_required = cephx
        cluster_network = 10.0.100.1/24
        fsid = c4f9d386-5bc1-4663-9e4d-68ccf76a251d
        mon_allow_pool_delete = true
        mon_host = 10.0.100.1 10.0.100.2 10.0.100.3
        ms_bind_ipv4 = true
        ms_bind_ipv6 = false
        osd_pool_default_min_size = 2
        osd_pool_default_size = 3
        public_network = 10.0.100.1/24

    [client]
        keyring = /etc/pve/priv/$cluster.$name.keyring

    [client.crash]
        keyring = /etc/pve/ceph/$cluster.$name.keyring

    [mon.pve1]
        public_addr = 10.0.100.1

    [mon.pve2]
        public_addr = 10.0.100.2

    [mon.pve3]
        public_addr = 10.0.100.3

Code:

    # begin crush map
    tunable choose_local_tries 0
    tunable choose_local_fallback_tries 0
    tunable choose_total_tries 50
    tunable chooseleaf_descend_once 1
    tunable chooseleaf_vary_r 1
    tunable chooseleaf_stable 1
    tunable straw_calc_version 1
    tunable allowed_bucket_algs 54

    # devices
    device 0 osd.0 class ssd
    device 1 osd.1 class ssd
    device 2 osd.2 class ssd
    device 3 osd.3 class ssd
    device 4 osd.4 class ssd
    device 5 osd.5 class ssd
    device 6 osd.6 class ssd
    device 7 osd.7 class ssd
    device 8 osd.8 class ssd
    device 9 osd.9 class ssd
    device 10 osd.10 class ssd
    device 11 osd.11 class ssd

    # types
    type 0 osd
    type 1 host
    type 2 chassis
    type 3 rack
    type 4 row
    type 5 pdu
    type 6 pod
    type 7 room
    type 8 datacenter
    type 9 zone
    type 10 region
    type 11 root

    # buckets
    host pve1 {
        id -3 # do not change unnecessarily
        id -4 class ssd # do not change unnecessarily
        # weight 6.98639
        alg straw2
        hash 0 # rjenkins1
        item osd.0 weight 1.74660
        item osd.1 weight 1.74660
        item osd.2 weight 1.74660
        item osd.3 weight 1.74660
    }
    host pve2 {
        id -5 # do not change unnecessarily
        id -6 class ssd # do not change unnecessarily
        # weight 6.98639
        alg straw2
        hash 0 # rjenkins1
        item osd.4 weight 1.74660
        item osd.5 weight 1.74660
        item osd.6 weight 1.74660
        item osd.7 weight 1.74660
    }
    host pve3 {
        id -7 # do not change unnecessarily
        id -8 class ssd # do not change unnecessarily
        # weight 6.98639
        alg straw2
        hash 0 # rjenkins1
        item osd.8 weight 1.74660
        item osd.9 weight 1.74660
        item osd.10 weight 1.74660
        item osd.11 weight 1.74660
    }
    root default {
        id -1 # do not change unnecessarily
        id -2 class ssd # do not change unnecessarily
        # weight 20.95917
        alg straw2
        hash 0 # rjenkins1
        item pve1 weight 6.98639
        item pve2 weight 6.98639
        item pve3 weight 6.98639
    }

    # rules
    rule replicated_rule {
        id 0
        type replicated
        step take default
        step chooseleaf firstn 0 type host
        step emit
    }

    # end crush map

(Let me know if more information is needed.)

So here's my problem:

I wanted to use the following calculator: https://florian.ca/ceph-calculator/

In order to have a “Safe Cluster” and not a “Risky Cluster”.

To do this, I applied the ratio of 0.67 to the pool I created as shown in the figure below:

I expected to find ~4.67 TB, but I see 7.85 TB (although this number also includes the space reserved for the OS, ~1.21 TB).
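For reference, here is a minimal sketch of the arithmetic behind my expected value. It assumes that the CRUSH weights above are per-OSD capacities in TiB, that the pool keeps 3 replicas (as in `osd_pool_default_size`), and that multiplying the replicated capacity by 0.67 is the right way to apply the calculator's ratio, which is exactly the part I'm unsure about. The constant and function names are only illustrative.

```python
# Assumed inputs, taken from the CRUSH map and ceph.conf in this post.
HOSTS = 3
OSDS_PER_HOST = 4
OSD_WEIGHT_TIB = 1.74660  # CRUSH weight per OSD, assumed to be TiB
REPLICA_SIZE = 3          # osd_pool_default_size
TARGET_RATIO = 0.67       # ratio I applied to the pool (assumption under test)


def raw_capacity(hosts: int, osds_per_host: int, osd_weight: float) -> float:
    """Total raw capacity across all OSDs."""
    return hosts * osds_per_host * osd_weight


def replicated_capacity(raw: float, replica_size: int) -> float:
    """Capacity left once every object is stored replica_size times."""
    return raw / replica_size


def safe_capacity(replicated: float, ratio: float) -> float:
    """Capacity I expected to see after applying the ratio."""
    return replicated * ratio


raw = raw_capacity(HOSTS, OSDS_PER_HOST, OSD_WEIGHT_TIB)
replicated = replicated_capacity(raw, REPLICA_SIZE)
safe = safe_capacity(replicated, TARGET_RATIO)

print(f"raw:        {raw:.2f}")         # 20.96
print(f"replicated: {replicated:.2f}")  # 6.99
print(f"safe:       {safe:.2f}")        # 4.68
```

Under these assumptions the result is about 4.68, close to the ~4.67 TB I expected and well below the 7.85 TB displayed.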

Is the ratio correctly applied? I'm happy to have lots of disk space, but I feel like I'm in a “risky cluster”.

Thank you very much in advance for your answers!

(I'm using the enterprise repositories and I have support tickets. Do you think it would be useful to ask support?)
